Can AI Really Predict and Prevent Future Crimes?

In a world where technology infiltrates every aspect of our lives, the question isn’t just if artificial intelligence (AI) can fight crime—it’s whether it can anticipate and stop criminal acts before they happen. This notion, once the stuff of science fiction, is edging closer to reality. But how reliable is AI in predicting human behavior? Are we ready—technologically, legally, and ethically—to entrust machines with such power? Let’s embark on a detailed exploration to demystify AI’s potential and pitfalls in predicting and preventing future crimes.

Introduction

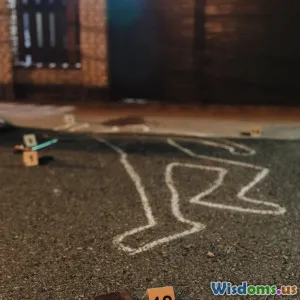

The concept of predicting crime ahead of time isn’t new. The idea entered the public imagination through films like Minority Report, where “Pre-Cogs” foresee crimes before they happen. Today, AI-based predictive policing algorithms aim to turn fiction into fact by analyzing enormous datasets for patterns that foreshadow criminal acts. These technologies hold promise, but the reality is more nuanced.

Understanding AI’s role in crime prevention is crucial as law enforcement agencies, policymakers, and society grapple with balancing security, privacy, and justice.

What is Predictive Policing?

Predictive policing involves the use of analytics, machine learning algorithms, and historical crime data to forecast where and when crimes are likely to occur and even identify potential offenders. It generally operates through two main approaches:

- Forecasting Crime Locations: Using crime hot spot analysis, incident reports, weather, time, and socioeconomic data to determine which neighborhoods might experience increased criminal activity.

- Predicting Potential Offenders: Algorithms attempt to identify individuals at risk of offending or reoffending based on behavioral data, social connections, and prior records.
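To make the location-forecasting approach more concrete, here is a minimal Python sketch of grid-based hot spot scoring. It assumes incidents arrive as hypothetical `(x, y, day)` tuples and weights each past incident by an exponential time decay, so recent crimes count more than old ones. The function names, the grid size, and the half-life parameter are illustrative choices, not the method of any particular vendor.

```python
import math
from collections import defaultdict


def hotspot_scores(incidents, cell_size, now, half_life_days):
    """Score square grid cells by time-decayed counts of past incidents.

    incidents: iterable of (x, y, day) tuples, with x and y in the same
    units as cell_size (e.g. metres) and day as a number.
    """
    decay = math.log(2) / half_life_days  # an incident's weight halves every half_life_days
    scores = defaultdict(float)
    for x, y, day in incidents:
        age = now - day
        if age < 0:
            continue  # skip events recorded after the forecast date
        cell = (int(x // cell_size), int(y // cell_size))
        scores[cell] += math.exp(-decay * age)
    return dict(scores)


def top_cells(scores, k):
    """Return the k highest-scoring cells, e.g. as candidate patrol areas."""
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical incidents: three recent ones close together, one old and far away.
incidents = [(120, 40, 98), (130, 45, 99), (125, 42, 100), (900, 900, 10)]
scores = hotspot_scores(incidents, cell_size=100, now=100, half_life_days=7)
print(top_cells(scores, 1))  # [(1, 0)]
```

The half-life is the key design choice in a sketch like this: a short one chases recent spikes, while a long one approaches a static map of historical hot spots.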

Real-World Examples

- PredPol: One of the earliest commercially available predictive policing tools, PredPol analyzes the location, time, and type of recent crimes to highlight high-risk areas. U.S. police departments, including those in Los Angeles and Atlanta, have used PredPol to decide where to deploy patrols.

- Chicago’s Strategic Subject List: Developed by the Chicago Police Department, this system used machine learning to score individuals’ likelihood of being involved in violent crime, either as a perpetrator or as a victim.

These systems aim to shift policing from reactive to proactive, optimizing limited resources.
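PredPol’s internal model is not detailed in this article. As an illustration of how “recent crimes raise near-term risk” can be modeled, the sketch below assumes a self-exciting (Hawkes) point process, a model family used in academic research on near-repeat crimes such as burglary. The parameter values and event times are invented for the example.

```python
import math


def intensity(t, past_event_times, mu, theta, omega):
    """Conditional intensity of a self-exciting (Hawkes) process with an
    exponential kernel: mu + sum(theta * omega * exp(-omega * (t - t_i))).

    mu:    background rate of events per day in this area
    theta: expected number of follow-on events triggered by each event
    omega: how quickly that triggering effect fades (per day)
    """
    triggered = sum(
        theta * omega * math.exp(-omega * (t - ti))
        for ti in past_event_times
        if ti < t  # only events strictly before t can trigger new ones
    )
    return mu + triggered


# Hypothetical areas: A had burglaries on days 8 and 9, B only on day 1.
params = dict(mu=0.1, theta=0.5, omega=0.5)
rate_a = intensity(10, [8, 9], **params)
rate_b = intensity(10, [1], **params)
print(round(rate_a, 3), round(rate_b, 3))  # 0.344 0.103
```

Both areas share the same background rate, so the gap on day 10 comes entirely from how recently each one saw crime.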

How AI Predicts Crime: The Technology Behind It

Artificial intelligence, particularly machine learning, thrives on pattern recognition. Crime often follows patterns: temporal, spatial, and social. When trained on historical crime data, social media activity, economic indicators, and even weather records, predictive models can surface statistical correlations that humans would not easily spot.
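As a toy illustration of finding statistical correlations, the sketch below fits a logistic regression with plain gradient descent on made-up data. Each row is a hypothetical area-day with two invented features (incidents in the previous 30 days, and whether it is a weekend) plus a label for whether an incident was reported. It shows the general technique under these assumptions, not a model any agency is known to use.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Fit logistic-regression weights and bias by batch gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Prediction error for this row: predicted probability minus label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict_proba(w, b, x):
    """Predicted probability of an incident for feature vector x."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical area-days: [incidents in previous 30 days, is_weekend], label.
X = [[0, 0], [1, 0], [0, 1], [5, 0], [6, 1], [7, 0]]
y = [0, 0, 0, 1, 1, 1]
w, b = fit_logistic(X, y)
print(predict_proba(w, b, [6, 0]) > 0.5)  # True
```

The learned weight on prior incidents is a correlation, not a cause: the model would give the same weight whether past incidents reflect real crime or simply where police were recording it.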

Techniques include:

- Data Mining and Pattern Recognition: Sifting through vast pools of structured and unstructured data.

- Social Network Analysis: Mapping relationships and interactions to identify potential gang activity or radicalization.

- Natural Language Processing (NLP): Scrutinizing language on social media or intercepted communications to detect threats or signals of violent intent.
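Social network analysis often starts with simple graph measures. The sketch below builds an undirected graph from hypothetical association records and computes two of them: degree centrality (the share of everyone else a person is directly linked to) and the set of people within a given number of links. The data, placeholder names, and choice of measures are assumptions for illustration; the article does not specify which measures any deployed system uses.

```python
from collections import defaultdict, deque


def build_graph(edges):
    """Undirected graph as an adjacency dict built from (a, b) pairs."""
    graph = defaultdict(set)
    for a, b in edges:
        graph[a].add(b)
        graph[b].add(a)
    return dict(graph)


def degree_centrality(graph):
    """Share of other people each person is directly connected to."""
    n = len(graph)
    if n < 2:
        return {node: 0.0 for node in graph}
    return {node: len(nbrs) / (n - 1) for node, nbrs in graph.items()}


def within_hops(graph, start, max_hops):
    """People reachable from start in at most max_hops links (breadth-first search)."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if dist[node] == max_hops:
            continue  # don't expand beyond the hop limit
        for nbr in graph.get(node, ()):
            if nbr not in dist:
                dist[nbr] = dist[node] + 1
                queue.append(nbr)
    dist.pop(start)
    return set(dist)


# Hypothetical association links (e.g. co-arrests) between placeholder people.
g = build_graph([("A", "B"), ("A", "C"), ("A", "D"), ("D", "E")])
print(degree_centrality(g)["A"])       # 0.75
print(sorted(within_hops(g, "B", 2)))  # ['A', 'C', 'D']
```

These scores describe network position, not intent, which is consistent with Chicago’s list scoring people as likely victims as well as likely perpetrators.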

The predictive strength generally hinges on data quality, diversity, and how well the system adapts to changes.

Successes and Case Studies

Although the field is still emerging, several agencies report promising results:

- Los Angeles Police Department (LAPD): Using PredPol, the LAPD reported reductions in property crime in specific hot spots, allowing officers to be redeployed.

- Houston Crime Reduction: A 2017 pilot study using AI-enriched data reportedly showed a 19% drop in violent crime in targeted neighborhoods.

- UK Metropolitan Police: Has used AI to analyze burglary and violent-offense patterns to improve patrol efficiency.

These examples reflect AI’s potential to augment human decision-making, not replace it.
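Headline figures such as a 19% drop are easier to interpret against a comparison group, since crime can fall citywide for reasons unrelated to any algorithm. A common check, sketched below with invented counts, is a difference-in-differences comparison between targeted areas and similar untargeted areas over the same period. The numbers are hypothetical and are not taken from any of the programs above.

```python
def percent_change(before, after):
    """Percentage change from before to after."""
    return (after - before) * 100.0 / before


def diff_in_diff(treated_before, treated_after, control_before, control_after):
    """Change in targeted areas minus change in comparison areas (incident counts).

    A negative result suggests a larger decline where the tool was used,
    assuming both groups would otherwise have followed the same trend.
    """
    return (treated_after - treated_before) - (control_after - control_before)


# Hypothetical violent-crime counts for one year before and after a pilot.
print(percent_change(200, 162))          # -19.0 in targeted areas
print(percent_change(180, 162))          # -10.0 in comparison areas
print(diff_in_diff(200, 162, 180, 162))  # -20
```

In this made-up case, 18 of the 38-incident decline in targeted areas mirrors the comparison trend, and even the remaining 20 is only attributable to the pilot if the two groups really were comparable.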

Challenges and Ethical Concerns

1. Biased Data and Discrimination

AI systems are only as unbiased as their input data. Historic crime data often reflects structural inequalities, over-policing, or underreporting in marginalized communities. When AI trains on these datasets, it risks perpetuating or exacerbating racial profiling and unjust targeting.
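The feedback-loop risk can be shown with a deliberately extreme toy simulation, in the spirit of the critique in Lum & Isaac (2016). It assumes one patrol, two districts, and that crime is recorded only where police are looking; real systems are less stark, and the function below is a hypothetical illustration rather than a model of any product.

```python
def simulate_feedback(true_rates, initial_counts, days):
    """Toy model of a predictive-policing feedback loop.

    Each day the single patrol goes to the district with the most *recorded*
    incidents, and only crime in the patrolled district gets recorded.
    true_rates: incidents per day actually occurring in each district.
    """
    counts = list(initial_counts)
    for _ in range(days):
        target = max(range(len(counts)), key=lambda i: counts[i])
        counts[target] += true_rates[target]  # only patrolled crime enters the data
    return counts


# Equal true crime, nearly equal starting records.
print(simulate_feedback([5, 5], [11, 10], 30))  # [161, 10]
# District 1 actually has more crime but starts with one fewer record.
print(simulate_feedback([4, 6], [11, 10], 30))  # [131, 10]
```

In both runs a one-incident head start decides where every later patrol goes, and the recorded data end up “confirming” that choice even when the other district has more crime.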

Example: A 2016 investigation by ProPublica found racial bias in COMPAS, a system used to predict recidivism: African American defendants who did not go on to reoffend were nearly twice as likely as white defendants to be misclassified as high risk.
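An error-rate audit of this kind compares false positive rates across groups: among people who did not reoffend, what share were labeled high risk? The sketch below computes that from synthetic records; the group labels and data are invented and do not reproduce ProPublica’s figures.

```python
from collections import defaultdict


def false_positive_rates(records):
    """False positive rate per group.

    records: iterable of (group, predicted_high_risk, reoffended) tuples.
    FPR = people labeled high risk who did NOT reoffend / all who did not reoffend.
    """
    flagged = defaultdict(int)
    did_not_reoffend = defaultdict(int)
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            did_not_reoffend[group] += 1
            if predicted_high_risk:
                flagged[group] += 1
    return {g: flagged[g] / did_not_reoffend[g] for g in did_not_reoffend}


# Synthetic records: (group, predicted_high_risk, reoffended).
records = [
    ("group_a", True, False), ("group_a", True, False),
    ("group_a", False, False), ("group_a", False, False), ("group_a", True, True),
    ("group_b", True, False), ("group_b", False, False),
    ("group_b", False, False), ("group_b", False, False), ("group_b", True, True),
]
print(false_positive_rates(records))  # {'group_a': 0.5, 'group_b': 0.25}
```

Equal false positive rates are only one fairness criterion. COMPAS’s developer disputed ProPublica’s conclusions by pointing to calibration (similar reoffense rates at each score), and when base rates differ between groups the two criteria generally cannot both be satisfied.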

2. Privacy and Surveillance

The extensive collection and analysis of data, sometimes including social media posts, cellphone tracking, and public surveillance, intrude on privacy rights, sparking debates on how far law enforcement should go.

3. Overreliance and Automation Bias

Police may place too much trust in AI recommendations, sidelining the discretion and contextual judgment that human officers provide.

4. Transparency and Accountability

Many predictive policing models operate as black boxes, making it difficult for external parties to audit and question decisions impacting civil liberties.

Can AI Prevent Crime or Just Predict?

The line between prediction and prevention is thin but critical. Predictive tools can inform law enforcement about "when and where" crimes may happen, but prevention requires action beyond forecasts. Key factors include community engagement, appropriate intervention programs, and judicial oversight.

Examples of Preventive Actions

- Police increasing visible presence in predicted hot spots to deter crime.

- Social services intervening with individuals flagged at risk to provide counseling or job training.

- Targeted community outreach and conflict resolution initiatives informed by AI analysis.

However, it remains paramount that these actions do not penalize people for crimes they have not committed.

The Future of AI in Crime Prediction and Prevention

Toward Ethical and Transparent AI

Researchers emphasize the importance of building fairer algorithms:

- Involving multidisciplinary teams—criminologists, ethicists, technologists.

- Auditing datasets for bias.

- Deploying explainable AI models that justify their predictions.
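One simple route to explanations is to use a model whose score decomposes into per-feature parts. The sketch below does this for a linear risk score; the feature names, weights, and bias are hypothetical, chosen only to show how an officer or auditor could see why a location was flagged.

```python
def explain_linear_score(weights, bias, features):
    """Split a linear risk score into per-feature contributions (weight * value).

    Returns the total score and the contributions sorted by absolute size.
    """
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical model weights and one hypothetical location.
weights = {"burglaries_last_30_days": 0.8, "is_night": 0.5, "days_since_last_incident": -0.05}
features = {"burglaries_last_30_days": 3, "is_night": 1, "days_since_last_incident": 10}
score, ranked = explain_linear_score(weights, bias=-1.0, features=features)
print(round(score, 2))  # 1.4
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

A linear model gives this breakdown exactly; for more complex models, post-hoc attribution methods can only approximate it.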

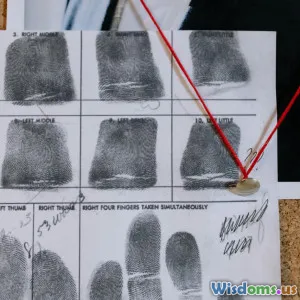

Integration with Crime Investigation Tools

AI-assisted video analysis, facial recognition, and behavioral prediction could work hand-in-hand with traditional investigation to speed case resolution.

Legal and Policy Frameworks

Lawmakers around the world face growing calls to create regulations that ensure AI systems uphold human rights, with oversight mechanisms to prevent misuse.

Community-Centered Approaches

Innovative programs blending AI insights with community policing may foster trust and more effective crime prevention.

Conclusion

Artificial intelligence holds transformative potential in criminology by predicting patterns and enabling proactive crime prevention. Real-world applications demonstrate promising improvements in resource allocation and early intervention. However, effective and just deployment demands careful attention to ethical dilemmas, bias mitigation, transparency, and respect for civil liberties.

Ultimately, AI alone cannot build safer societies; it must work as a tool paired with human judgment, societal values, and robust governance. A future where crimes are anticipated and curtailed ethically is possible, but only if technology serves humanity without undermining its fundamental rights.

References

- Lum, K., & Isaac, W. (2016). To predict and serve? Significance, 13(5), 14–19.

- Perry, W. L., McInnis, B., Price, C. C., Smith, S. C., & Hollywood, J. S. (2013). Predictive policing: The role of crime forecasting in law enforcement operations. RAND Corporation.

- ProPublica. (2016). Machine bias: There’s software used across the country to predict future criminals.

- Ferguson, A. G. (2017). The rise of big data policing: Surveillance, race, and the future of law enforcement. New York University Press.
