How Self-Driving Cars Tackle Pedestrian Surprises on City Streets

Explore how self-driving cars detect and react to unexpected pedestrian movements on busy city streets using advanced sensors and AI.

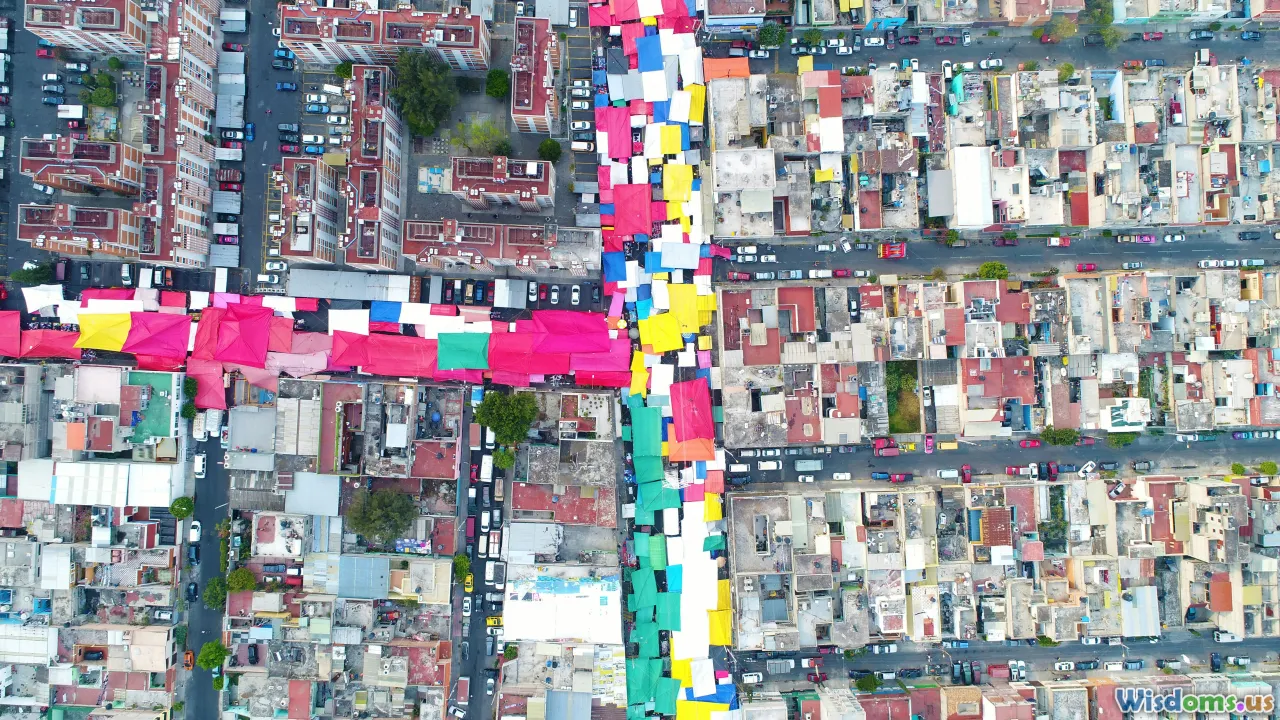

Navigating vibrant city streets is challenging for human drivers. For self-driving cars, it’s a monumental test of engineering and artificial intelligence. Pedestrians are unpredictable: children dart after a stray ball, commuters jaywalk between honking traffic, and everyday distractions lead people to step into streets unexpectedly. The cutting-edge systems in autonomous vehicles must decode and interpret these chaotic scenarios every second. How do they rise to this challenge? Let’s dive deep into how self-driving cars anticipate and handle pedestrian surprises on bustling urban roads.

Multi-Layered Sensor Fusion: The Digital "Senses"

Self-driving cars rely on a suite of sensors to perceive their surroundings. Typically, these include lidar (light detection and ranging), radar, cameras, ultrasonic sensors, and sometimes thermal imaging. Together, this sensor array acts as the vehicle’s eyes and ears, with abilities human senses lack, such as measuring precise distances and working in darkness.

- Lidar fires rapid laser pulses and times their reflections, generating a precise 3D map of the surroundings, fine enough to capture the subtle contour of a curb or the flutter of a pedestrian’s jacket.

- Cameras capture rich color images, essential for recognizing crosswalks, hand gestures, and sudden motions.

- Radar sees through fog, dust, and rain and measures an object’s speed directly, reliably detecting moving objects, even those that are partially hidden.

- Ultrasonic sensors, which time the echoes of short sound pulses, assist at low speeds and in tight spaces, making them useful for detecting people standing very close to the vehicle, such as someone right beside a bumper.
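The ranging principles behind lidar and radar come down to two textbook relations: lidar computes distance from a pulse’s round-trip time (d = c·t/2), and radar infers an object’s closing speed from the Doppler shift of its echo (v = f_d·c / (2·f₀)). The Python sketch below shows both. The function names are hypothetical, and the 77 GHz default carrier is an assumption, chosen because it sits in the band commonly used by automotive radar.

```python
SPEED_OF_LIGHT_MPS = 299_792_458.0  # speed of light in vacuum, m/s


def lidar_range_m(round_trip_s: float) -> float:
    """Distance to a reflecting surface from a lidar pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half the path.
    """
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def radar_closing_speed_mps(doppler_shift_hz: float, carrier_hz: float = 77e9) -> float:
    """Radial speed of a target from the Doppler shift of a radar echo.

    With transmitter and receiver co-located, the shift is f_d = 2 * v * f0 / c,
    so v = f_d * c / (2 * f0). A positive shift means the target is approaching.
    (The 77 GHz default carrier is an assumption.)
    """
    return doppler_shift_hz * SPEED_OF_LIGHT_MPS / (2.0 * carrier_hz)


if __name__ == "__main__":
    # A 100 ns round trip puts the reflecting surface roughly 15 m away.
    print(f"lidar range: {lidar_range_m(100e-9):.2f} m")
    # A 1.54 kHz shift on a 77 GHz carrier is a closing speed of about 3 m/s,
    # roughly a jogger's pace.
    print(f"radar closing speed: {radar_closing_speed_mps(1540.0):.2f} m/s")
```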

A practical illustration: Consider a Waymo vehicle passing through an intersection in Phoenix. Lidar picks out a child’s small silhouette moving rapidly from between parked cars, while radar confirms the object’s motion and helps rule out a stray shopping cart. Camera-based classifiers identify it as a human, likely a child, and ultrasonic sensors watch for any bystanders up close. Only by fusing all these sources can the autonomous car build a robust, nuanced "mental model" of its rapidly changing environment.
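One common way to formalize "fusing all sources" is to weight each sensor’s estimate by how far it can be trusted. The sketch below is illustrative, not Waymo’s method. It assumes each sensor reports an independent, unbiased distance estimate with a known noise variance (inverse-variance weighting), and that per-sensor "is this a pedestrian?" probabilities are conditionally independent, so they can be combined in log-odds form. The class, function names, and sensor values are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class RangeEstimate:
    sensor: str
    distance_m: float   # estimated distance to the object
    variance_m2: float  # noise variance; smaller means more trusted


def fuse_ranges(estimates: list[RangeEstimate]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent distance estimates.

    Returns (fused_distance_m, fused_variance_m2). The fused variance is never
    larger than the best single sensor's, which is the payoff of fusion.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / e.variance_m2 for e in estimates]
    total = sum(weights)
    fused = sum(w * e.distance_m for w, e in zip(weights, estimates)) / total
    return fused, 1.0 / total


def fuse_pedestrian_probabilities(probs: list[float], prior: float = 0.5) -> float:
    """Combine per-sensor P(pedestrian) values, assuming conditionally independent sensors.

    Each sensor contributes its evidence as a log-odds shift relative to the prior.
    """
    def logit(p: float) -> float:
        return math.log(p / (1.0 - p))

    total = logit(prior) + sum(logit(p) - logit(prior) for p in probs)
    return 1.0 / (1.0 + math.exp(-total))


if __name__ == "__main__":
    readings = [
        RangeEstimate("lidar", 12.0, 0.01),
        RangeEstimate("radar", 12.4, 0.25),
        RangeEstimate("camera", 11.5, 1.0),
    ]
    distance, variance = fuse_ranges(readings)
    print(f"fused distance {distance:.2f} m (variance {variance:.4f} m^2)")
    print(f"P(pedestrian) = {fuse_pedestrian_probabilities([0.7, 0.6, 0.9]):.3f}")
```

Note how three moderately confident sensors combine into a much more confident joint estimate; the independence assumption is what makes that legitimate, and real stacks must account for correlated errors.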

Predictive Behavior Modeling: Reading the Unpredictable

Recognizing a pedestrian is just the first step. The real feat is predicting what they’ll do next. Modern autonomous vehicles use advanced machine learning, specifically deep neural networks, for behavior prediction. These algorithms are trained on vast urban video datasets, learning the patterns in pedestrian movement:

- Gaze direction: Is a pedestrian looking at the car, or immersed in their phone? A study by MIT’s CSAIL found gaze prediction cuts accident risk significantly, and systems like Mobileye’s harness this feature.

- Body posture and intent: Are someone’s shoulders angled for a dash, or are they stepping back? Human drivers rely on gut instinct, shaped by experience; AVs approximate it with statistical models trained on millions of city scenarios.

- Trajectory tracking: The car not only sees where a person is but recalculates, many times per second, where they are likely to head next.
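The simplest baseline for trajectory tracking is constant-velocity extrapolation: assume the pedestrian keeps their current velocity and check whether that path enters the vehicle’s lane. Production systems use learned, multi-hypothesis predictors instead; this hypothetical sketch only illustrates the idea. It assumes positions and velocities are expressed relative to the car (x ahead, y to the left) and treats the lane as a 3 m wide corridor.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class PedestrianTrack:
    x: float   # metres ahead of the car
    y: float   # metres left of the car's centreline (negative = right)
    vx: float  # relative velocity along x, m/s (includes the car's own motion)
    vy: float  # relative velocity along y, m/s


def predict_position(track: PedestrianTrack, t: float) -> tuple[float, float]:
    """Constant-velocity extrapolation t seconds into the future."""
    return track.x + track.vx * t, track.y + track.vy * t


def time_to_enter_lane(track: PedestrianTrack, half_width_m: float = 1.5,
                       horizon_s: float = 3.0, dt: float = 0.1) -> Optional[float]:
    """First predicted time (s) at which the pedestrian is ahead of the car and inside its lane.

    Returns None if that does not happen within the planning horizon.
    """
    steps = int(round(horizon_s / dt))
    for i in range(steps + 1):
        t = i * dt
        x, y = predict_position(track, t)
        if x > 0.0 and abs(y) <= half_width_m:
            return t
    return None


if __name__ == "__main__":
    # Pedestrian 20 m ahead on the left kerb, stepping toward the lane at 1.5 m/s
    # while the car closes at 10 m/s.
    stepping_out = PedestrianTrack(x=20.0, y=4.0, vx=-10.0, vy=-1.5)
    print(time_to_enter_lane(stepping_out))
```

Re-running this check on every new sensor frame is what turns a static detection into a continuously updated forecast.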

Real-world example: In San Francisco, Cruise’s AVs benefit from a predictive engine that continuously updates path probabilities as more sensor data arrives. If a distracted jogger appears likely to run into the road, the car can preemptively reduce speed or change lanes well before a collision could occur.
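Updating path probabilities as more sensor data arrives is, at its core, repeated application of Bayes’ rule. The sketch below is not Cruise’s engine. It is a hypothetical illustration in which each observed cue (the cue names and likelihood values are invented for the example) raises or lowers the probability that a pedestrian intends to cross, and the car slows once that probability passes an assumed threshold.

```python
from __future__ import annotations

# Hypothetical likelihoods: (P(cue | will cross), P(cue | will not cross)).
CUE_LIKELIHOODS = {
    "facing_road": (0.8, 0.3),
    "accelerating_toward_curb": (0.7, 0.1),
    "looking_at_phone": (0.5, 0.5),  # uninformative about intent on its own
}


def bayes_update(prior: float, cue: str) -> float:
    """Posterior P(will cross) after observing one cue."""
    p_cue_if_cross, p_cue_if_not = CUE_LIKELIHOODS[cue]
    numerator = p_cue_if_cross * prior
    return numerator / (numerator + p_cue_if_not * (1.0 - prior))


def crossing_probability(cues: list[str], prior: float = 0.1) -> float:
    """Fold a sequence of observed cues into a running crossing probability."""
    p = prior
    for cue in cues:
        p = bayes_update(p, cue)
    return p


def speed_decision(p_cross: float, slow_threshold: float = 0.5) -> str:
    """Slow down preemptively once crossing looks more likely than not."""
    return "slow_down" if p_cross >= slow_threshold else "maintain_speed"


if __name__ == "__main__":
    observed = ["looking_at_phone", "facing_road", "accelerating_toward_curb"]
    p = crossing_probability(observed)
    print(f"P(cross) = {p:.2f} -> {speed_decision(p)}")
```

A single weak cue barely moves the estimate; it is the accumulation of consistent cues that tips the car into slowing down, which mirrors the "update as more data arrives" behavior described above.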

Real-Time Decision Making: Millisecond Reactions That Save Lives

Situations change quickly on city streets, and a split-second delay can be catastrophic. Self-driving cars must turn predictions into actions within fractions of a second. Here’s how the technology delivers.

- Redundancy is key: If one sensor fails or contradicts another (imagine a glare-blinded camera), the safety system defers to radar or lidar to confirm pedestrian presence.

- Emergency protocols: Enhanced automatic emergency braking (AEB) activates not only for other vehicles but also for pedestrians. AVs are now programmed to anticipate that someone may step off a curb, even mid-block, and to apply the brakes when the estimated probability exceeds a set threshold.

- Evasive maneuvers: Split-second decisions aren’t just about braking. Sometimes swerving or adjusting the trajectory is safer, provided it doesn’t endanger others.
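Redundancy and threshold-based emergency braking can be sketched as two small rules. In this hypothetical version, a degraded sensor (say, a glare-blinded camera) reports None and is left out of the vote, ties among the remaining sensors resolve toward "pedestrian present," and the brake decision combines an assumed probability threshold with time-to-collision. The function names and thresholds are illustrative, not values from any production system.

```python
from __future__ import annotations

from typing import Optional


def pedestrian_present(readings: dict[str, Optional[bool]]) -> bool:
    """Conservative vote over healthy sensors.

    None marks a degraded sensor, which is ignored. A tie, or having no healthy
    sensors at all, resolves to True, because missing a pedestrian costs far
    more than an unnecessary slowdown.
    """
    healthy = [r for r in readings.values() if r is not None]
    if not healthy:
        return True
    votes_for = sum(healthy)
    return 2 * votes_for >= len(healthy)


def should_emergency_brake(p_in_path: float, distance_m: float, closing_speed_mps: float,
                           p_threshold: float = 0.3, ttc_threshold_s: float = 2.0) -> bool:
    """Brake when a pedestrian is plausibly in the path and time-to-collision is short.

    Both thresholds are hypothetical defaults chosen for illustration.
    """
    if closing_speed_mps <= 0.0:
        return False  # the gap is not shrinking
    time_to_collision_s = distance_m / closing_speed_mps
    return p_in_path >= p_threshold and time_to_collision_s <= ttc_threshold_s


if __name__ == "__main__":
    glare = {"camera": None, "lidar": True, "radar": False}
    print(pedestrian_present(glare))
    print(should_emergency_brake(p_in_path=0.6, distance_m=15.0, closing_speed_mps=10.0))
```

Pairing a deliberately low probability threshold with a short time-to-collision window is one way to express the asymmetry: react readily to a plausible pedestrian, but only brake hard when contact is actually imminent.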

In late 2023, a Zoox vehicle in Las Vegas averted disaster when a skateboarder suddenly rolled off the sidewalk. Detecting an unusual, rapidly approaching shape, the system braked sharply and then came to a full stop while it recalculated safe buffer zones. The entire sequence unfolded faster than a human driver’s typical reaction time.

Handling the Unexpected: From Balloons to Costumed Characters

City streets are never boring. Parades, street fairs, or mere quirks introduce anomalies: mascots, stilt walkers, floating balloons, or pets in costumes. How do AVs distinguish true threats from harmless eccentricities?

- Extensive databases: AI models are trained on a veritable zoo of scenarios—videos and images of not only people, but unusual costumes and animals. NVIDIA’s research, for instance, fed its Drive platform with data from over fifty countries to account for cultural and seasonal oddities.

- Object classification layers: Neural networks first decide "is this a moving object?" Next: "is it on a collision course?" Third: "does it have behaviors typical of a pedestrian?" Until certainty is reached, the vehicle treats any ambiguity cautiously.

- Continuous updates: AV companies use fleet networks to learn from rare events. If a vehicle encounters a Santa Claus in June, its data gets uploaded and analyzed—enriching the company’s overall model.

Take the annual New York Halloween parade. Waymo cars, tested with hundreds of abnormal pedestrian profiles, successfully navigated zombie parades without confusing them for animals or discarding them as false alarms. These sophisticated classifications prevent both overreaction (slamming brakes for harmless inflatable art) and underreaction (missing a real person disguised in a novel way).
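The three-question cascade described in this section can be written as a small decision function. This is a toy, hypothetical version: the classifier outputs are assumed to arrive as a "moving" flag, an "on a collision course" flag, and a pedestrian confidence score, and the confidence cut-offs are invented. Its point is the shape of the logic: confident pedestrians get a yield, confidently harmless objects (an inflatable drifting across the road) avoid a panic stop, and everything in between gets caution.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    MONITOR = "monitor"                    # keep tracking, no change in driving
    YIELD = "yield"                        # treat as a person: slow and give way
    PROCEED = "proceed"                    # confidently not a person: no hard braking
    SLOW_AND_OBSERVE = "slow_and_observe"  # ambiguous: be cautious until certain


@dataclass
class Detection:
    is_moving: bool
    on_collision_course: bool
    pedestrian_score: float  # classifier confidence that this is a person, 0..1


def respond(det: Detection, confident: float = 0.8, dismiss: float = 0.05) -> Response:
    # Question 1: is this a moving object? (Static obstacles are left to the
    # path planner in this sketch.)
    if not det.is_moving:
        return Response.MONITOR
    # Question 2: is it on a collision course?
    if not det.on_collision_course:
        return Response.MONITOR
    # Question 3: does it behave like a pedestrian?
    if det.pedestrian_score >= confident:
        return Response.YIELD
    if det.pedestrian_score <= dismiss:
        # Ordinary obstacle avoidance still applies; this only rules out
        # pedestrian-style emergency braking.
        return Response.PROCEED
    return Response.SLOW_AND_OBSERVE


if __name__ == "__main__":
    # A costumed parade walker the model is unsure about.
    print(respond(Detection(is_moving=True, on_collision_course=True, pedestrian_score=0.45)))
```

The wide middle band between the two cut-offs is where costumes and other novel appearances land, and defaulting that band to caution is what guards against underreaction.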

Adapting to Urban Patterns: Local Intelligence at Play

City streets aren’t all built the same. Self-driving cars face unique spatial puzzles: faded crosswalks in Boston, treacherous five-way intersections in Los Angeles, or unmarked school zones in Tokyo. Local intelligence is crucial for anticipating non-standard pedestrian behaviors.

- Crowdsourcing maps: Detailed high-definition maps, continually updated with data from vehicle fleets, catalogue local quirks, such as the sidewalk gap that encourages jaywalking or the bus stop where commuters routinely dash across lanes.

- Temporal behavior mapping: Systems anticipate patterns tied to the time of day or season, such as children flooding a crossing at school release time or a surge of tourists near stadiums.

- Dynamic route planning: Vehicles consider pedestrian density when calculating the safest and smoothest route.
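Dynamic route planning with pedestrian density, combined with time-of-day patterns, can be sketched as a shortest-path search whose edge costs grow with expected foot traffic. Everything here is hypothetical: the four-node road graph, the density figures, the 3 p.m. school-release peak, and the penalty weight `alpha`. The search itself is standard Dijkstra built on Python’s `heapq`.

```python
from __future__ import annotations

import heapq

# Hypothetical road graph: node -> [(neighbour, travel_time_s, segment_name)].
ROAD_GRAPH = {
    "A": [("B", 60.0, "school_street"), ("C", 80.0, "arterial_north")],
    "B": [("D", 40.0, "school_gate")],
    "C": [("D", 50.0, "arterial_south")],
    "D": [],
}

# Hypothetical baseline pedestrian density per segment (people per 100 m).
BASE_DENSITY = {"school_street": 2.0, "school_gate": 2.0,
                "arterial_north": 0.5, "arterial_south": 0.5}


def expected_density(segment: str, hour: int) -> float:
    """Temporal behaviour map: school segments spike at the assumed 15:00 release."""
    density = BASE_DENSITY[segment]
    if segment.startswith("school") and hour == 15:
        density *= 10.0
    return density


def safest_route(start: str, goal: str, hour: int, alpha: float = 0.2) -> tuple[float, list[str]]:
    """Dijkstra search with cost = travel_time * (1 + alpha * pedestrian_density)."""
    frontier: list[tuple[float, str, list[str]]] = [(0.0, start, [start])]
    best = {start: 0.0}
    while frontier:
        cost, node, path = heapq.heappop(frontier)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, travel_s, segment in ROAD_GRAPH[node]:
            new_cost = cost + travel_s * (1.0 + alpha * expected_density(segment, hour))
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                heapq.heappush(frontier, (new_cost, neighbour, path + [neighbour]))
    return float("inf"), []


if __name__ == "__main__":
    print(safest_route("A", "D", hour=10))  # quieter hour: shorter school route wins
    print(safest_route("A", "D", hour=15))  # school release: detour via the arterial
```

Because density enters the cost as a multiplier on travel time, the planner only detours when the pedestrian penalty outweighs the extra driving, rather than avoiding busy streets unconditionally.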

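Example in practice: Tesla’s "shadow mode" runs driving software silently in the background across its fleet and flags moments where the software’s choices would have differed from what actually happened. If a dozen cars encounter problematic pedestrian flows in a market district, those flagged cases can feed into software updates that improve pedestrian prediction for every Tesla, including those in that area.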
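Tesla has not published the internals of shadow mode, so the sketch below is not its pipeline. It only illustrates the general fleet-learning idea in this paragraph: bucket event reports from many vehicles into map tiles and flag tiles where several distinct vehicles independently reported trouble. The event format, the `"unexpected_crossing"` label, the 0.001-degree tile size, and the three-vehicle threshold are all assumptions.

```python
from __future__ import annotations

import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FleetEvent:
    vehicle_id: str
    lat: float
    lon: float
    kind: str  # hypothetical labels, e.g. "unexpected_crossing" or "hard_brake"


def tile_of(lat: float, lon: float, size_deg: float = 0.001) -> tuple[int, int]:
    """Bucket a coordinate into a grid cell (0.001 deg of latitude is about 111 m)."""
    return math.floor(lat / size_deg), math.floor(lon / size_deg)


def pedestrian_hotspots(events: list[FleetEvent], min_vehicles: int = 3) -> set[tuple[int, int]]:
    """Tiles where at least `min_vehicles` distinct vehicles reported an unexpected crossing.

    Counting distinct vehicles, rather than raw reports, stops one car that passes
    the same spot repeatedly from creating a hotspot on its own.
    """
    reporters: defaultdict[tuple[int, int], set[str]] = defaultdict(set)
    for event in events:
        if event.kind == "unexpected_crossing":
            reporters[tile_of(event.lat, event.lon)].add(event.vehicle_id)
    return {tile for tile, ids in reporters.items() if len(ids) >= min_vehicles}


if __name__ == "__main__":
    reports = [
        FleetEvent("car-1", 37.7749, -122.4194, "unexpected_crossing"),
        FleetEvent("car-2", 37.7745, -122.4191, "unexpected_crossing"),
        FleetEvent("car-3", 37.7742, -122.4198, "unexpected_crossing"),
    ]
    print(pedestrian_hotspots(reports))
```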

Ethical and Social Dimensions: Pedestrian Trust and City Life

Self-driving cars do not just encounter technical challenges—they must win the trust of pedestrians. People change their behavior depending on their perceptions. This introduces new urban dynamics:

- Communication cues: Some AVs are experimenting with external displays, lights, or even sounds to signal intent to pedestrians. For example, Ford has tested a light display at the top of the windshield that signals intentions such as “I will yield to you.”

- Ethical decision-making frameworks: Engineers face difficult programming choices. Should a car prioritize passenger safety over that of nearby pedestrians in a forced trade-off? Several high-profile studies, such as the MIT Moral Machine project, have surveyed public attitudes toward these choices.

- Impact on urban design: Cities are beginning to factor AVs into infrastructure upgrades, rethinking crosswalks, pedestrian priority zones, and signage to complement autonomous technology.

In London, trial AVs took part in the city’s “smart street” pilots. Pedestrian crossings were fitted with IoT-connected beacons that sent real-time movement and density data to passing vehicles, further improving reaction times and overall safety.
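A vehicle consuming crossing-beacon data might turn each report into a speed cap for its approach. The sketch below is hypothetical: the JSON field names, the class and function names, and the speed values are invented for illustration, and real deployments would use a standardized V2X message format rather than ad hoc JSON.

```python
import json
from dataclasses import dataclass


@dataclass
class CrossingReport:
    crossing_id: str
    waiting: int   # pedestrians detected at the kerb
    crossing: int  # pedestrians detected on the crossing


def parse_beacon(payload: str) -> CrossingReport:
    """Parse a hypothetical beacon payload such as
    {"crossing_id": "X12", "waiting": 3, "crossing": 0}."""
    data = json.loads(payload)
    return CrossingReport(str(data["crossing_id"]), int(data["waiting"]), int(data["crossing"]))


def approach_speed_cap_kph(report: CrossingReport, default_kph: float = 30.0,
                           caution_kph: float = 15.0) -> float:
    """Speed cap for approaching the crossing, tightened as beacon data shows people."""
    if report.crossing > 0:
        return 0.0  # someone is on the crossing: plan to stop before it
    if report.waiting > 0:
        return min(default_kph, caution_kph)  # people at the kerb: arrive ready to yield
    return default_kph


if __name__ == "__main__":
    report = parse_beacon('{"crossing_id": "X12", "waiting": 3, "crossing": 0}')
    print(report, approach_speed_cap_kph(report))
```

The benefit of the beacon is timing: the car can lower its approach speed before its own sensors have a clear line of sight to the people at the crossing.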

The Learning Never Stops: Continuous Improvement Through Data

Self-driving cars thrive on data-driven improvement. Each ride generates a wealth of information, enabling refinements day after day.

- Over-the-air (OTA) updates: Companies deploy improvements like bug fixes, upgraded models, and new city-specific behaviors straight to cars after analyzing trip data.

- Simulation environments: Simulators such as DeepDrive or CARLA can generate virtually unlimited variations of urban scenarios, introducing new forms of pedestrian mischief, crowd dynamics, or weather effects, so virtual cars can practice thousands of rare encounters.

- Transparency and feedback loops: Companies like Waymo and Cruise publish safety reports explaining how their vehicles respond to outlier pedestrian incidents, fostering public trust and supporting regulatory oversight.
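Simulators like CARLA render full 3D scenes, but the underlying idea of randomized scenario testing fits in a few lines. This sketch does not use any simulator API. It samples hypothetical "pedestrian steps out" scenarios (car speed and the distance at which the person appears, drawn from assumed ranges), applies the standard reaction-plus-braking stopping-distance model with an assumed 7 m/s² deceleration, and estimates how often a given reaction latency fails to stop in time. The function names and the two latencies compared are assumptions.

```python
import random


def stopping_distance_m(speed_mps: float, latency_s: float, decel_mps2: float = 7.0) -> float:
    """Distance covered during the reaction latency plus braking to a stop."""
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * decel_mps2)


def failure_rate(latency_s: float, trials: int = 10_000, seed: int = 0) -> float:
    """Fraction of randomized dart-out scenarios in which the car cannot stop in time.

    A fixed seed makes runs repeatable, so different latencies are compared on
    exactly the same sampled scenarios.
    """
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        speed = rng.uniform(8.0, 14.0)     # roughly 30-50 km/h, in m/s
        distance = rng.uniform(8.0, 40.0)  # metres ahead when the pedestrian appears
        if stopping_distance_m(speed, latency_s) > distance:
            failures += 1
    return failures / trials


if __name__ == "__main__":
    for latency in (0.2, 1.5):  # assumed automated vs. typical human reaction times
        print(f"latency {latency:.1f} s: failure rate {failure_rate(latency):.1%}")
```

Because both latencies see identical scenarios, the gap between the two rates isolates the effect of latency; a real test program would sweep many more variables (weather, pedestrian type, occlusion) in the same way.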

An example: In 2022, after repeated near-misses with e-scooter riders in Paris, several AV operators updated their models within weeks, enhancing scooter-detection and reaction protocols across their entire global AV fleets. Such rapid adaptation was unthinkable a decade ago.

Practical Tips for Urban Pedestrians in a Self-Driving Future

As AVs become a fixture on city roads, there’s a new etiquette for safe coexistence. Pedestrians can take steps to ensure they’re seen and safe:

- Maintain eye contact and signal intentions where feasible. Machines now read gaze and body language, but overt gestures make detection more robust.

- Use crosswalks deliberately, especially where AVs are being tested—crossing mid-block invites uncertainty for both cars and people.

- Avoid sudden or erratic actions near the street’s edge—AVs excel with predictable movement but may misclassify abrupt changes as emergencies.

- Report strange encounters wherever possible. Cities and AV companies offer hotlines and feedback apps to help improve performance and flag emerging hazards.

Local governments can contribute by updating signage, encouraging AV awareness campaigns, and working with private partners to integrate smart city solutions that mutually benefit all road users.

The quest to safely navigate the unpredictability of urban pedestrians is relentlessly pushing self-driving car technology forward. What once seemed a city commuter’s headache now fuels advanced perception, ethical innovation, and new urban design thinking. As cities and autonomous vehicles move together into the future, the lesson is clear: where pedestrians lead, technology—and society—must follow with care, intelligence, and respect.
