The Ethics of Future Warfare

Explore the complex ethics of future warfare shaped by cutting-edge military technology and autonomous weapons.

The Ethics of Future Warfare: Navigating the Moral Terrain of Military Technology

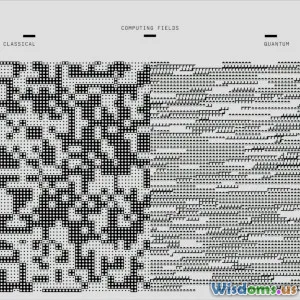

War has always pushed the boundaries of technology, but as we approach an era dominated by artificial intelligence, autonomous weapons, and cyber warfare, ethical questions about the nature and conduct of future conflicts become more pressing than ever. The fusion of cutting-edge technology with military power challenges traditional norms of accountability, human control, and the very definition of combat.

Introduction: Entering the Age of Ethical Complexity

Imagine a battlefield where drones fly, select targets, and fire without human intervention, or where algorithms decide who lives and who dies within milliseconds. This isn't science fiction: military technology is rapidly evolving toward greater autonomy and lethality. These advances promise tactical advantages and fewer casualties among human soldiers, yet they also provoke a crucial ethical debate: should machines wield the power of war?

P. W. Singer, a strategist and the author of Wired for War, warns that autonomous weapons could "change the face of war, making it more efficient but also potentially more terrifying." The warning raises fundamental questions about morality, law, and humanity’s role in warfare. This article explores these challenges and maps the ethical landscape shaping the future of warfare.

The Rise of Autonomous Weapons: Benefits and Risks

What Are Autonomous Weapons?

Autonomous weapon systems (AWS) are weapon systems that, once activated, can select and engage targets without further human input. Many platforms cited in this debate sit somewhere along a spectrum of autonomy rather than at its fully autonomous end. The Turkish Bayraktar TB2 drone is remotely piloted but can fly autonomously. The U.S. Navy’s Sea Hunter is an experimental, unarmed unmanned surface vessel designed to track submarines for extended periods without a crew.

Tactical Advantages

Proponents argue that AWS can reduce soldier casualties by taking on high-risk missions. They also point out that such systems operate without fatigue and process battlefield data faster than humans can. For instance, AI-assisted surveillance drones play a central role in modern counterterrorism operations because they enable persistent monitoring without risking pilots’ lives.

Ethical Risks

However, relinquishing lethal decisions to machines introduces grave ethical concerns:

- Accountability: Who is responsible if an autonomous drone mistakenly kills civilians? Traditional war crimes frameworks depend on clear lines of responsibility.

- Discrimination and Proportionality: Can AI reliably distinguish combatants from non-combatants in complex battlefield conditions?

- Dehumanization: Removing human judgment risks desensitizing societies to warfare, potentially lowering the threshold for going to war.

Accountability in Future Conflicts

Holding actors accountable is a cornerstone of war ethics and international law. The introduction of autonomous systems blurs these lines:

- Operator Responsibility: When humans deploy AWS remotely, they act as decision-makers. Yet as autonomy increases, operator control diminishes, which complicates culpability.

- Programmers and Manufacturers: Could AI developers or manufacturers be held liable? Existing legal systems are reluctant to assign blame to algorithms or their creators.

- State Responsibility: States deploying AWS are ultimately accountable under international law. However, attribution may be difficult when systems malfunction.

United Nations discussions on Lethal Autonomous Weapons Systems (LAWS) have recognized the "responsibility gap" that the technology introduces. These talks include the 2020 sessions held under the Convention on Certain Conventional Weapons (CCW). Many experts call for legally binding treaties, akin to the bans on chemical and biological weapons, to prevent accountability vacuums.

Civilian Protection and Compliance with International Humanitarian Law

International Humanitarian Law (IHL) requires parties to protect civilians and to use force proportionately. How can future weapons comply with these principles?

- Discrimination Algorithms: Researchers are working to develop AI capable of high-fidelity target discrimination that integrates sensor and real-time data. Yet experts such as Stuart Russell argue that no known algorithm can consistently replicate human empathy or judgment in chaotic combat environments.

- Proportionality Calculations: AWS would need to judge, in real time, whether the expected incidental harm to civilians would be excessive relative to the anticipated military advantage. This is a profoundly complex task, and any miscalculation could result in an unlawful attack.

- Examples in Conflicts: The U.S. military's MQ-9 Reaper drone is remotely piloted but includes some automated functions. Its use has drawn persistent controversy over civilian casualties, underscoring the limits of current safeguards.

Consequently, many ethicists argue that fully autonomous engagement is premature without rigorous safeguards and that human oversight is imperative.

Psychological and Societal Implications

Beyond battlefield ethics, future warfare technologies influence public perception and societal values:

- War Perception: Autonomous weapons may make war seem less ‘costly’ to decision-makers, potentially increasing the temptation to choose force over diplomacy.

- Combatant Psychology: Soldiers who operate or confront autonomous systems experience distinct psychological stresses. These include problems of trust in the machine and moral injury associated with killing remotely.

- Global Arms Race: The pursuit of advanced military technology can upset geopolitical balances, fuel arms races, and undermine global security.

Cyber Warfare and Non-Kinetic Ethics

Future warfare isn't only physical; cyber operations blur ethical lines further:

- Cyberattacks that disable infrastructure without physical harm don’t fit neatly into traditional frameworks of war and morality.

- The attribution problem is acute in cyber warfare, which complicates responses and deterrence.

For example, the Stuxnet worm was discovered in 2010 and targeted Iran’s nuclear program. It caused physical destruction indirectly by manipulating the control systems of uranium-enrichment centrifuges. This showcased a highly targeted cyber weapon and raised questions about proportionality and collateral damage in the cyber domain.

Towards an Ethical Framework: Recommendations and Calls to Action

International Regulation and Treaties

Global cooperation is essential. Coalitions such as the Campaign to Stop Killer Robots advocate a preemptive ban on fully autonomous lethal weapons.

Human-in-the-Loop Systems

Maintaining meaningful human control over weapons deployment is often proposed as an ethical minimum to preserve moral judgment and accountability.

Transparency and Public Engagement

Governments and military bodies should disclose their autonomous weapons policies and engage the public worldwide in ethical debate.

Robust Testing and Ethical AI Development

AI systems require stringent testing and oversight, incorporating ethical principles during design to ensure compliance with IHL.

Conclusion: Balancing Innovation and Humanity

Military technology will inevitably advance, but the integration of AI and autonomy in warfare demands a reassessment of ethical boundaries. Balancing the pragmatism of enhanced defense capabilities with the preservation of human dignity, accountability, and civilian protection is one of the 21st century’s most critical challenges.

The stakes are high: the choices made today about whether, when, and how autonomous weapons are developed and deployed will shape global security and ethical norms for generations. Thoughtful governance, transparent dialogue, and international cooperation are vital to ensuring that technology serves humanity without sacrificing its moral compass.

“The question about ethical AI in warfare is not 'if' but 'how' we control it,” notes AI ethicist Wendell Wallach. The future of warfare will test not only technological ingenuity but humanity’s ability to wield power responsibly.
