Autonomous Weapons Ethics: Making Sense of Global Regulations

Explore the ethical challenges and international regulatory approaches surrounding autonomous weapons in modern warfare.

As advancements in artificial intelligence (AI) and military technology accelerate, autonomous weapons—systems operating without direct human control—are poised to reshape modern warfare. However, the promise of increased battlefield efficiency comes hand-in-hand with ethical dilemmas, legal uncertainties, and mounting international debate. How are nations, policymakers, and global bodies attempting to govern these powerful yet controversial tools? Understanding the whirlwind of perspectives, regulations, and challenges is key to navigating the evolving landscape of autonomous weapon ethics.

What Are Autonomous Weapons? Unpacking the Technology

Autonomous weapons, often called Lethal Autonomous Weapons Systems (LAWS), refer to machines capable of identifying, selecting, and attacking targets with minimal or no human intervention. Examples include armed drones, AI-powered missile defense systems, robotic tanks, and unmanned underwater vehicles.

Key Characteristics:

- Sensors and AI Algorithms: These technologies allow such weapons to detect and distinguish targets based on appearance, behavior, or location.

- Levels of Autonomy: Systems range from remotely operated (humans at the controls), through semi-autonomous (requiring human confirmation before lethal action), to fully autonomous (machines make lethal decisions themselves).
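The three levels of autonomy can be written down as a small classification. This is only an illustrative sketch: the names `AutonomyLevel` and `human_decides` are hypothetical and do not come from any standard or treaty text.

```python
from enum import Enum


class AutonomyLevel(Enum):
    """Three illustrative tiers, mirroring the levels described above."""
    REMOTELY_OPERATED = 1  # a human directly controls the system
    SEMI_AUTONOMOUS = 2    # the system proposes, a human confirms
    FULLY_AUTONOMOUS = 3   # the system decides on its own


def human_decides(level: AutonomyLevel) -> bool:
    """Return True when a human makes or confirms the lethal decision."""
    return level in (AutonomyLevel.REMOTELY_OPERATED,
                     AutonomyLevel.SEMI_AUTONOMOUS)
```

Under this simplified model, the regulatory debate centers on the one tier for which `human_decides` returns `False`.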

Real-World Examples

- Drone Swarms: In 2021, Turkish manufacturer STM reportedly deployed drone swarms in Syria capable of collaborative movement and target selection using AI.

- Harop Drones: Used by Azerbaijan in the 2020 Nagorno-Karabakh conflict, these loitering munitions can autonomously track and strike radar-emitting targets.

The underlying dilemma: how much decision-making should humanity delegate to machines, especially regarding life-and-death situations?

Ethical Flashpoints: Human Judgment vs. Machine Decision

Ethical debates about autonomous weapons often rest on two pillars: accountability and moral agency.

The Crisis of Accountability

How can one assign responsibility if an autonomous system erroneously strikes civilians? Traditional warfare relies on human judgment, command chains, and established accountability. By contrast, when a weapon's decision pathway is buried within complex AI algorithms, pinning responsibility becomes murky.

- Case in Point: Following a 2021 United Nations (UN) report describing a Turkish-made drone, reportedly operating autonomously, striking fighters in Libya, analysts struggled to determine liability, raising questions about war crimes and legal repercussions.

The Value of Human Override

Ethicists like Noel Sharkey, co-founder of the International Committee for Robot Arms Control (ICRAC), argue for the necessity of human control, especially in the “kill chain.” The rationale:

- Humans can weigh contextual factors, intent, and proportionality far better than algorithms.

- Moral agency, a distinctly human trait, is essential when life and death are at stake.

Survey data supports this concern. A 2019 Ipsos poll found that 61% of respondents across 26 countries opposed fully autonomous weapons, saying only humans should decide to take a life.

Global Regulatory Approaches: Patchwork or Progress?

Unlike nuclear, biological, or chemical weapons, there is no comprehensive international treaty governing autonomous weapons. Instead, regulation efforts are shaped by fragmented initiatives, voluntary guidelines, and divergent national interests.

Conventional Weapons Conventions

The UN Convention on Certain Conventional Weapons (CCW) hosts annual discussions on LAWS, but consensus remains elusive. Russia, the US, Israel, and China have all signaled reluctance to pursue binding bans, citing national security interests and an unwillingness to cede technological ground.

Regional and National Stances

- Europe: The European Parliament passed a 2018 resolution seeking a ban on LAWS—urging "meaningful human control" and moral oversight. However, implementation across member states remains inconsistent.

- US and China: Both are reluctant to agree to outright bans, emphasizing "responsible use." The US Department of Defense’s Directive 3000.09 requires that autonomous weapon systems allow commanders and operators to exercise appropriate levels of human judgment over the use of force, but ambiguities remain.

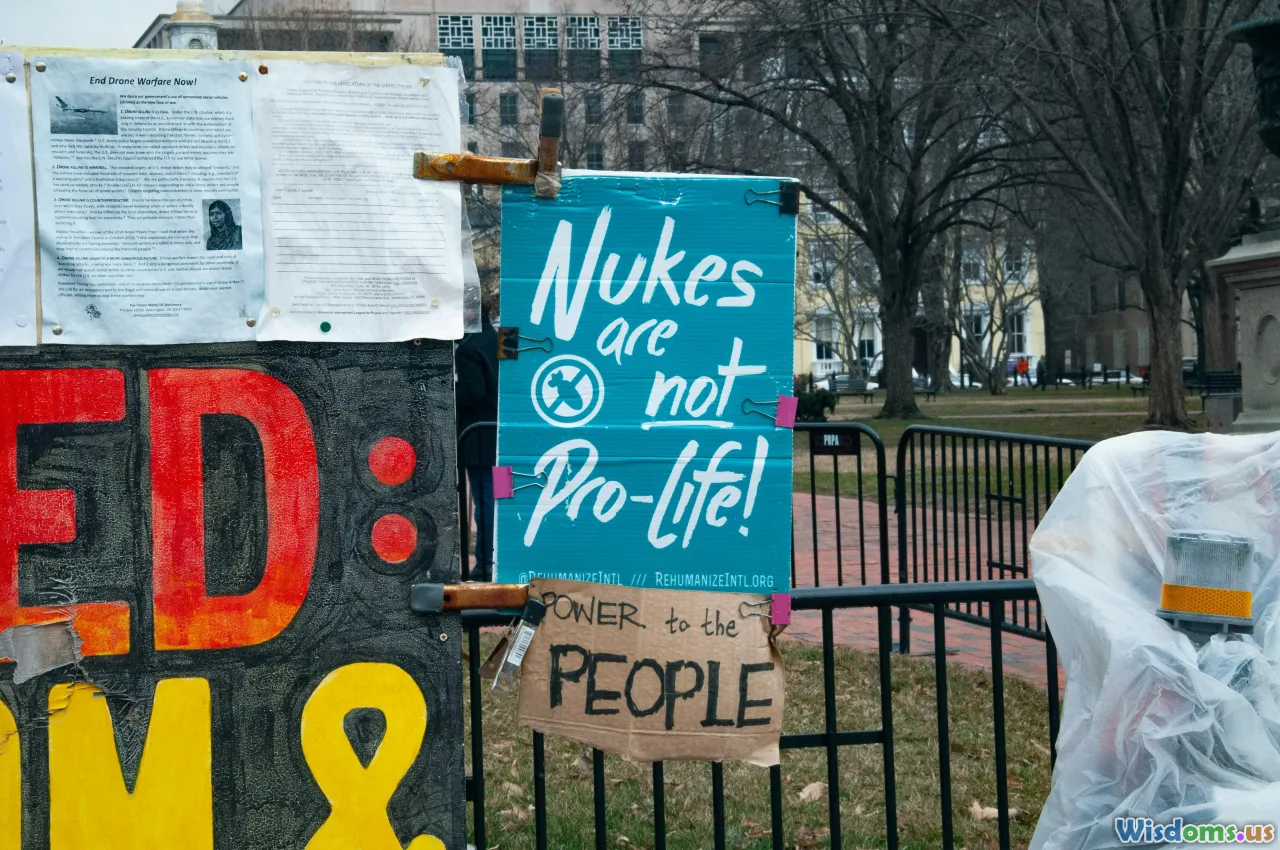

- Ban Advocates: 30 countries, mostly in Latin America and Africa, push for a legally binding treaty prohibiting LAWS, echoing civil society calls from groups like the Campaign to Stop Killer Robots.

The state of play: regulation is largely voluntary, with technical standards lagging behind proliferating deployment.

Legal Challenges: Can the Laws of War Keep Up?

Existing frameworks, primarily the Geneva Conventions and international humanitarian law (IHL), are meant to protect civilians, prohibit indiscriminate attacks, and require proportionality in armed conflict. Autonomous weapons complicate these rules.

Legal Void or Adaptable Precedent?

- Distinction: IHL requires combatants to distinguish between military targets and civilians. Can machines consistently make this moral and legal distinction, especially in densely populated areas?

- Proportionality and Precaution: Algorithms may not adequately grasp nuances: how much collateral damage is acceptable? Pre-set programming rarely matches real-world complexity.

- War Crimes Prosecution: If things go awry—suggesting a crime—who stands trial? The commander who ordered the deployment, the developer, the manufacturer, or the machine itself?

While some legal scholars argue IHL principles can extend to new technologies, the pace of technical innovation risks outstripping jurisprudence, leaving dangerous grey zones.

National Security Dilemmas: Deterrence, Arms Race, and Escalation Risks

Advocates claim autonomous weapons could reduce casualties for deploying states and increase deterrence against aggression. Yet, critics argue they encourage arms races, lower the threshold for conflict, and complicate escalation control.

The AI Arms Race

Global powers are pouring billions into AI-based military research. In 2023, the US allocated over $1.7 billion for AI-enabled military systems, while China’s PLA has added swarms of autonomous drones to its arsenal.

- Drone Swarms: Capable of overwhelming defenses or operating in contested spaces like the South China Sea, swarms add uncertainty and increase first-strike incentives.

- Risk of Accidental War: Autonomous systems may misinterpret signals or behave unpredictably; Russia’s Perimeter system, for instance, is reportedly designed to launch nuclear missiles automatically if it senses a “decapitation strike.”

Blurring Lines Between Peace and War

Unlike traditional weapons, autonomous systems can be rapidly reprogrammed, mass-produced, and anonymously deployed, making attribution and escalation control harder.

Human Oversight: Meaningful or Marginal?

“Meaningful human control” is a term gaining currency among experts and diplomats. But what does it really mean, and how can it be assured?

Actionable Steps for Retaining Oversight

- Human-in-the-Loop: Ensure humans make final go/no-go decisions, especially for life-or-death actions.

- Training and Certification: Standardize training curricula for operators, emphasizing legal, ethical, and technical literacy.

- Transparent Design: Incentivize explainable AI, allowing for audits and reconstructing decision processes post-operation.

- Robust Fail-safes: Design systems to allow rapid remote shutdowns or overrides if they behave erratically.
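The steps above can be illustrated in software terms as an approval gate. What follows is a minimal sketch under stated assumptions, not a description of any fielded system: `OversightGate`, `AuditRecord`, and the `approver` callback are hypothetical names introduced here. The sketch shows three ideas together: a human makes the final go/no-go decision, every decision is logged for later audit, and an override refuses all further requests.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List


@dataclass
class AuditRecord:
    """One entry in the post-operation audit trail (hypothetical format)."""
    timestamp: str
    request_id: str
    outcome: str
    reason: str


@dataclass
class OversightGate:
    """Hypothetical gate: nothing proceeds without explicit human approval."""
    approver: Callable[[str], bool]  # stands in for the human decision
    halted: bool = False
    audit_log: List[AuditRecord] = field(default_factory=list)

    def halt(self) -> None:
        """Fail-safe override: every later request is refused."""
        self.halted = True

    def review(self, request_id: str) -> bool:
        """Return True only if the gate is live and a human approved."""
        if self.halted:
            return self._record(request_id, "refused", "system halted by override")
        try:
            approved = bool(self.approver(request_id))
        except Exception:
            # Fail closed: no valid human decision means no action.
            return self._record(request_id, "refused", "no valid human decision")
        if approved:
            return self._record(request_id, "approved", "human approved")
        return self._record(request_id, "refused", "human declined")

    def _record(self, request_id: str, outcome: str, reason: str) -> bool:
        """Append an audit entry and report whether the request was approved."""
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append(AuditRecord(stamp, request_id, outcome, reason))
        return outcome == "approved"
```

The design choice worth noting is that the gate fails closed: a missing or failed human decision is treated as a refusal, and the audit log captures refusals as well as approvals so that decisions can be reconstructed afterward.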

✅ Best Practice: The UK’s Ministry of Defence requires a “kill switch” in all autonomous weapon prototypes, aligning system design with legal and moral expectations.

However, ensuring oversight is not just technical—operational stress, cyber threats, and machine unpredictability can erode even well-designed controls.

Civil Society and Advocacy: Shaping the Norms

While governments and militaries wrestle with policy, non-governmental groups and technologists play a pivotal role in highlighting risks and proposing solutions.

The Campaign to Stop Killer Robots

Since 2012, this coalition has:

- Successfully brought the issue to the UN agenda

- Developed model policy frameworks for national legislators

- Partnered with prominent AI researchers, gaining endorsements from Elon Musk, Stephen Hawking, and hundreds of academics

Shifting the Conversation

- Public Engagement: Documentaries, classroom curricula, and exhibitions spark informed debate, shifting public opinion toward precautionary regulation.

- Industry Responsibility: In 2018, Google’s staff protested "Project Maven," prompting the company not to renew the contract and to publish AI principles ruling out work on AI for weapons.

Civil society, far from relegated to the sidelines, consistently shapes regulatory discourse by holding developers and decision-makers to elevated standards.

Innovation vs. Ethics: The Dual-Use Technology Puzzle

Military agencies are not the only ones building advanced AI. Almost every mathematical breakthrough or machine learning model has "dual-use" potential—serving both civilian and military aims.

Examples in Practice

- Facial Recognition: Once developed for social media, now used for targeting or surveillance.

- Reinforcement Learning Algorithms: Techniques used to train agents to compete in video games can carry over to coordinating drone swarms.

This blurry line creates real-world controversies:

- Should research labs ban or gatekeep data sets and code that could be weaponized?

- Are tech giants responsible for downstream uses of their general-purpose AI?

Policymakers and scientists must strike a balance: incentivizing innovation while preventing misuse—an ongoing conundrum with no easy answers.

Toward Future-Proof Governance: Policy Recommendations

How might the world move toward more robust, fair regulation for autonomous weapons? Leading think tanks and legal scholars converge on several actionable recommendations:

- Negotiate a Binding International Treaty: Similar to the CCW protocol banning blinding laser weapons, a treaty focusing on autonomy limits, human control, and accountability could set standards ahead of large-scale adoption.

- Update National Laws: Legislators should clarify laws around war crimes liability, AI explainability, and export controls for dual-use technologies.

- Strengthen Transparency: Regular reporting, cross-border inspections, and shared best practices can build trust and reduce arms race dynamics.

- Invest in AI Ethics Research: Support interdisciplinary labs studying the social, psychological, and legal implications of algorithmic battlefield decisions.

Notable Progress

The International Committee of the Red Cross (ICRC) and Stimson Center propose enforcement of “predictability and reliability” standards in all deployed weapon systems. The EU has increased funding for “AI Ethics by Design” projects, aiming to integrate human-centered values from the earliest design stages.

Rethinking Warfare and Responsibility

Autonomous weapons represent both the technological frontier and the ethical minefield of modern warfare. While machines can increase speed, precision, and endurance, they simultaneously stretch the limits of human moral reasoning, legal responsibility, and strategic stability. The fact that so many stakeholders cannot agree reflects the enormity—and urgency—of the issue.

As societies confront the accelerating pace of AI-augmented conflict, one truth stands out: technology may transform battlefields, but it should never override the conscience that underpins human society. Ensuring that future regulation reflects clarity, accountability, and a respect for human dignity is not merely desirable; it’s imperative for the decades ahead.
