Defensive Coding Tips to Prevent Buffer Overflow Vulnerabilities

Proven defensive coding techniques to mitigate buffer overflow vulnerabilities in software development.

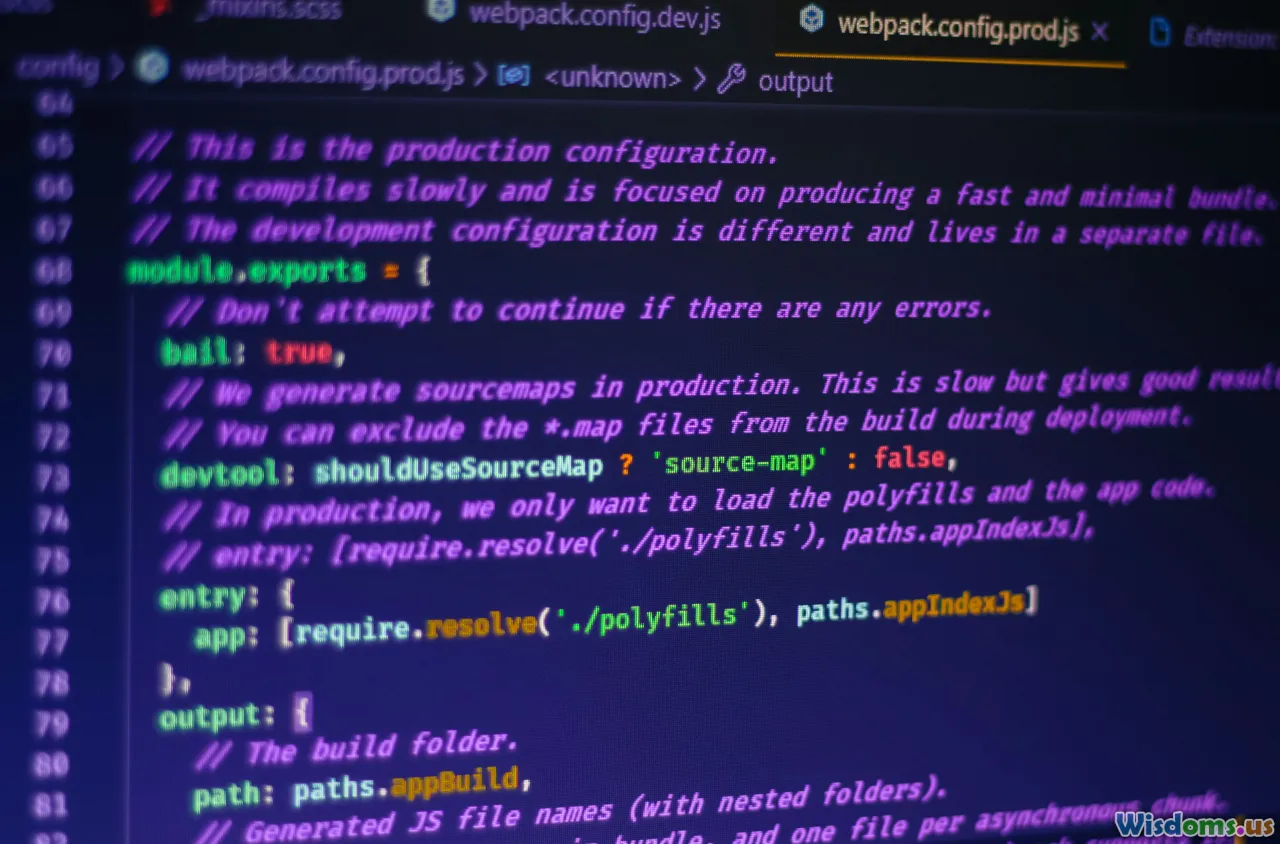

In the complex world of software development, even a single careless coding decision can have devastating consequences. Few programming flaws have wreaked as much havoc as buffer overflows—a class of vulnerabilities responsible for countless security breaches, privilege escalations, and system crashes over the decades. They lurk most often in native code written in languages like C and C++, but threats exist in many contexts. This article serves as a robust guide for developers who want to prevent buffer overflows using disciplined, defensive coding practices.

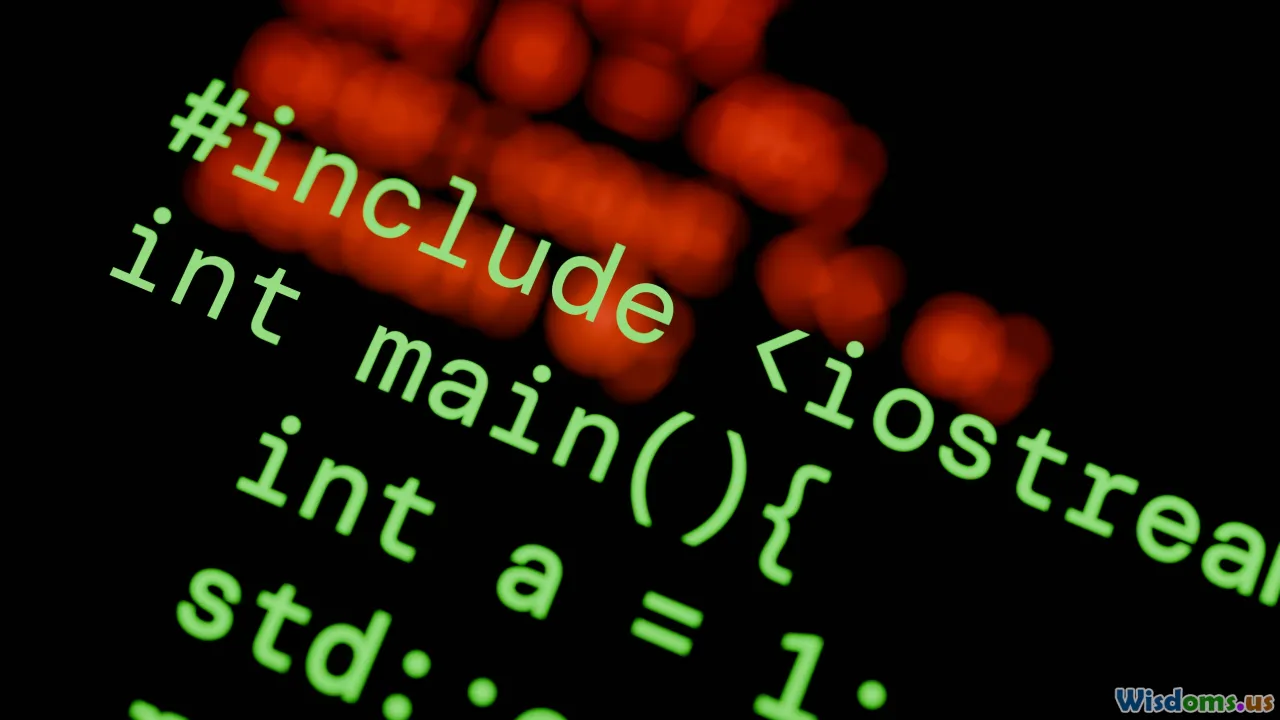

Know Your Enemy: What Are Buffer Overflows?

At its core, a buffer overflow occurs when software writes more data to a memory buffer than the buffer was designed to hold. In many programming environments, especially those without automatic bounds checking, such overflows can corrupt adjacent memory, alter the execution path, or give attackers a foothold for code injection. High-profile worms such as Code Red and Slammer, along with many Windows vulnerabilities, trace back to simple buffer-management mistakes.

A Classic Example

    void unsafe_function(char *str) {
        char buffer[16];
        strcpy(buffer, str); // Danger! No bounds checking
    }

Here, if str holds 16 or more characters, strcpy writes past the end of buffer (the terminating null byte needs a slot too), overwriting adjacent memory and leading to unpredictable (and possibly dangerous) behavior.

Understanding how buffer overflows manifest is the first layer of a strong defensive stance.

Choose Safe Programming Languages and Libraries

Not every language makes buffer overflows easy to trigger. When possible, favor languages with strong memory safety guarantees:

- Python, Java, Rust, Go: These modern languages provide automatic bounds checking or memory safety features.

- Rust deserves special mention for offering both performance and memory safety through its ownership and borrowing model. As of 2024, it's being increasingly adopted for security-critical codebases.

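- When working with C or C++, prefer bounded standard-library functions such as snprintf and, with care, strncpy (which does not always null-terminate its output), or the C11 Annex K bounds-checked extensions (strcpy_s, strncpy_s) on toolchains that provide them. Annex K is optional, and many C libraries, including glibc, do not implement it.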

Real-World Adoption

Mozilla’s rewrite of critical Firefox components in Rust has drastically reduced memory safety bugs. Similarly, Google's Chrome project is turning to "memory-safe" languages for new security-critical modules.

Validate All Inputs: Never Trust the Source

Unchecked user input is the main entry point for buffer overflows. Always:

- Validate input lengths and formats before copying or processing data.

- For network or file I/O, always use the explicit length of data received.

- Use regular expressions or state machines to enforce input structure, especially for protocol or file parsers.

Example: Safe Input Handling in C

    #define MAX_NAME_LEN 32

    char name[MAX_NAME_LEN];

    if (fgets(name, sizeof(name), stdin)) {
        name[strcspn(name, "\n")] = 0; // Strip newline
    }

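Here, fgets reads at most sizeof(name) - 1 characters and always null-terminates the result, so name cannot be overrun. Longer input is truncated, and the remainder stays in the input stream for the next read.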
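Because fgets truncates overlong input rather than reporting it, code that must reject oversized lines needs one extra check. The following is a minimal sketch of one way to do that; the helper name read_line and its return codes are assumptions made for illustration, not part of any standard API.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: reads one line from `in` into `buf` (capacity `cap`).
 * Returns 0 on success, -1 on EOF, read error, or invalid arguments, and
 * -2 if the line was too long, in which case the rest of it is discarded. */
int read_line(FILE *in, char *buf, size_t cap) {
    if (in == NULL || buf == NULL || cap < 2) {
        return -1;
    }
    if (fgets(buf, (int)cap, in) == NULL) {
        return -1;
    }
    size_t len = strlen(buf);
    if (len > 0 && buf[len - 1] == '\n') {
        buf[len - 1] = '\0';              /* complete line: strip the newline */
        return 0;
    }
    /* No newline was stored. Peek at the next character to tell apart
     * "line ended exactly here" from "line is longer than the buffer". */
    int c = fgetc(in);
    if (c == EOF || c == '\n') {
        return 0;
    }
    while ((c = fgetc(in)) != '\n' && c != EOF) {
        /* discard the remainder of the overlong line */
    }
    return -2;
}
```

A caller can then treat -2 as a validation failure instead of silently processing a truncated value.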

Automate the Checks

Automated static analysis tools (e.g., Coverity, CodeQL) catch input validation lapses early in the pipeline, reducing the window for human error.

Prefer Size-Limited Functions and Modern APIs

Classic C functions like strcpy, scanf, and gets are notorious for their lack of built-in bounds checking. Always replace them with their safer, size-bound variants:

- Use strncpy, strncat, and snprintf instead of strcpy, strcat, and sprintf.

- Prefer fgets over gets, which was removed from the C standard in C11.

- Where your toolchain supports the optional C11 Annex K, consider strcpy_s and strncpy_s.

Example: Safer String Copy

    char dest[20];

    strncpy(dest, src, sizeof(dest) - 1);
    dest[sizeof(dest) - 1] = '\0';

Here, strncpy never writes more than sizeof(dest) - 1 bytes, so the destination cannot overflow. The explicit null termination afterwards is required, not optional: when src is at least that long, strncpy leaves the destination unterminated.
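strncpy gives no indication that the source was cut short. When truncation should count as an error, snprintf is one alternative, since it always terminates the output and returns the length the full string would have needed. A minimal sketch, assuming a hypothetical helper named copy_string:

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper: copies src into dest (capacity dest_size).
 * Returns 0 if the whole string fit, -1 if it was truncated or the
 * arguments were invalid. */
int copy_string(char *dest, size_t dest_size, const char *src) {
    if (dest == NULL || src == NULL || dest_size == 0) {
        return -1;
    }
    /* snprintf writes at most dest_size bytes including the terminator and
     * returns the number of characters the untruncated output would have. */
    int needed = snprintf(dest, dest_size, "%s", src);
    if (needed < 0 || (size_t)needed >= dest_size) {
        return -1;   /* on truncation, dest holds a terminated prefix */
    }
    return 0;
}
```

Whether a truncated prefix is acceptable or must be rejected depends on the data; names and paths usually call for rejection.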

Enforce Strict Bounds-Checking Logic

Buffer overflows often result from off-by-one mistakes and inconsistent buffer size calculations. Adopt these strategies:

- Clearly define limits using #define or const values.

- Consistently use sizeof() and macros to calculate buffer sizes rather than magic numbers.

- Enforce bounds in loops, copy operations, and when managing arrays.

Preventing Off-By-One Errors

Consider the classic off-by-one bug:

for (i = 0; i <= MAX_LEN; ++i) { ... } // Wrong: should be < instead of <=

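This common error reads or writes one element past the end of the array, which is sometimes enough for an exploit. Compiler warnings (gcc -Wall -Wextra) flag some of these lapses but not all of them; runtime checkers such as AddressSanitizer (-fsanitize=address) help catch those that slip through during testing.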
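One way to keep loop bounds and buffer sizes in sync is to derive the bound from the array itself. The sketch below is illustrative only; ARRAY_LEN, table, and fill_table are names made up for the example, and the macro is valid only for true arrays, not for pointers.

```c
#include <stddef.h>

/* Element count of a true array. Do not apply this to a pointer or to an
 * array function parameter, which has already decayed to a pointer. */
#define ARRAY_LEN(a) (sizeof(a) / sizeof((a)[0]))

#define MAX_LEN 8

static int table[MAX_LEN];   /* valid indices: 0 .. MAX_LEN - 1 */

/* Sets every element of table to value; returns the number of elements written. */
size_t fill_table(int value) {
    size_t i;
    for (i = 0; i < ARRAY_LEN(table); ++i) {   /* '<', never '<=' */
        table[i] = value;
    }
    return i;
}
```

If MAX_LEN changes later, the loop bound follows automatically, with no second constant to forget.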

Leverage Compiler and OS Protections

Hardware and system-level security features are an additional layer of defense—even if you’ve written perfect code. Always enable available mitigations:

- Stack canaries (detect overwrites of the saved return address)

- Data Execution Prevention (DEP/NX) (prevents code execution from data regions)

- Address Space Layout Randomization (ASLR) (randomizes process memory layout)

Enabling Protections

On modern compilers:

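- Use -fstack-protector-strong with GCC/Clang.

- Enable -D_FORTIFY_SOURCE=2 when possible; it only takes effect when optimization (-O1 or higher) is enabled.

- Compile with -fPIE and link with -pie so the executable itself can be randomized by ASLR.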

Operating systems like Linux and Windows provide system-level support for these features, but your code must be compiled and linked accordingly to benefit from these defenses.

Audit and Test Rigorously

No defense is strong if it's untested. Defensive coders embed buffer overflow testing into their workflow at multiple stages:

- Code reviews: Regular peer reviews catch unsafe patterns early.

- Static analysis: Tools like Coverity, Clang Static Analyzer, and CodeQL scan for vulnerable code.

- Fuzzing: Automated tools (like AFL, libFuzzer) inject random or malformed data to stress test code paths.

- Penetration testing: Security experts simulate real attacks to verify the robustness of defenses.

Case Study: Heartbleed Bug

The infamous Heartbleed vulnerability in OpenSSL was essentially a missing bounds check in its implementation of the TLS heartbeat extension: the code trusted the payload length claimed in the request and read past the end of the data actually received, leaking adjacent memory. Fuzz testing and careful audits are well suited to catching this kind of missing size check. Today, major open-source projects such as Chromium and the Linux kernel rely on continuous fuzzing and peer review.
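The class of check involved can be sketched as follows. This is not OpenSSL's code: the message layout (one type byte, a two-byte big-endian length, then the payload) is a simplified assumption, and echo_payload is a hypothetical function name.

```c
#include <stddef.h>
#include <string.h>

#define HB_HEADER_LEN 3   /* 1 type byte + 2 length bytes (simplified layout) */

/* Copies the payload of a heartbeat-style message into out.
 * Returns the payload length, or -1 if the message is malformed
 * or the payload does not fit in out. */
long echo_payload(const unsigned char *msg, size_t msg_len,
                  unsigned char *out, size_t out_cap) {
    if (msg == NULL || out == NULL || msg_len < HB_HEADER_LEN) {
        return -1;
    }
    size_t claimed = ((size_t)msg[1] << 8) | (size_t)msg[2];

    /* The crucial check: the length claimed by the sender must not exceed
     * the number of payload bytes that were actually received. */
    if (claimed > msg_len - HB_HEADER_LEN) {
        return -1;
    }
    if (claimed > out_cap) {
        return -1;
    }
    memcpy(out, msg + HB_HEADER_LEN, claimed);
    return (long)claimed;
}
```

Without the comparison against msg_len, a request claiming 65535 bytes while carrying only one would cause a read far beyond the received data.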

Defensive Coding Patterns: Principles in Practice

It’s not just about individual fixes, but habits that pervade your coding style:

1. Prefer Encapsulation

Wrap buffer manipulations in functions that expose safe interfaces.

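    void set_username(char *dest, size_t dest_size, const char *username) {
        if (dest == NULL || username == NULL || dest_size == 0) {
            return; // Nothing can be stored safely
        }
        strncpy(dest, username, dest_size - 1);
        dest[dest_size - 1] = '\0'; // strncpy does not terminate on truncation
    }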

2. Minimize Direct Buffer Manipulation

Abstract unsafe operations behind safer data structures (like STL containers in C++ or safe string APIs).
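In plain C, a small struct that carries its own length can play a similar role. The following is a rough sketch under assumed names (bounded_buf, bounded_buf_append, BUF_CAPACITY); a real project would size and name these to suit its needs.

```c
#include <stddef.h>
#include <string.h>

#define BUF_CAPACITY 64

/* Hypothetical fixed-capacity byte buffer that tracks how much is in use. */
typedef struct {
    unsigned char data[BUF_CAPACITY];
    size_t len;                      /* invariant: len <= BUF_CAPACITY */
} bounded_buf;

void bounded_buf_init(bounded_buf *b) {
    b->len = 0;
}

/* Appends n bytes from src. Returns 0 on success, or -1 if the bytes would
 * not fit or an argument is NULL; the buffer is left unchanged on failure. */
int bounded_buf_append(bounded_buf *b, const void *src, size_t n) {
    if (b == NULL || src == NULL) {
        return -1;
    }
    /* Compare against the remaining space; this form cannot wrap around. */
    if (n > BUF_CAPACITY - b->len) {
        return -1;
    }
    memcpy(b->data + b->len, src, n);
    b->len += n;
    return 0;
}
```

Callers never index data directly, so the bounds check lives in exactly one place.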

3. Assume Worst-Case Data

Always code defensively—never assume input is well-formed or ‘just right’ in length.

4. Consistent Code Reviews and Static Checking

Make it policy to require static analysis or at least thorough peer review for all code changes.

5. Document Buffer Sizes Clearly

Ambiguity is an enemy—write clear comments describing the intent, size, and limit of every buffer.

Practical Real-World Missteps—and How to Avoid Them

Case 1: Statically Sized Network Buffers

Many network-facing applications allocate fixed-size buffers for protocol processing. If an attacker sends a payload larger than the buffer and your code doesn’t enforce lengths, the results range from subtle data corruption to remote code execution.

Fix: Always parse incoming packet headers first to get size fields—then enforce sanity limits both on receipt and on processing.

Case 2: Environment Variables and Command-Line Arguments

If you copy these into small local buffers without checks, attackers can exploit your program at launch.

Fix: Use robust argument parsing utilities that enforce size and structure rather than rolling your own routines.
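Where a value does have to be copied by hand, checking its length first keeps the copy safe. A minimal sketch for environment variables, using the standard getenv call; the wrapper name get_env_checked and its return codes are assumptions for illustration.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper: copies the value of environment variable `name`
 * into dest (capacity dest_size). Returns 0 on success, or -1 if the
 * variable is unset, too long for dest, or the arguments are invalid.
 * On failure with a valid dest, dest is set to the empty string. */
int get_env_checked(const char *name, char *dest, size_t dest_size) {
    if (name == NULL || dest == NULL || dest_size == 0) {
        return -1;
    }
    dest[0] = '\0';
    const char *value = getenv(name);
    if (value == NULL) {
        return -1;
    }
    size_t len = strlen(value);
    if (len >= dest_size) {
        return -1;                    /* reject instead of truncating */
    }
    memcpy(dest, value, len + 1);     /* len + 1 copies the terminator too */
    return 0;
}
```

The same pattern applies to argv entries: measure with strlen, compare against the destination size, and only then copy.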

Embedded and IoT Coding: Special Concerns

Resource-constrained programming in embedded devices and IoT amplifies buffer overflow risks. Not only do developers reach for C/C++ for performance or size savings, but embedded runtimes may lack hardware memory protections common in desktops and servers.

Actionable Advice

- Use static analysis—tools like PC-lint, Cppcheck, or Splint specialize in finding low-level C bugs.

- Carefully review every external input path (e.g., radio, Bluetooth, serial ports) for missing size and type checks.

- Consider a defense-in-depth approach: deploy watchdog timers, use memory protection units (MPUs), and fail safely in case of error.

Cultivate a Security-First Culture

Buffer overflow prevention isn’t just a technical discipline; it’s a team mindset. Organizations that perform well:

- Make secure coding part of onboarding and routine training.

- Share lessons and incidents: when a bug is found, turn it into a teaching moment—rather than blame.

- Invest in ongoing education: keep teams current on vulnerabilities, exploitation techniques, and defenses.

- Reward careful, defensive coding practices in performance reviews.

What Lies Ahead: The Evolution of Safe Software

As programming languages and development frameworks continue to evolve, we’ll see safer software by design become a reality. Hardware manufacturers push for memory tagging and runtime safety checks at the silicon level. Compilers grow smarter—Clang and GCC already flag potentially hazardous patterns with new diagnostic features. Meanwhile, security-first languages like Rust inspire new approaches to systems programming.

There is still no field-tested panacea; buffer overflows will continue to challenge coders for decades. By following the best practices above and committing to a culture of persistent vigilance, you can greatly reduce the chance that your code becomes another headline in the history of software disasters. Defensive coding is not only a technical shield; it’s an investment in your reputation, users, and the future of secure technology.
