The Rise of Automated Hypothesis Testing Tools: Opportunity or Risk?

Introduction

In research and product development, testing a hypothesis is a foundational step toward validating ideas and shaping strategy. Recently, a new class of software has emerged: automated hypothesis testing tools. These platforms promise to streamline the traditional, often time-consuming process of hypothesis testing, enabling quicker insights and more data-driven decisions. But are these tools unequivocally a boon, or do they carry inherent risks that could undercut their promise? This article examines the rise of automated hypothesis testing tools: the opportunities they unlock, the accompanying risks, and what the future might hold.

Understanding Automated Hypothesis Testing Tools

Automated hypothesis testing tools use algorithms, machine learning, and statistical automation to evaluate hypotheses without requiring extensive manual input. In classical statistical testing, analysts must prepare the data, select an appropriate test, and interpret the results by hand; automated tools aim to simplify each of these steps.

How Do They Work?

These platforms typically ingest your dataset and an explicit or implicit hypothesis, then proceed to:

- Apply a suite of relevant statistical tests, sometimes suggesting the best test based on data properties.

- Automatically calculate metrics such as p-values, confidence intervals, and effect sizes.

- Visualize results for easier interpretation.

- Provide guidance or even automated recommendations on whether to reject or fail to reject the null hypothesis.

For example, companies like DataRobot and Statsig offer hypothesis testing as part of larger AI and experimentation suites, enabling non-experts to run complex analyses efficiently.
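To make the steps above concrete, here is a minimal sketch of what such a tool might compute for a simple A/B comparison of conversion rates. It is not how any named product works: the function `two_proportion_test`, the `ABReport` class, and the visitor counts are hypothetical, and the sketch assumes a two-sided two-proportion z-test with samples large enough for the normal approximation.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import NormalDist


@dataclass
class ABReport:
    p_value: float
    ci_low: float       # lower bound of the CI on the rate difference
    ci_high: float      # upper bound of the CI on the rate difference
    effect_size: float  # absolute difference in conversion rates (B - A)
    reject_null: bool


def two_proportion_test(conv_a, n_a, conv_b, n_b, alpha=0.05):
    """Two-sided z-test for a difference between two conversion rates."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    diff = rate_b - rate_a

    # Pooled standard error under the null hypothesis (equal rates).
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se_null = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = diff / se_null
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Unpooled standard error for the confidence interval on the difference.
    se_diff = sqrt(rate_a * (1 - rate_a) / n_a + rate_b * (1 - rate_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)

    return ABReport(
        p_value=p_value,
        ci_low=diff - z_crit * se_diff,
        ci_high=diff + z_crit * se_diff,
        effect_size=diff,
        reject_null=p_value < alpha,
    )


# Hypothetical data: 200 of 2,000 visitors convert on A, 260 of 2,000 on B.
report = two_proportion_test(conv_a=200, n_a=2000, conv_b=260, n_b=2000)
print(report)
```

The report bundles the p-value, confidence interval, effect size, and a reject/fail-to-reject flag, mirroring the outputs listed above. The sketch does not guard against degenerate inputs, such as zero conversions in both groups, which a real tool would need to handle.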

Opportunities: Why Automated Hypothesis Testing Matters

1. Speed and Efficiency

Traditional hypothesis testing requires domain knowledge, careful data cleaning, and precise test selection, all of which are labor-intensive. Automated tools significantly reduce the time from data collection to decision, enabling rapid experimentation, which is especially useful in fast-paced industries such as e-commerce and software development.

Case in point: A/B testing platforms like Optimizely, when paired with automated hypothesis tools, accelerate product iteration cycles and marketing campaign adjustments.

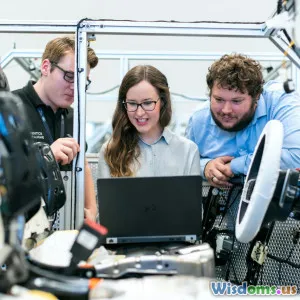

2. Democratization of Data Science

By abstracting the complexity of statistical methods, these tools empower professionals without advanced statistical training to make informed decisions. For example, marketers or product managers can validate customer assumptions without waiting for a dedicated data team.

3. Consistency and Reproducibility

Human error, such as incorrect test choice or data mishandling, can invalidate hypothesis results. Automation enforces standardized workflows that reduce subjectivity and promote reproducibility—a cornerstone of scientific integrity.

4. Cost Reduction

Smaller organizations lacking in-house statistical expertise can leverage automated tools as affordable alternatives to expensive consultancy or hiring specialists. This lowers the barrier to data-driven innovation worldwide.

Risks and Challenges

1. Overreliance and Oversimplification

While these tools simplify testing, they may also encourage uncritical reliance. Statistical testing requires context, understanding assumptions (like normality or independence), and interpreting nuances that an automated system might overlook.

E.g., blindly accepting p-values without considering study design or data quality can lead to false discoveries.
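One way false discoveries arise is running many tests and treating every p-value below 0.05 as a finding. The simulation below is an illustrative sketch, not taken from any particular tool: it runs hypothetical A/A experiments in which both groups come from the same distribution, so any "significant" result is a false positive. It then applies the Benjamini-Hochberg procedure as one possible correction. The experiment counts, sample sizes, and function names are assumptions chosen for the example.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

random.seed(42)  # fixed seed so the run is repeatable

N_EXPERIMENTS = 1000
N_PER_ARM = 100
ALPHA = 0.05


def aa_test_p_value():
    """Two-sided z-test on two samples drawn from the SAME distribution."""
    a = [random.gauss(0, 1) for _ in range(N_PER_ARM)]
    b = [random.gauss(0, 1) for _ in range(N_PER_ARM)]
    # Variance is known to be 1, so the standard error is sqrt(2 / n).
    z = (fmean(b) - fmean(a)) / sqrt(2 / N_PER_ARM)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def benjamini_hochberg_rejections(p_values, alpha):
    """Return how many hypotheses the Benjamini-Hochberg procedure rejects."""
    m = len(p_values)
    k_max = 0
    # Find the largest rank k whose sorted p-value satisfies p <= (k / m) * alpha.
    for k, p in enumerate(sorted(p_values), start=1):
        if p <= k / m * alpha:
            k_max = k
    return k_max


p_values = [aa_test_p_value() for _ in range(N_EXPERIMENTS)]
naive_hits = sum(p < ALPHA for p in p_values)
bh_hits = benjamini_hochberg_rejections(p_values, ALPHA)

print(f"Uncorrected results with p < {ALPHA}: {naive_hits} of {N_EXPERIMENTS}")
print(f"Rejections after Benjamini-Hochberg: {bh_hits}")
```

Because there is no real effect in any experiment, roughly 5% of the uncorrected tests are expected to clear the threshold by chance alone. The corrected count can never exceed the uncorrected one.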

2. False Sense of Confidence

Automation might mask complexities, leading decision-makers to place undue trust in results. Overconfidence in automated outputs can propagate flawed strategies or faulty science.

A study published in Nature Communications (2022) highlighted the rising risks of reproducibility failures due to automated analytics tools applied without rigorous validation.

3. Data Quality and Bias

These tools rely on input data integrity. Poor-quality or biased data can yield misleading conclusions. Automation cannot rectify inherent data flaws or correct for sampling biases.
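Some data problems can at least be detected before a test is run. As one hedged illustration, the sketch below checks an A/B experiment for a sample ratio mismatch, meaning group sizes that differ from the intended split by more than chance would explain. It uses a chi-square goodness-of-fit test with one degree of freedom. The function name, the 50/50 default split, and the strict 0.001 threshold are assumptions for this example, not features of any specific tool.

```python
from math import erfc, sqrt


def sample_ratio_mismatch(n_a, n_b, expected_share_a=0.5, alpha=0.001):
    """Flag group sizes that deviate from the planned traffic split.

    Returns (p_value, flagged). For one degree of freedom, the chi-square
    survival function equals erfc(sqrt(chi2 / 2)).
    """
    total = n_a + n_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((n_a - expected_a) ** 2 / expected_a
            + (n_b - expected_b) ** 2 / expected_b)
    p_value = erfc(sqrt(chi2 / 2))
    return p_value, p_value < alpha


# Hypothetical counts: a small imbalance versus a large one.
print(sample_ratio_mismatch(5050, 4950))  # small deviation, not flagged
print(sample_ratio_mismatch(5300, 4700))  # large deviation, flagged
```

A flagged mismatch does not say what went wrong; it only signals that assignment or logging may be broken, so the downstream p-values should not be trusted until someone investigates.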

4. Ethical Concerns

Automated testing in sensitive areas such as healthcare or criminal justice can inadvertently entrench biases if not carefully monitored. Algorithms might amplify prejudiced decision-making under the guise of objective science.

5. Lack of Transparency

Some tools operate as "black boxes" with proprietary algorithms, making it difficult to audit or understand how conclusions are reached. This opacity can hinder trust and real-world deployment.

Real-World Examples Illustrating the Balance

Success Story: Airbnb

Airbnb has harnessed automated hypothesis testing to optimize its user experience through rapid A/B experimentation. Automated statistical analysis quickly validates or rules out interface changes, improving customer conversion and satisfaction while minimizing manual labor.

As Airbnb’s Experimentation Lead put it: "Automation lets us run thousands of tests yearly without sacrificing statistical rigor. It’s revolutionizing product development."

Cautionary Tale: Early COVID-19 Research

During the initial phase of the COVID-19 pandemic, rushed automated statistical analyses fueled premature conclusions in some studies, raising warnings about automated tools lacking contextual peer review.

Dr. Staton, epidemiologist, reflected: "Automation accelerates research—but we must balance speed with caution, or risk misinformation."

Navigating the Future: Best Practices and Recommendations

To maximize benefits and curb risks of automated hypothesis testing tools, organizations should:

- Combine automation with expert oversight—interpret automated outputs using domain knowledge.

- Implement checks for data quality and bias detection before analysis.

- Demand transparency from tool providers regarding algorithms used.

- Use automation to augment—not replace—the scientific method.

- Train stakeholders on understanding statistical outputs beyond surface-level metrics.

Emerging advancements in explainable AI (XAI) and model interpretability may soon address some issues around transparency, helping users trust and validate automated findings.

Conclusion

The rise of automated hypothesis testing tools marks a transformative epoch for experimentation and decision-making across fields. Their ability to streamline complex analysis, democratize data science, and reduce costs heralds significant opportunities. However, this power is coupled with dangers related to overreliance, data quality, and ethical considerations.

Ultimately, whether these tools become unparalleled assets or problematic pitfalls depends on human stewardship—combining the speed of automation with critical wisdom and context. By doing so, organizations can harness automated hypothesis testing not just as a novelty, but as a trustworthy partner in the quest for knowledge and innovation.

As these technologies mature, the balance of opportunity and risk will define the future landscape of research, business, and beyond.
