Why Number Theory Matters In Cryptography Today

16 min read Explore how number theory underpins modern cryptography and secures digital communication in today's cyber-driven world. (0 Reviews)

Why Number Theory Matters In Cryptography Today

In an era where our personal privacy and digital transactions increasingly depend on encrypted connections, the unassuming branch of mathematics called number theory quietly sits at the heart of it all. Number theory, once mainly concerning the mystical properties of primes and the peculiarities of divisibility, is now indispensable to the protocols that shield governments, businesses, and individuals from cyber threats. But why has this centuries-old discipline become so central, and how do its principles shape the modern landscape of cryptography?

The Foundation: Primes and Public-Key Systems

There is a profound simplicity behind one of the internet’s most vital guards: prime numbers. Prime numbers — those integers greater than 1 with no positive divisors other than 1 and themselves — form the seeds of cryptosystems that undergird secure online transactions, digital signatures, and identity validation.

RSA Encryption, invented in the late 1970s, provided a breakthrough: encryption that didn't require a shared secret upfront, perfect for open digital communications. RSA relies on the difficulty of factoring the product of two large primes, a task still considered extremely hard for classical computers as the numbers grow larger.

Example: How RSA Uses Number Theory

- Key Generation: Select two large random primes, ( p ) and ( q ), and compute their product ( n = p \times q ) — the modulus.

- Public and Private Keys: The public key includes ( n ) and another number called the public exponent. The private key relates to the difficult-to-compute value derived from ( p ) and ( q ).

- Security? Breaking RSA reduces to efficiently factoring ( n ) back into ( p ) and ( q ), a problem with super-polynomial complexity for classical methods if the primes are chosen correctly.

Thus, number theory is not just abstract — it’s the engine that makes public-key cryptography secure and usable by billions.

Modular Arithmetic: The Language of Secure Algorithms

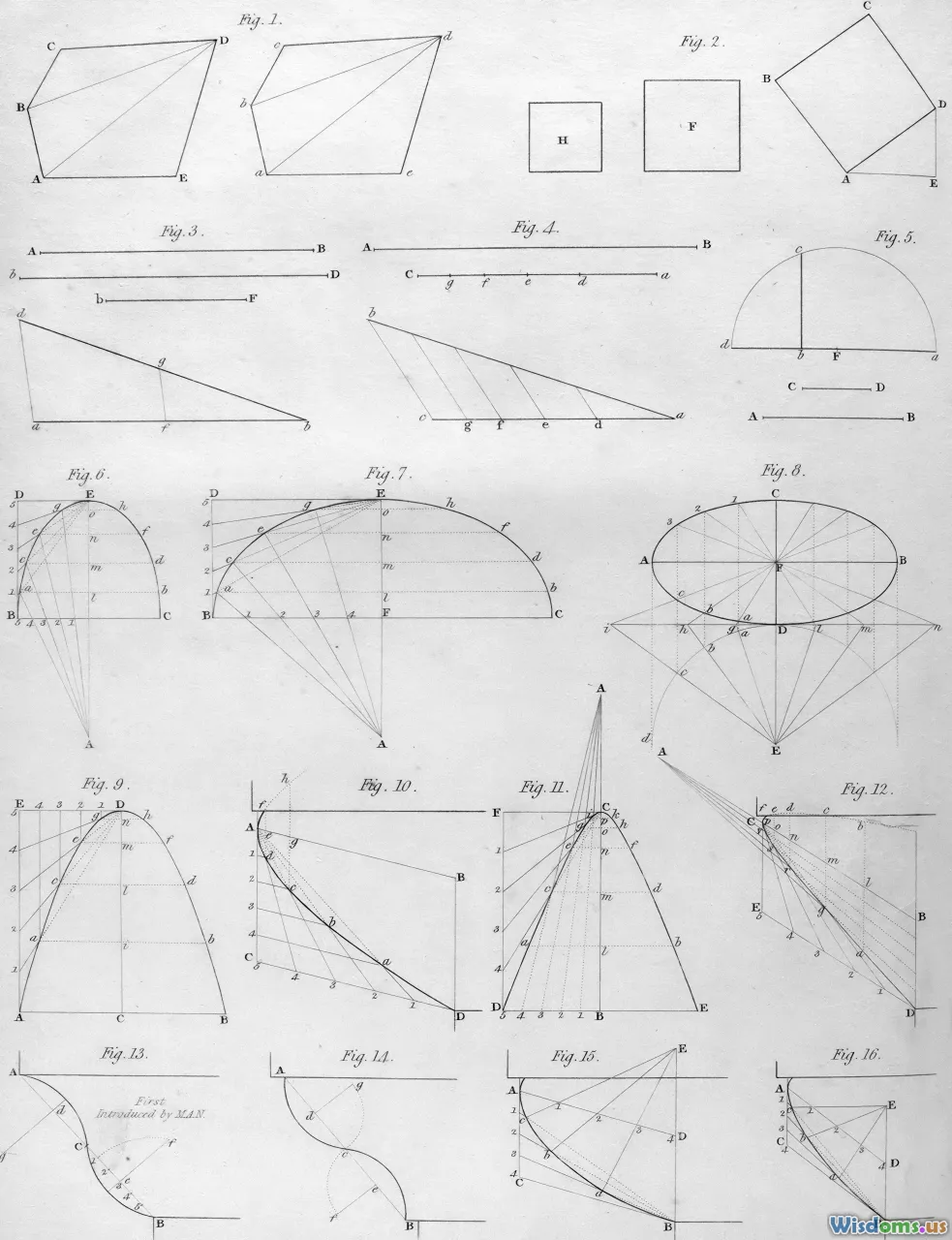

Many cryptographic algorithms extensively use modular arithmetic — calculations where numbers wrap around after reaching a certain value (the modulus), much like a clock.

Elliptic Curve Cryptography (ECC), now widespread in mobile devices and secure-sharing protocols, relies on point addition rules built out of modular arithmetic over finite fields.

- For AES encryption, although not considered public-key crypto, plaintext and keys are scrambled through fixed-length block operations influenced by modular addition and multiplication underpinned by finite fields, ensuring diffusion and confusion (two pillars of cryptographic design).

Example: Diffie-Hellman Key Exchange

The classic Diffie-Hellman Protocol lets two parties establish a shared secret over a public channel. Here’s how modular arithmetic makes it work:

- Agree on a large prime ( p ) and generator ( g ).

- Each party selects a secret number and sends ( g^a \mod p ) or ( g^b \mod p ) to the other.

- Thanks to the properties of modular exponentiation, both arrive independently at their shared secret: ( (g^b)^a \mod p = (g^a)^b \mod p ).

Crucially, while it’s easy to compute powers modulo large primes, reversing the process (finding discrete logarithms) remains computationally infeasible, granting security against interception.

The Prime Number Race: Generating, Testing, and Using Large Primes

Prime numbers aren’t just taken from a list — cryptographic systems require the active, on-the-fly generation of primes dozens to thousands of bits long.

Generating Huge Primes

- Probabilistic Primality Tests: Tests like the Miller-Rabin test rapidly tell if a candidate is probably prime. When the number passes a sufficient number of iterations, the odds of a false prime are vanishingly small.

- Safe Primes: Some applications prefer primes ( p ) such that ( (p-1)/2 ) is also prime, minimizing the risk of subtle attacks.

Fact: According to the Prime Number Theorem, as numbers increase in size, the density of primes thins out: roughly one in every ( n / \ln(n) ) integers near ( n ) will be prime. Thanks to quick selection and testing algorithms, we can still find cryptographically suitable primes efficiently, even for keys with 2048 bits or more.

The Challenges

Generating strong, random primes is not trivial — hardware flaws and software bugs have undermined widely-deployed cryptosystems. The infamous Debian OpenSSL debacle in 2006-2008 resulted from predictable random number generation, enabling attackers to reconstruct supposedly secret RSA keys.

Factoring, Discrete Logarithms, and Hard Problems

Much of cryptography rests on certain problems being mathematically (and practically) hard — tractable for honest users, infeasible for adversaries.

RSA and Factoring

RSA keys depend on the assumption that factoring a large semiprime (a product of two primes) is hard. While mathematicians have developed sophisticated algorithms like the General Number Field Sieve (GNFS), breaking modern key sizes (2048 bits and up) would still take astronomical computing power.

Discrete Logarithms

The difficulty of reversing modular exponentiation (that is, finding ( a ) given ( g^a \mod p )) protects both Diffie-Hellman key exchanges and elliptic curve cryptosystems.

- Finite Field Discrete Logs: For groups based on integers modulo a prime, the discrete log problem is well-studied and known to be tough at large sizes.

- Elliptic Curve Discrete Logs: For group operations on elliptic curves, the best-known attacks fare worse than for ordinary finite fields, meaning smaller key sizes can achieve high security.

Why Do These Hardness Assumptions Matter?

Improvements in factoring or discrete log algorithms — or new breakthroughs in number theory — could squeeze the timeline before cryptosystems need to upgrade or retire. The ongoing search for new algorithms keeps the field closely tied to number theory research.

Elliptic Curves: Complex Number Theory, Practical Protection

Elliptic Curve Cryptography (ECC) embodies the modern fusion of deep number theory and practical cryptography. Rather than plain arithmetic with big primes, ECC employs the more intricate group structure of points on a curve defined by an equation like ( y^2 = x^3 + ax + b ) over a finite field.

Practical implication: For comparable strength, ECC uses dramatically smaller keys and signatures — a 256-bit ECC key is roughly equivalent to a 3072-bit RSA key. This makes ECC especially attractive for resource-constrained devices: smartphones, embedded IoT devices, and even satellites.

Real-world Usage

- Signal, the secure messaging app, uses ECC for its private and public key operations.

- Most modern web browsers use TLS with the Curve25519 scheme, offering both performance and resistance to certain classes of attacks.

Insights: Number Theory’s Subtleties Still Matter

Recent cryptanalytic results expose vulnerabilities when specific, poorly chosen curves are used (such as the Dual_EC_DRBG case, where curve characteristics were manipulated). These incidents further reveal that sophisticated number-theoretic analysis remains essential, both to select safe parameters and to avoid falling prey to subtle, exploitable mathematical properties.

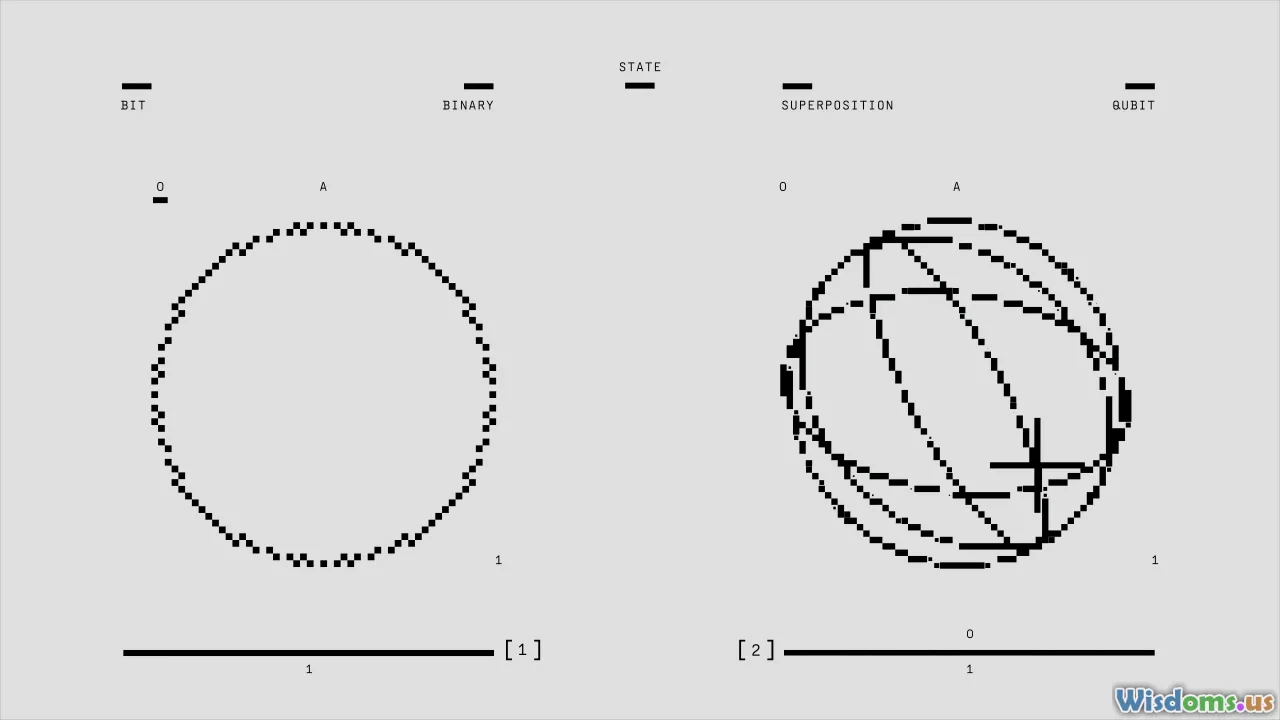

Lattices and the Post-Quantum Challenge

The dawn of quantum computing threatens to upend number-theory-based cryptosystems; integer factoring and discrete logs could become easy thanks to Shor’s algorithm. But number theory remains central to the next generation of cryptography — just in new forms.

Lattice-Based Cryptography

Lattices are multi-dimensional grids of points characterized by sets of linearly independent vectors. Hard lattice problems such as SVP (Shortest Vector Problem) and LWE (Learning With Errors) underpin many post-quantum approaches and rely on mathematical challenges vastly different, but still rooted in algebraic number theory.

- NTRU uses structured lattices from polynomial rings — a sort of cross-breed between abstract algebra and number theory.

- FrodoKEM proposes key exchange based on standard lattices, exploiting the difficulty of certain approximation problems in high dimensions.

Why Number Theory Persists Post-Quantum

While primes and modular exponentiation might not last, number theory’s fabric adapts: research into structured (or unstructured) matrices mod primes and properties of high-dimensional discrete spaces reveals just how essential this realm remains. Even quantum-secure cryptographic constructs must contend with subtle number-theoretic properties and their interactions with new algorithms.

Hash Functions and Randomness: Where Number Theory Hides in Plain Sight

At another level, number theory’s influence continues inside hash functions and random number generators.

Randomness Matters

If encryption keys aren’t truly random, then brute force and side-channel attacks become practical. For high-quality randomness, cryptosystems sometimes harvest entropy from hardware sources and mix it using number-theoretic transformations (like modular multiplication or the properties of primitive roots).

Secure Hashes: Mixing via Number Theory

Some hash functions, especially those used for digital signatures (e.g., SHA-2, SHA-3), use mixing and non-linear modular arithmetic, permutations, and field operations to ensure that outputs appear random and independent of input relationships. The strength of hash-based cryptography (like Merkle trees in blockchains) often relies on number-theoretic insights for provable resistance against collisions and preimage attacks.

Attacks from Number Theory: Understanding and Preventing Exploits

As the mathematical underpinnings go, so do the weaknesses. Critical cryptographic failures are often traced to number-theoretic properties that were overlooked or misunderstood.

- RSA Small Public Exponent Attack: When the public exponent is too small and messages aren’t randomly padded, attackers can uncover plaintext from ciphertext using simple algebraic attacks.

- Primality Tests Gone Wrong: Flawed or insufficient probabilistic primality tests have seeded weak key generation, allowing attackers to factor moduli that should have been unbreakable.

- Curve Parameter Manipulation: As seen in the disastrous Dual_EC_DRBG incident, cryptographic backdoors can be installed by choosing random-looking but specifically vulnerable curve parameters, exploiting subtle properties of elliptic curves.

Best practice now requires the cryptographic community to publicly review and vet all mathematical underpinnings — a practical acknowledgment of how much number theory dictates both strength and vulnerability.

The Ongoing Dance: Mathematicians, Engineers, and Crypto Safeguards

Cryptography continues to benefit from — and challenge — the boundaries of number theory. Breakthroughs in fields like algebraic geometry or the study of function fields quickly feed into proposals for new cryptographic protocols.

- The development and refinement of advanced primality tests (such as the AKS primality test) enable even more efficient and reliable key generation.

- Deeper understandings of the structure and symmetries of algebraic objects have a direct impact on the creation of zero-knowledge proofs, multi-signature schemes, and secure multi-party computations.

The result is a vibrant feedback loop: cryptographers demand new hardness assumptions; number theorists seek proofs and clarifications; security engineers push boundaries in real-world applications.

Number theory is no longer isolated in ivory towers — it sits at the core of the world’s most sensitive infrastructure. As threats evolve and quantum computing beckons, the discipline’s rich landscape continues to provide both challenges and shields. The next time you send a message, cast a vote, or transfer funds online, you can thank the visionaries in number theory for keeping your information locked tight — at least, for now.

Rate the Post

User Reviews

Popular Posts