Future Trends in Measuring Consciousness: A Prediction-Based Analysis

Introduction

What does it truly mean to be conscious? For centuries, philosophers and scientists alike have grappled with this question, often describing consciousness as the subjective experience or awareness of the self and surroundings. Despite tremendous progress in neuroscience, measuring consciousness remains one of the most complex challenges in science. Traditional methods have relied largely on behavioral indicators and neural correlates, but both are indirect: they infer awareness from its outward signs or accompanying brain activity rather than measuring it directly.

In recent years, a new frontier has emerged: prediction-based analysis. This approach draws on predictive coding theory, machine learning, and computational neuroscience to obtain more objective, quantifiable measures of conscious states. This article explores future trends in measuring consciousness through the lens of prediction-based frameworks, highlighting the technological innovations, theoretical advances, and practical applications shaping the research landscape.

The Traditional Challenges in Measuring Consciousness

Limitations of Behavioral and Neural Correlate Approaches

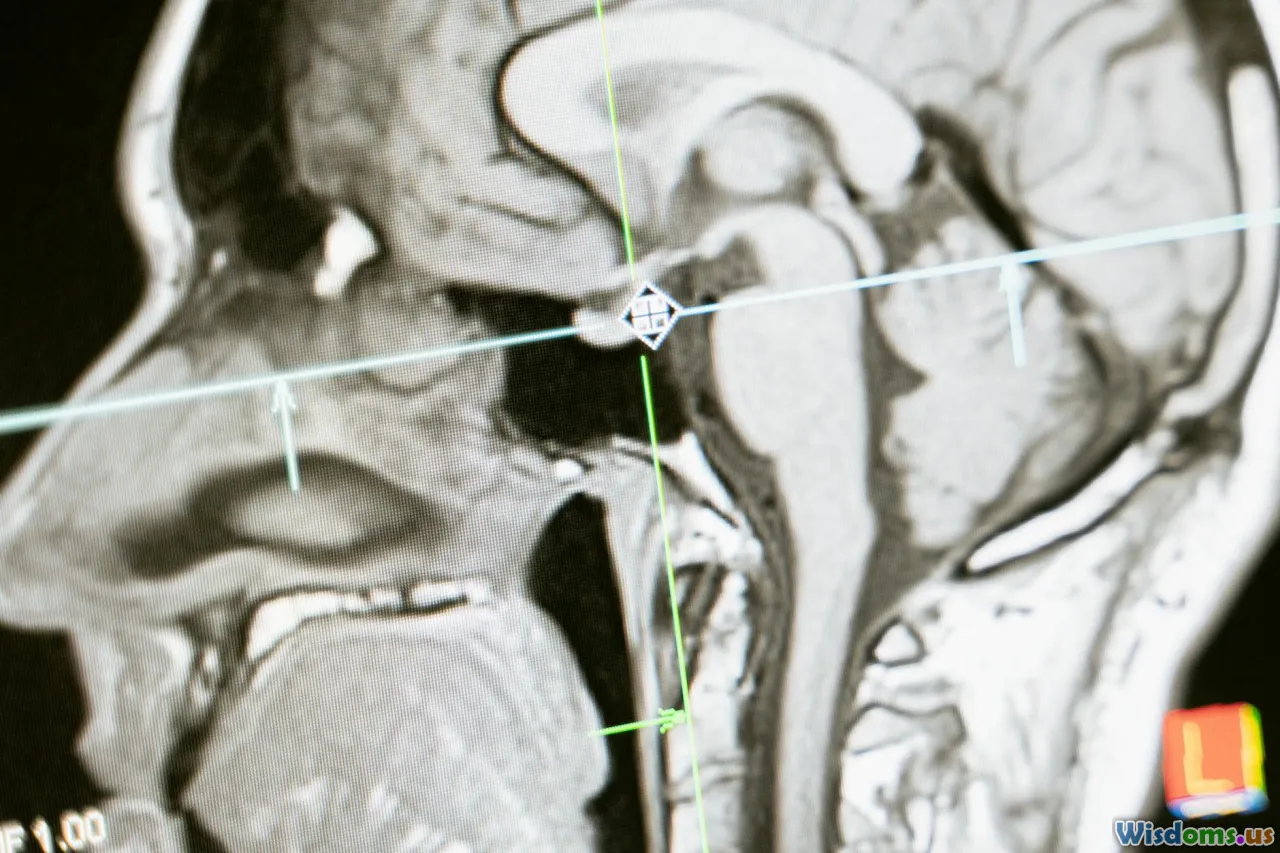

Historically, researchers have inferred consciousness from behavior, such as facial expressions, language, or responses to stimuli. In disorders of consciousness such as the vegetative state or the minimally conscious state, behavioral observation remains crucial. Meanwhile, functional neuroimaging and electrophysiological techniques such as fMRI and EEG have identified neural correlates of consciousness: specific patterns of brain activity associated with conscious experience.

However, these methods face several obstacles:

- Subjectivity and Ambiguity: Behavioral responses can be misinterpreted, and neural activity doesn’t always equate to conscious experience.

- Lack of Universality: Neural signatures differ between individuals and states, complicating standardization.

- Temporal Limitations: Consciousness is dynamic, but traditional methods offer static or delayed measures.

For example, the Coma Recovery Scale-Revised (CRS-R), a widely used clinical tool, depends heavily on observable responses and may therefore miss covert consciousness, especially in patients who cannot move or communicate.

Prediction-Based Theories as a New Paradigm

The Foundations of Predictive Coding

Prediction-based analysis stems from predictive coding theories in neuroscience. These theories propose that the brain continually generates predictions about incoming sensory input and works to minimize the error between those predictions and the actual input. On this view, conscious experience can be framed as an active inference process, in which predictions and prediction errors guide perception, attention, and awareness.
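
As a concrete illustration, here is the smallest possible version of this idea. A single hidden cause `phi` predicts a sensory input `u` through a linear-Gaussian model (u = phi + noise), and `phi` has a Gaussian prior. The estimate is refined by gradient steps that reduce precision-weighted prediction errors, in the style of textbook predictive-coding tutorials. This is a toy under those stated assumptions, not a model of the brain. The function names and parameters are hypothetical.

```python
def infer_cause(u, prior_mean, var_sensory, var_prior, lr=0.1, steps=200):
    """Estimate the hidden cause phi of input u by gradient descent on
    precision-weighted prediction errors (model: u = phi + noise, phi ~ prior).

    Assumes lr * (1/var_sensory + 1/var_prior) < 2 so the updates converge.
    """
    phi = prior_mean  # begin with the top-down expectation
    for _ in range(steps):
        eps_sensory = (u - phi) / var_sensory       # bottom-up error: input vs. prediction
        eps_prior = (phi - prior_mean) / var_prior  # error relative to the prior
        phi += lr * (eps_sensory - eps_prior)       # step that reduces both errors
    return phi


def posterior_mean(u, prior_mean, var_sensory, var_prior):
    """Closed-form Bayesian posterior mean, for checking the iterative estimate."""
    return (var_prior * u + var_sensory * prior_mean) / (var_prior + var_sensory)


print(infer_cause(2.0, 0.0, 1.0, 1.0))     # approximately 1.0
print(posterior_mean(2.0, 0.0, 1.0, 1.0))  # 1.0
```

With equal sensory and prior variances, the estimate settles halfway between the prior expectation and the input. That point is the Bayesian posterior mean for this model, so minimizing prediction error here amounts to exact inference.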

Karl Friston, a leading figure in this domain, describes the brain as a prediction machine that seeks to reduce surprise (Friston, 2010). This framework implies that measuring how the brain predicts and adapts to stimuli can offer a window into consciousness.
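
In the free-energy formulation (Friston, 2010), "surprise" has a precise meaning: the negative log-probability -ln p(x) of sensory data x under the brain's generative model. Surprise is generally intractable to evaluate directly. The theory therefore has the brain minimize variational free energy F, which is an upper bound on surprise. The standard decomposition uses hidden causes z, a generative model p(x, z), and an approximate posterior q(z):

```latex
F(q) = \mathbb{E}_{q(z)}\big[\ln q(z) - \ln p(x, z)\big]
     = -\ln p(x) + D_{\mathrm{KL}}\big(q(z) \,\|\, p(z \mid x)\big)
     \;\ge\; -\ln p(x)
```

The Kullback-Leibler term is never negative. Driving F down therefore both tightens the bound on surprise and pulls q(z) toward the true posterior.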

How Prediction-Based Measurement Differs

Prediction-based measurement quantifies the accuracy and dynamics of these brain-generated predictions by fitting computational models to neural recordings. This approach enables:

- Dynamic Tracking: Continuous assessment of prediction errors that captures moment-to-moment changes.

- Objective Metrics: Algorithms that quantify prediction discrepancies, yielding more standardized indices.

- Individual Variability Insights: Modeling of each person's neural responses to stimuli.
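
One way to turn these ideas into a number is sketched below. The simplifying assumptions are ours, not the article's. A first-order autoregressive (AR(1)) model is fit to an individual's own baseline recording, which is the "personalized" part. Each later window is then scored by its mean squared one-step prediction error divided by the window's variance. Values near 0 mean the signal was well predicted. Values near or above 1 mean the baseline model did no better than the window's own mean. The function names and the choice of AR(1) are illustrative, not an established clinical index.

```python
def fit_ar1(baseline):
    """Least-squares AR(1) fit x[t] ~ a * x[t-1] on the mean-removed baseline."""
    mean = sum(baseline) / len(baseline)
    x = [v - mean for v in baseline]
    num = sum(x[t] * x[t - 1] for t in range(1, len(x)))
    den = sum(x[t - 1] ** 2 for t in range(1, len(x)))
    return mean, (num / den if den else 0.0)


def prediction_error_index(signal, baseline, window=50):
    """Normalized one-step prediction error per non-overlapping window.

    Uses an AR(1) model fit to this individual's baseline. Returns one value per
    window: mean squared prediction error divided by the window's variance.
    """
    mean, a = fit_ar1(baseline)
    indices = []
    for start in range(0, len(signal) - window + 1, window):
        seg = [v - mean for v in signal[start:start + window]]
        errors = [seg[t] - a * seg[t - 1] for t in range(1, len(seg))]  # one-step errors
        mse = sum(e * e for e in errors) / len(errors)
        seg_mean = sum(seg) / len(seg)
        var = sum((v - seg_mean) ** 2 for v in seg) / len(seg)
        indices.append(mse / var if var else float("nan"))
    return indices
```

A continuing sine wave scores near 0 against a sine-wave baseline. Further white noise scores near 1 against a white-noise baseline. A real pipeline would also need artifact rejection, multiple channels, and a validated model in place of AR(1).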

Emerging Technologies Advancing Consciousness Measurement

Machine Learning and AI Integration

Machine learning algorithms can detect complex patterns in brain data (EEG, MEG, fMRI). For instance, recent studies have reported using deep learning on raw EEG data to classify states of consciousness in ICU patients with over 90% accuracy. If such results generalize, they would be a significant advance over manual assessment.

AI-driven systems can estimate the likelihood that a patient is conscious even in the absence of a behavioral response, informing prognosis and treatment decisions.
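
The studies described above use deep networks on raw EEG. The sketch here deliberately swaps in something much simpler: a logistic-regression classifier trained by batch gradient descent on two hand-crafted features. Its purpose is to show how such a system outputs a probability of consciousness rather than a hard label. The feature names, data values, and labels are synthetic and hypothetical. Nothing here reproduces a published model or its accuracy.

```python
import math


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.5, epochs=2000):
    """Fit weights and bias by batch gradient descent on the mean log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            grad_w = [g + err * xj for g, xj in zip(grad_w, xi)]
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def prob_conscious(features, w, b):
    """Estimated probability that a recording comes from a conscious state."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, features)) + b)


# Hypothetical per-recording features, e.g. [prediction-error response, signal complexity],
# scaled to 0-1. Labels: 1 = conscious, 0 = unconscious. Synthetic values only.
X = [[0.9, 0.8], [0.8, 0.7], [0.7, 0.9], [0.2, 0.1], [0.1, 0.3], [0.3, 0.2]]
y = [1, 1, 1, 0, 0, 0]
w, b = train_logistic(X, y)
print(round(prob_conscious([0.85, 0.8], w, b), 3))  # high probability
print(round(prob_conscious([0.15, 0.2], w, b), 3))  # low probability
```

Because the output is a probability, a clinical tool could report graded confidence or defer to a human when the estimate sits near 0.5. A bare conscious/unconscious label cannot do that.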

Real-time Neurofeedback and Brain-Computer Interfaces (BCI)

Prediction-based models also make it possible to build real-time neurofeedback systems that adapt to an individual's ongoing brain predictions. BCIs may help patients with disorders of consciousness communicate by decoding their neural prediction patterns and translating brain signals into commands or speech.

For example, recent experiments with patients with locked-in syndrome have reported successful communication using real-time monitoring of prediction errors.
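
Here is a minimal sketch of such a real-time loop, under assumptions of our own. An adaptive linear predictor (normalized least-mean-squares, NLMS) forecasts each incoming sample from the previous few. An exponential moving average of the squared prediction error is then compared with a threshold that could, for example, trigger a feedback cue. The class name, parameters, and threshold are hypothetical. Real BCIs use far richer decoders, multichannel data, and calibrated thresholds.

```python
class OnlinePredictionErrorMonitor:
    """Hypothetical streaming monitor for a single signal channel.

    A normalized LMS (NLMS) linear predictor forecasts each sample from the
    previous `order` samples and adapts its weights after every sample. An
    exponential moving average (EMA) of the squared prediction error is then
    compared with a threshold.
    """

    def __init__(self, order=4, step=0.1, smoothing=0.05, threshold=0.5):
        self.weights = [0.0] * order
        self.history = [0.0] * order  # most recent sample first
        self.step = step              # NLMS step size, stable for 0 < step < 2
        self.smoothing = smoothing    # EMA weight given to the newest squared error
        self.threshold = threshold
        self.ema_error = 0.0

    def update(self, sample):
        """Process one sample; return True when the smoothed error exceeds the threshold."""
        prediction = sum(w * h for w, h in zip(self.weights, self.history))
        error = sample - prediction
        # Normalizing by input power keeps the adaptation rate independent of signal scale.
        power = sum(h * h for h in self.history) + 1e-8
        self.weights = [w + self.step * error * h / power
                        for w, h in zip(self.weights, self.history)]
        self.history = [sample] + self.history[:-1]
        self.ema_error += self.smoothing * (error * error - self.ema_error)
        return self.ema_error > self.threshold
```

Given a steady sine wave, the predictor learns it and the smoothed error stays below threshold. When the input switches to unpredictable noise, the error climbs past the threshold and `update` starts returning True.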

Multimodal Fusion of Data

Future trends involve combining diverse data streams such as neural imaging, electrophysiology, physiological monitoring (heart rate variability), and behavioral data into unified predictive frameworks. This fusion enriches interpretation and refines measurement accuracy.
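
In predictive-coding terms, fusing modalities amounts to weighting each source by its precision (inverse variance). The sketch shows the simplest version of that rule. It assumes each modality already yields an independent Gaussian estimate of the same hypothetical "awareness score". That independence assumption rarely holds in practice, and the modality names and numbers in the example are illustrative only.

```python
def fuse_estimates(estimates):
    """Precision-weighted (inverse-variance) fusion of independent Gaussian estimates.

    `estimates` maps a modality name to a (mean, variance) pair on a shared scale.
    Returns (fused_mean, fused_variance).
    """
    precisions = {name: 1.0 / var for name, (_, var) in estimates.items()}
    total = sum(precisions.values())
    fused_mean = sum(precisions[name] * mean for name, (mean, _) in estimates.items()) / total
    return fused_mean, 1.0 / total  # fused variance is the inverse of total precision


# Hypothetical per-modality estimates of a 0-1 awareness score: (mean, variance).
readings = {"eeg": (0.8, 0.01), "heart_rate_variability": (0.4, 0.04)}
mean, var = fuse_estimates(readings)
print(round(mean, 3), round(var, 4))
```

The fused mean lands closer to the more precise EEG estimate. The fused variance is smaller than either input's, which is the sense in which fusion refines measurement accuracy under these assumptions.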

Practical and Ethical Implications

Clinical Applications

Prediction-based measurements could transform the diagnosis and management of disorders of consciousness. They could offer:

- More reliable detection of covert awareness.

- Personalized treatment strategies based on real-time monitoring of conscious state.

- Better assessment of anesthetic depth during surgery, enhancing patient safety.
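
Research on covert awareness and anesthesia commonly uses auditory "oddball" sequences, in which a rare deviant tone violates an established expectation. The sketch quantifies that expectation in one simple way. It assumes an ideal observer that predicts each tone from smoothed counts of the tones heard so far, and it scores each tone by its Shannon surprise in bits. The function is hypothetical. It models the stimulus statistics, not a patient's brain response, which would have to be measured separately and compared against these values.

```python
import math
from collections import Counter


def sequence_surprise(tones, alphabet):
    """Shannon surprise (-log2 p, in bits) of each tone in a sequence.

    p comes from add-one (Laplace) smoothed counts of the tones heard so far,
    so repeated tones become progressively less surprising, while a tone that
    has not yet occurred becomes more surprising the longer it is absent.
    """
    counts = Counter()
    surprises = []
    for n, tone in enumerate(tones):
        p = (counts[tone] + 1) / (n + len(alphabet))  # smoothed predictive probability
        surprises.append(-math.log2(p))
        counts[tone] += 1
    return surprises


# Eight "standard" tones followed by one "deviant".
tones = ["std"] * 8 + ["dev"]
for tone, s in zip(tones, sequence_surprise(tones, alphabet={"std", "dev"})):
    print(tone, round(s, 2))
```

In this sequence the deviant carries the highest surprise. A prediction-based assay would then ask whether a patient's neural responses track these values.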

Philosophical and Ethical Questions

As measurement precision improves, debates arise over where the boundaries of consciousness lie, for instance in AI systems or non-human animals. Predictive models may challenge our conceptions of subjective experience and of the rights associated with consciousness.

We must consider the ethics of interpreting prediction signals, especially when dealing with vulnerable populations or AI entities that mimic conscious behaviors.

Future Directions and Research Frontiers

Deepening Theoretical Understanding

As research progresses, integrating predictive coding with other theories, such as integrated information theory (IIT) and global workspace theory (GWT), may yield hybrid models that better characterize consciousness's multifaceted nature.

Cross-Disciplinary Collaborations

Collaborations between neuroscientists, computer scientists, philosophers, and clinicians will accelerate breakthroughs. The use of big data analytics and high-performance computing will empower these interdisciplinary teams to simulate and analyze consciousness dynamics more effectively.

Personalized Consciousness Analytics

Advancements in wearable neurotechnology will likely enable personalized monitoring of conscious states outside lab settings — a boon for mental health, sleep studies, and cognitive enhancement applications.

Conclusion

Measuring consciousness has long been a daunting puzzle, but prediction-based analysis offers a promising new framework rooted in the brain’s intrinsic nature as a predictive organ. By quantifying how the brain anticipates and reacts to its environment, researchers are unlocking deeper, dynamic insights into conscious experience.

Technological strides — particularly in AI, machine learning, neuroimaging, and brain-computer interfaces — are translating these theories into practical tools with profound clinical, ethical, and societal implications.

The future of measuring consciousness lies in prediction: not only understanding what is perceived but anticipating what it means to be aware itself. For science, medicine, and philosophy, this marks an exciting frontier where technology and human experience converge to illuminate one of the most enigmatic aspects of our existence.

References

- Friston, K. (2010). The free-energy principle: a unified brain theory? Nature Reviews Neuroscience, 11(2), 127-138.

- King, J.-R., & Dehaene, S. (2014). A model of subjective report and objective discrimination as categorical decisions in a vast representational space. Philosophical Transactions of the Royal Society B, 369(1641), 20130204.

- Claassen, J., et al. (2019). Detection of brain activation in unresponsive patients with acute brain injury. New England Journal of Medicine, 380, 2506-2514.

- Mashour, G. A., et al. (2020). Conscious processing and the global neuronal workspace hypothesis. Neuron, 105(5), 776-798.
