Network Downtime Survival Guide for Rapid Recovery

Effective strategies and tools to quickly recover from network downtime and minimize business impact.

If your business relies on digital systems, few events are more stress-inducing than network downtime. In our always-connected world, even a brief outage can disrupt operations, erode customer trust, and impact the bottom line. However, not all hope is lost—equipped with the right strategies and preparation, rapid network recovery is within your reach.

This comprehensive guide breaks down actionable steps, real-world examples, and expert analysis to help your organization bounce back fast when the network goes dark.

Understanding Network Downtime and Its Impact

Before diving into recovery tactics, it helps to grasp just how severely network downtime can affect an organization.

Network downtime occurs whenever systems lose connectivity, ranging from a few seconds of lost Wi-Fi to total data center outages lasting hours or days. Gartner has estimated the average cost of IT downtime at $5,600 per minute (which works out to about $336,000 per hour), with some enterprises facing much higher stakes.
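To make the per-minute figure concrete, here is a minimal sketch of the arithmetic. The function name and the flat-rate model are assumptions for illustration; real outage costs vary with time of day, industry, and which systems are affected.

```python
def downtime_cost(minutes: float, cost_per_minute: float = 5600.0) -> float:
    """Estimate outage cost as duration times a flat per-minute rate.

    The default rate is the Gartner average quoted above; substitute
    your own organization's figure if you have one.
    """
    if minutes < 0:
        raise ValueError("minutes must be non-negative")
    return minutes * cost_per_minute


print(downtime_cost(60))        # one hour at the default rate: 336000.0
print(downtime_cost(20, 1200))  # 20 minutes at a hypothetical lower rate: 24000.0
```

Even a rough estimate like this helps when arguing for redundancy or monitoring budget.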

Common Business Consequences Include:

- Productivity Loss: Employees cannot access essential resources, halting workflows and communication.

- Revenue Impact: E-commerce sites and payment systems stop operating, causing direct sales losses. Amazon once lost $100 million in a 12-hour outage.

- Customer Satisfaction Slump: Service interruptions erode trust and can drive customers to competitors.

- Compliance & Security Risks: SLA violations and security lapses may trigger fines or legal issues.

Understanding these risks underlines why downtime events merit an urgent, organized response.

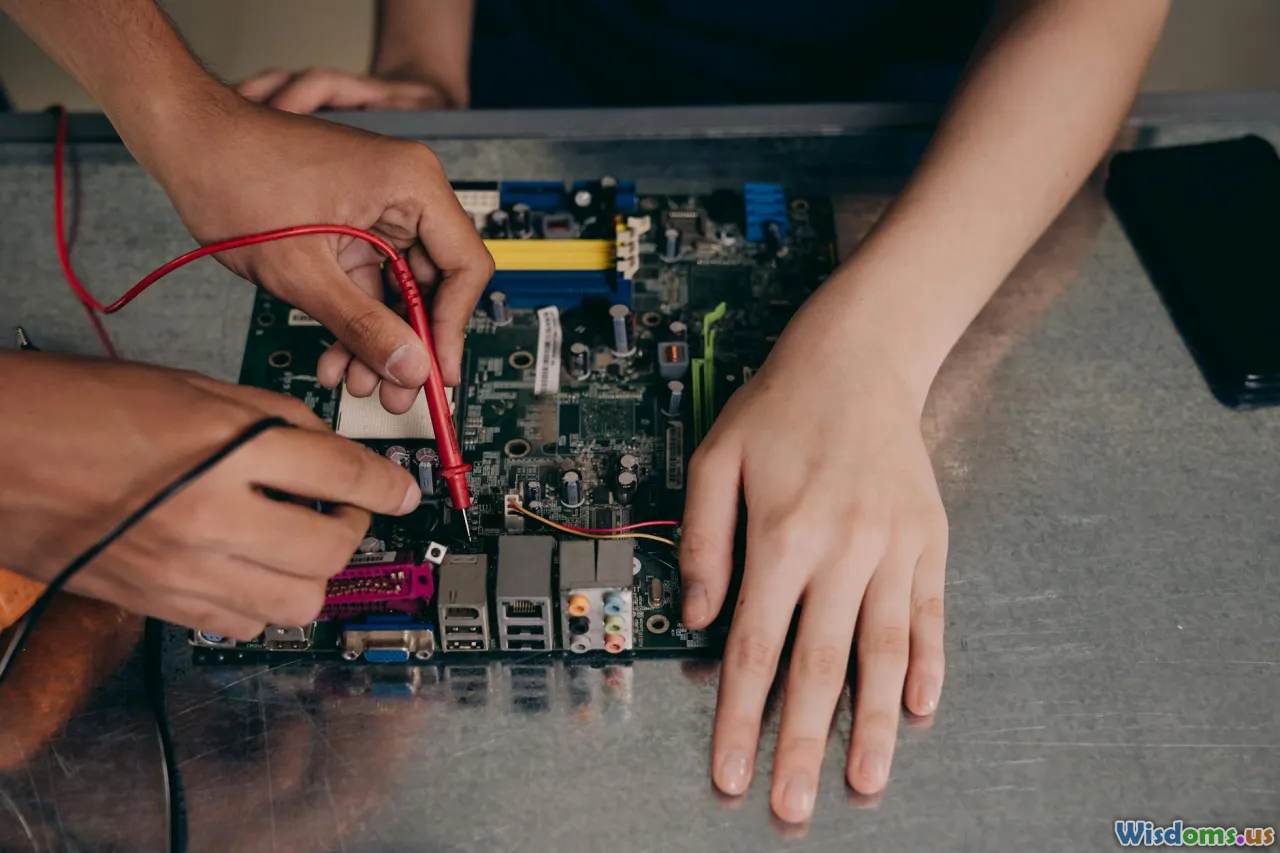

Pinpointing Root Causes: Prevention Starts with Detection

Not all network outages start the same way. Rapid recovery hinges on accurately diagnosing the source as quickly as possible. Common culprits include:

- Hardware Failures: Aging or defective switches, routers, or cabling.

- Software Updates Gone Wrong: Poorly tested patches can cause compatibility issues.

- Cyber Attacks: DDoS attacks, ransomware, or security breaches.

- Human Error: Accidental network misconfigurations or unplugged cables.

- Utility Failures: Power outages or ISP disruptions.

Tools for Early Detection

Modern organizations employ robust monitoring tools like SolarWinds, Nagios, or LogicMonitor. These platforms alert IT staff the moment there’s anomalous behavior—packet loss, high latency, or total outages—so responders can act immediately. For example, Sainsbury's, the UK retailer, averted an all-day eCommerce blackout in 2022 thanks to automated alerting when their payment gateway failed.

Actionable Advice:

- Set up network baselining. Know what normal traffic looks like for faster anomaly detection.

- Deploy intelligent alerting—ensure the right people get urgent notifications, not just routine logs.
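The two points above can be sketched together: build a baseline from known-good samples, then decide whether a new reading should page someone or just be logged. This is a simplified illustration using a z-score; the thresholds and function names are assumptions, and the commercial tools named earlier use more sophisticated models.

```python
from statistics import mean, stdev


def build_baseline(samples):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to build a baseline")
    return mean(samples), stdev(samples)


def alert_action(value, baseline, log_at=2.0, page_at=3.0):
    """Return 'page', 'log', or 'ok' based on distance from the baseline.

    log_at and page_at are z-score thresholds (hypothetical defaults).
    """
    mu, sigma = baseline
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is suspicious.
        return "ok" if value == mu else "page"
    z = abs(value - mu) / sigma
    if z >= page_at:
        return "page"
    if z >= log_at:
        return "log"
    return "ok"


# Latency samples in milliseconds collected during normal operation.
normal_latency_ms = [20, 22, 19, 21, 20, 23, 21, 20]
baseline = build_baseline(normal_latency_ms)

for reading in (21, 24, 80):
    print(reading, alert_action(reading, baseline))
```

Separating "log" from "page" is what keeps urgent notifications from drowning in routine noise.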

Building a Bulletproof Incident Response Plan

When the network fails, chaos can set in—unless everyone knows exactly what to do. A tailor-made, documented incident response plan ensures a clear path from outage to resolution.

Key Elements of an Effective Plan

- Roles and Responsibilities: Designate primary decision-makers, technical leads, and communication officers.

- Escalation Protocols: Define a hierarchy for problem severity and whom to contact at each tier. For critical systems, include third-party vendors’ emergency lines on your call list.

- Checklist-Driven Playbooks: For every anticipated failure scenario (WAN outage, malware, physical line cut), have a stepwise checklist covering:

  - Initial assessment steps

  - Communication templates (for staff and clients)

  - Temporary workarounds (e.g., failover to backup lines or cloud services)

- Practice Makes Perfect: Run tabletop or live-fire drills at least quarterly. HSBC, for example, regularly recovers from simulated mobile banking outages, which makes real downtime manageable rather than panic-inducing.
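An escalation protocol is easier to follow under pressure when it is written down as data rather than prose. The sketch below assumes a simple time-based model; the tier names, contacts, and timings are all hypothetical placeholders to be replaced with your own call list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    contacts: tuple
    notify_after_min: int  # minutes unresolved before this tier is notified


# Hypothetical escalation ladder; adjust tiers and timings to your plan.
ESCALATION = (
    Tier("tier-1", ("on-call network engineer",), 0),
    Tier("tier-2", ("technical lead", "communication officer"), 15),
    Tier("tier-3", ("IT director", "vendor emergency line"), 45),
)


def contacts_to_notify(minutes_unresolved, tiers=ESCALATION):
    """Return everyone who should have been notified by this point."""
    notified = []
    for tier in tiers:
        if minutes_unresolved >= tier.notify_after_min:
            notified.extend(tier.contacts)
    return notified


print(contacts_to_notify(0))
print(contacts_to_notify(20))
print(contacts_to_notify(60))
```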

Tip: Teams who rehearse together recover faster. Consider forming a "recovery SWAT team" equipped for end-to-end triage and resolution.

Leveraging Redundancy and Failover Solutions

No single device or pathway should be a single point of failure. Modern IT infrastructure is built on layers of redundancy designed to keep the essentials working even when major components fail.

Essential Redundancy Strategies

- Redundant Switches and Routers: Dual-homed devices that automatically take over if a primary fails.

- Multiple Internet Connections: Leverage automatic failover between ISPs with technologies like SD-WAN.

- Cloud Failover: Keep critical applications replicated in multiple geographic regions.

- Server Clustering: Use load balancers and clustered servers—if one server crashes, others seamlessly continue.

Real-World Example:

A global law firm suffered a major ISP fiber cut. Thanks to SD-WAN and backup LTE modem connections, their team stayed online with only 30 seconds of disruption instead of a multi-hour outage.

Actionable Advice: Audit your current setup for single points of failure. Invest in hardware redundancy and regular failover testing.
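One way to approach such an audit is to model the topology as a graph and ask which single device, if removed, cuts a site off from the internet. The sketch below uses a brute-force check that is fine for small networks; the device names and links are made up for illustration.

```python
from collections import deque


def build_graph(links):
    """Build an undirected adjacency map from (a, b) link pairs."""
    graph = {}
    for a, b in links:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def reachable(graph, start, removed=None):
    """Breadth-first search from start, pretending `removed` has failed."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor != removed and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return seen


def single_points_of_failure(graph, source, target):
    """List devices whose individual failure disconnects source from target."""
    return sorted(
        node
        for node in graph
        if node not in (source, target)
        and target not in reachable(graph, source, removed=node)
    )


# Hypothetical topology: two routers and two ISPs, but only one core switch.
LINKS = [
    ("office-lan", "core-switch"),
    ("core-switch", "router-a"),
    ("core-switch", "router-b"),
    ("router-a", "isp-1"),
    ("router-b", "isp-2"),
    ("isp-1", "internet"),
    ("isp-2", "internet"),
]

print(single_points_of_failure(build_graph(LINKS), "office-lan", "internet"))
# ['core-switch']
```

In this example the dual routers and ISPs are properly redundant, yet the lone core switch still takes everything down, which is the kind of gap an audit is meant to surface.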

Create an Effective Communication Plan

Communication is the linchpin of rapid recovery—not just inside your IT team, but across the wider organization, customers, and external stakeholders.

Key Steps for Successful Communications

- Internal Notifications: Rapidly alert support desks, department heads, and executives. Use preferred channels (Slack, Teams, SMS) for prompt delivery.

- External Stakeholder Updates: For major incidents, keep customers, vendors, and regulators informed. Use pre-approved email templates, status pages, or social media.

- Transparency: Be proactive about root causes, impact, and expected time to restore.

- Documentation: Keep a continuous incident timeline, documenting each decision and communication for later review or compliance needs.

Case Study:

During the infamous 2016 DDoS attack on Dyn (the DNS provider), Twitter, Reddit, and Spotify kept user trust high by pushing regular updates through their Twitter support pages. Being silent or vague compounds the harm.

Tips: Regularly update employee contact sheets and pre-draft key customer notification messages in advance.
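Pre-drafted messages can be kept as templates so that responders only fill in the blanks during an incident. Here is a minimal sketch using Python's standard `string.Template`; the field names and wording are assumptions, not a prescribed format.

```python
from string import Template

# Hypothetical pre-approved status update; adapt the wording to your policy.
STATUS_UPDATE = Template(
    "[$severity] $service disruption\n"
    "Impact: $impact\n"
    "Current status: $status\n"
    "Next update by: $next_update"
)


def render_update(**fields):
    """Fill in the template; raises KeyError if any field is missing."""
    return STATUS_UPDATE.substitute(**fields)


print(render_update(
    severity="MAJOR",
    service="Payment gateway",
    impact="Card payments are failing for some customers.",
    status="Failover to the backup provider is in progress.",
    next_update="14:30 UTC",
))
```

Using `substitute` rather than `safe_substitute` is deliberate here: a missing field fails loudly instead of sending a message with a raw placeholder in it.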

Prioritizing Services During Restoration

When systems go offline, bringing everything up simultaneously is unrealistic. Smart recovery starts by triaging services according to their criticality to ongoing operations.

How to Triage Effectively:

- List All Network-Dependent Services: (Email, VoIP, ERP, payment processing, CRM, websites, etc.)

- Rank According to Business Impact: What processes are mission-critical? For hospitals, that might mean Electronic Health Record (EHR) systems; for retailers, POS (Point of Sale) networks.

- Recovery Sequencing: Bring back high-impact or revenue-producing systems first, then lower-priority workloads.

Example:

A manufacturing company faced a ransomware incident. Their playbook prioritized restoring ICS/SCADA networks (to keep the production lines running) before less vital systems like email. This decision minimized financial loss.

Having a predefined recovery priority list reduces pressure-induced errors and ensures what matters most is addressed first.
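A predefined priority list can be as simple as a sorted table. The sketch below ranks services by business-impact tier and, as an assumed tie-breaker, brings back the quicker restores first within a tier. The services, tiers, and time estimates are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Service:
    name: str
    tier: int             # 1 = mission-critical, larger = lower priority
    restore_minutes: int  # rough estimate of time needed to restore


def recovery_order(services):
    """Sort by impact tier first, then by estimated restore time."""
    return sorted(services, key=lambda s: (s.tier, s.restore_minutes))


# Hypothetical inventory for a retailer.
SERVICES = [
    Service("email", 3, 45),
    Service("POS network", 1, 30),
    Service("ERP", 2, 60),
    Service("payment processing", 1, 15),
    Service("VoIP", 2, 20),
]

for step, service in enumerate(recovery_order(SERVICES), start=1):
    print(step, service.name)
```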

Accelerating Troubleshooting: Smart Tools and Efficient Techniques

Accurate, efficient troubleshooting can mean the difference between a 20-minute hiccup and a 6-hour meltdown. Today’s IT responders have powerful diagnostic allies—if they know how to deploy them effectively.

Must-Have Tools

- Network Mappers & Diagramming: Tools like NetBrain or Lucidchart give responders up-to-date visual maps, so recent changes and likely failure points are easy to spot.

- Packet Analyzers: Wireshark, for instance, reveals anomalies, malicious traffic, or devices spamming the network with requests.

- Remote Management: Intelligent PDUs, out-of-band console servers, and KVMs let techs reboot or configure remote equipment instantly—even amid WAN failures.

Effective Troubleshooting Workflow

- Isolate the Failure: Use traceroutes and device pings to narrow down affected segments.

- Define Scope: What’s down? Offices, cloud services, specific apps, or endpoints?

- Hypothesize & Test: Check logs for error spikes or failed connections—then attempt targeted fixes before full-scale resets.
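The "isolate the failure" step can be sketched as walking a known path from the nearest device outward and stopping at the first hop that does not answer. This example uses a TCP connection attempt as a stand-in for ping, since ICMP usually needs elevated privileges; the hop names, port, and helper functions are assumptions for illustration.

```python
import socket


def tcp_probe(host, port=22, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def first_unreachable(path, probe=tcp_probe):
    """Check hops in order (nearest first); return the first failing hop.

    Returns None when every hop responds.
    """
    for hop in path:
        if not probe(hop):
            return hop
    return None


# Simulated run: a fake probe keeps the example runnable without a network.
PATH = ["access-switch", "core-switch", "edge-router", "isp-gateway"]
down = {"edge-router", "isp-gateway"}
print(first_unreachable(PATH, probe=lambda hop: hop not in down))
# edge-router
```

Everything past the first failing hop will also look down, so the first failure is usually the place to start looking. In real use, pass hostnames or IP addresses and rely on the default `tcp_probe`, or swap in a probe that suits your devices.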

Insight:

Netflix’s famed “Chaos Monkey” deliberately terminates production instances at random to test and continually fine-tune the company’s recovery processes. Even modest IT teams can use lab-based simulations to train critical thinking and polish troubleshooting muscle memory.

Embracing Automation for Faster Network Restoration

Manual incident response can be slow and error-prone, particularly in high-pressure situations. Automation and self-healing networks are powerful accelerators for restoration.

Where Automation Helps Most

- Rapid Configuration Restoration: Scripts can reload configurations on failed routers and switches with a button press.

- Self-Healing Protocols: Modern SDN (Software Defined Networking) environments automatically reroute data around failed paths.

- Automated Patch Management: Detecting and remediating vulnerabilities automatically reduces downtime caused by delayed human intervention.

Example: Google’s data centers recover from localized router failures almost instantly thanks to BGP route automation and redundant virtual networks—no human needed for most routine problems.

Tips:

- Document and version control all network device configurations, making remote recovery straightforward.

- Use Infrastructure as Code (IaC) tools (like Ansible or Terraform) to standardize and automate repetitive restoration steps.
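Version-controlled configurations pay off most when you can quickly see how a device has drifted from its known-good state. The sketch below compares a stored "golden" config against the running one using Python's standard `difflib`. How the running config is fetched from the device is left out, and the sample configs are invented.

```python
import difflib


def config_drift(golden: str, running: str) -> list[str]:
    """Return a unified diff of running config against the golden copy.

    An empty list means the device matches its known-good configuration.
    """
    return list(
        difflib.unified_diff(
            golden.splitlines(),
            running.splitlines(),
            fromfile="golden",
            tofile="running",
            lineterm="",
        )
    )


# Hypothetical configs: someone has shut down an interface by hand.
GOLDEN = """hostname edge-1
interface Gi0/1
 ip address 10.0.0.1 255.255.255.0
"""
RUNNING = """hostname edge-1
interface Gi0/1
 ip address 10.0.0.1 255.255.255.0
 shutdown
"""

for line in config_drift(GOLDEN, RUNNING):
    print(line)
```

A check like this can run on a schedule, with tools such as Ansible handling the actual push of the golden config back to the device.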

Testing, Drills, and Real-World Lessons

Even the world’s best-laid disaster recovery plans mean little without regular testing. Teams should treat downtime drills with the same gravity as a fire evacuation drill, so that the response is automatic when real network trouble comes.

Running Effective Drills

- Simulate Different Scenarios: ISP outage, cyberattack, device failure, config errors.

- Involve All Stakeholders: Not just IT, but business owners, support teams, and even key vendors.

- Debrief and Improve: Document what didn’t work, update playbooks, and share lessons learned.

Example:

A global pharmaceutical company suffered minimal impact during the 2017 NotPetya malware outbreak. Their secret? Repeated cross-department recovery exercises that yielded reliable communication, clean data backups, and precise failover.

Action Items: Assign ownership for regular drills, keep records, and continually refine based on findings.

Post-Incident Reviews: Learning for the Next Outage

Every downtime incident offers valuable clues for resilience—as long as you capture and review them rigorously.

Conducting a Blameless Postmortem

- Gather The Facts: Timeline of events, root causes, what worked, what failed.

- Invite Honest Feedback: Encourage all involved to share observations, without fear of finger-pointing.

- Update Documentation: Refine incident playbooks and update baseline configurations/procedures.

- Track Metrics: How fast did you detect, respond, and fully restore? Set new benchmarks for next time.
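The metrics in the last point fall straight out of the incident timeline. Here is a minimal sketch, assuming four timestamps are recorded per incident; the field names are illustrative and the sample times are invented.

```python
from datetime import datetime


def incident_metrics(started, detected, responded, restored):
    """Compute detection, response, and restoration times in minutes."""
    def minutes(delta):
        return delta.total_seconds() / 60

    return {
        "time_to_detect_min": minutes(detected - started),
        "time_to_respond_min": minutes(responded - detected),
        "time_to_restore_min": minutes(restored - started),
    }


# Hypothetical incident timeline.
metrics = incident_metrics(
    started=datetime(2024, 3, 5, 9, 0),
    detected=datetime(2024, 3, 5, 9, 4),
    responded=datetime(2024, 3, 5, 9, 10),
    restored=datetime(2024, 3, 5, 9, 52),
)
print(metrics)
```

Tracking these numbers across incidents shows whether changes to monitoring and playbooks are actually shortening outages.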

Pro Example:

After a major service interruption in 2021, Slack posted a transparent (public!) postmortem. Their clear timeline and what-would-we-do-differently analysis fostered both in-house learnings and customer trust.

Tip: Schedule review meetings soon after restoration, when memories are fresh, and repeat the process periodically.

Network downtime, stressful as it can be, isn’t an if but a when. Still, by investing in layered prevention, rapid detection, bulletproof planning, and continual improvement, your organization can turn outages from disasters into manageable detours. Stay prepared, test often, and treat every incident as a stepping stone to stronger digital resilience.
