Virtualization vs Physical Servers: Which Wins for Security?

Compare virtualization and physical servers to determine which offers the best security for your organization’s IT infrastructure.

In today's digitally driven landscape, securing business infrastructure is more complex than ever. The debate between virtualization and physical servers is not merely about speed and cost; it's increasingly about minimizing risks in a world of sophisticated cyberthreats. While both platforms underpin modern IT environments, their distinct architectures shape entirely different security considerations. Making the right choice means understanding these distinctions inside and out.

Understanding the Security Landscape in Modern IT

Let's set the stage: Enterprises worldwide are shifting traditional workloads away from dedicated physical servers to versatile virtualized environments. But at the heart of every IT decision is a crucial question: Which platform best protects business-critical data?

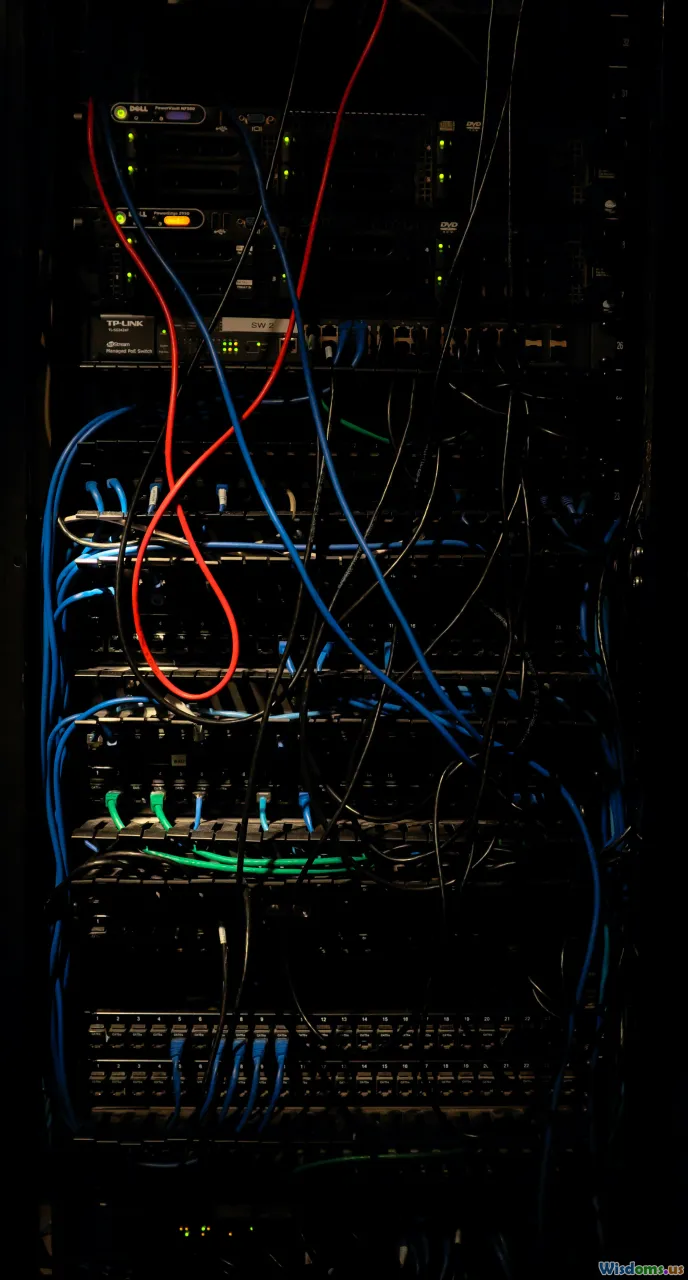

Physical servers have long been the bedrock of enterprise data centers, offering dedicated resources and isolation by design. Virtualized environments, enabled by hypervisors, let multiple virtual machines (VMs) run on shared hardware, delivering impressive flexibility and resource utilization. Each approach comes with its own strengths and its own vulnerabilities.

In 2023, for example, a report by IBM revealed that configuration errors within virtualized networks accounted for 19% of data breaches in large enterprises. Conversely, outdated firmware in isolated, older physical servers also contributed to major incidents—the legacy of a "set and forget" mindset. It's no longer enough to equate physical with safe, or virtual with exposed.

Isolation and Attack Surface: The Core Security Distinction

The primary defense any infrastructure offers is isolation. Physical servers inherently silo workloads; a compromise of one machine doesn't directly endanger others. This physical separation is a clear advantage for sectors where regulatory mandates demand air-gapped or strictly isolated systems—the financial and healthcare sectors, for instance, often opt for physical segregation for sensitive workloads. In 2022, 60% of financial institutions surveyed by Server Security Quarterly said they kept core transaction processing on physical hardware for this reason.

Virtualization, however, adds complexity. Although hypervisors (like VMware ESXi, Microsoft Hyper-V, or KVM) provide logical boundaries between VMs, the hardware is shared, and that shared layer widens the attack surface. A threat actor who compromises the hypervisor, for instance through a previously undisclosed vulnerability (a zero-day), can move from one VM to others on the same host. The VENOM vulnerability (CVE-2015-3456), disclosed in 2015, exemplified this danger: a flaw in QEMU's virtual floppy disk controller code, which affected QEMU-based platforms such as Xen and KVM, allowed attackers to escape VM isolation.

But it's not all doom for virtualization. Modern hypervisors undergo constant scrutiny and receive frequent security patches. Rigorous configuration (such as minimizing administrative access, strong network segmentation, and disabling unnecessary features) reduces exposure. The attack surface may be broader, but it can be hardened with a proactive approach.

Management and Human Error: Where Do the Greatest Risks Lie?

If attackers love anything more than vulnerable software, it’s human error. The complexity of managing dozens or even hundreds of virtualized workloads through centralized tools creates risk. One misconfigured virtual switch or inappropriate permissions assignment can expose multiple systems in one shot—an issue less likely in neatly compartmentalized physical builds.

For example, in 2021, an administrative mistake led to the misconfiguration of a critical virtual firewall at a European pharmaceutical firm. Rather than segmenting lab networks, the firewall left all VM traffic reachable, giving ransomware an open path to spread rapidly across dozens of research environments and shutting down operations for a week.

On the other hand, the distributed nature of physical servers means that manual configuration drift (inconsistent patch levels, forgotten user accounts) can add up, especially in environments lacking automated management. The 2017 WannaCry ransomware outbreak hit unpatched Windows machines, including physical servers in hospitals and manufacturing firms worldwide, even though Microsoft had released a fix (MS17-010) about two months earlier. Old-school hardware is just as susceptible to oversight as virtual machines.

Actionable advice: Whether you choose physical or virtual, invest in change management, regular audits, and automated configuration monitoring. Technology is only as strong as its stewards.
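To make "automated configuration monitoring" concrete, here is a minimal sketch of drift detection in Python. It assumes you keep an approved baseline of configuration fingerprints per host; the file paths, contents, and function names are hypothetical and not taken from any particular tool.

```python
import hashlib


def fingerprint(config_text: str) -> str:
    """Return the SHA-256 hex digest of a configuration file's contents."""
    return hashlib.sha256(config_text.encode("utf-8")).hexdigest()


def find_drift(baseline: dict, current: dict) -> dict:
    """Compare current fingerprints (path -> digest) against an approved baseline."""
    return {
        "changed": sorted(p for p in baseline if p in current and current[p] != baseline[p]),
        "missing": sorted(p for p in baseline if p not in current),
        "unexpected": sorted(p for p in current if p not in baseline),
    }


# Hypothetical data: in practice the texts would be read from each host.
baseline = {
    "/etc/ssh/sshd_config": fingerprint("PermitRootLogin no\n"),
    "/etc/ntp.conf": fingerprint("server time.example.org\n"),
}
current = {
    "/etc/ssh/sshd_config": fingerprint("PermitRootLogin yes\n"),  # someone edited this
    "/etc/ntp.conf": fingerprint("server time.example.org\n"),
    "/etc/cron.d/backup": fingerprint("0 2 * * * root /opt/backup.sh\n"),  # not in baseline
}

report = find_drift(baseline, current)
print(report)
```

The same comparison works for a physical server or a VM, which is the point: drift is a process problem, not a platform problem.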

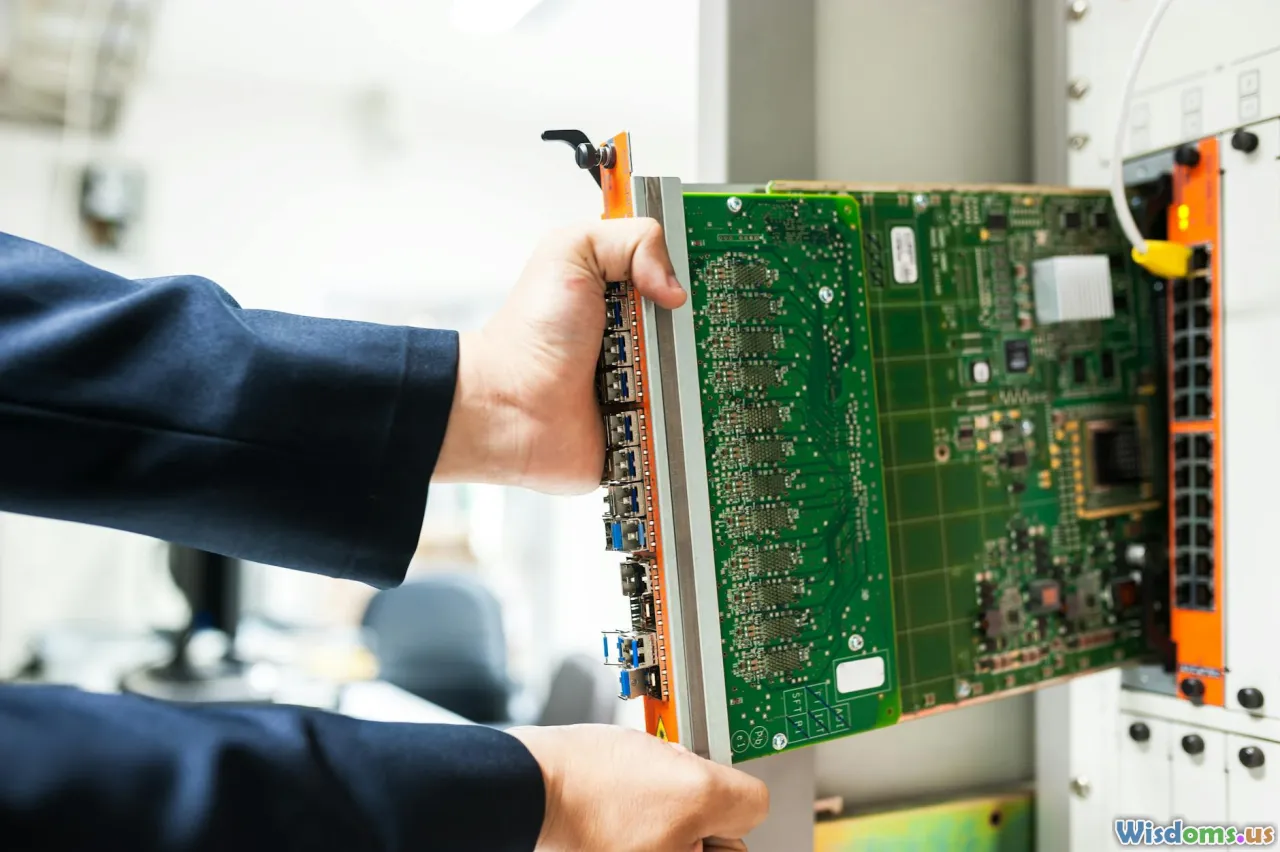

Performance, Patching, and Response Windows

When a critical vulnerability emerges, time is the enemy of security. Virtualization often comes out ahead here, thanks to the agility it affords. Quick snapshotting and cloning mean that IT teams can test patches on identical VMs before pushing them to production, reducing failure risks and downtime.

Physical servers, conversely, can't easily be snapshotted, cloned, or rolled back. Hardware updates often necessitate prolonged maintenance windows. In sectors demanding 24/7 uptime, delays in patching can be disastrous. The Spectre and Meltdown vulnerabilities, disclosed in 2018, made this plain: they affected processors across thousands of physical servers and left organizations struggling to apply fixes while maintaining service levels.

But there’s a flip side: Virtual platforms are often a tempting target for attackers seeking persistence—malware can linger in templates, snapshots, or cloned VMs unnoticed. A compromised image might repeatedly infect successive machines unless organizations thoroughly manage their image lifecycle and clean up residual data.
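One simple piece of image lifecycle management is flagging templates that have not been rebuilt and rescanned recently. The sketch below is illustrative only: the 30-day threshold, the template names, and the idea of tracking a "last rebuilt" date per image are assumptions, not a feature of any specific hypervisor.

```python
from datetime import date

MAX_IMAGE_AGE_DAYS = 30  # assumed policy; pick a value that matches your patch cadence


def stale_images(images: dict, today: date) -> list:
    """Return names of images whose last rebuild is older than the allowed age.

    `images` maps an image name to the date it was last rebuilt and scanned.
    """
    return sorted(
        name
        for name, rebuilt in images.items()
        if (today - rebuilt).days > MAX_IMAGE_AGE_DAYS
    )


# Hypothetical inventory of VM templates.
templates = {
    "web-template": date(2024, 1, 1),
    "db-template": date(2024, 2, 20),
}
stale = stale_images(templates, today=date(2024, 3, 1))
print(stale)
```

Anything on the stale list would be rebuilt from a trusted source or retired before it is cloned again.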

Best practice: Implement robust patch management for both platforms. Frequent, automated monitoring for vulnerabilities and enforcing patch application timetables can dramatically reduce risk windows.
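A patch timetable is easier to enforce once it is written down as data. The following Python sketch checks open findings against severity-based deadlines. The deadline values, host names, and finding identifiers are placeholders chosen for illustration; substitute your own policy and scanner output.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Assumed deadlines (days from publication to patched); not an industry standard.
PATCH_SLA_DAYS = {"critical": 7, "high": 30, "medium": 90}


@dataclass
class Finding:
    host: str
    finding_id: str  # placeholder identifier, e.g. from your vulnerability scanner
    severity: str
    published: date


def overdue(findings: list, today: date) -> list:
    """Return findings whose patch deadline has already passed."""
    late = []
    for f in findings:
        deadline = f.published + timedelta(days=PATCH_SLA_DAYS[f.severity])
        if today > deadline:
            late.append(f)
    return late


# Hypothetical findings covering one virtualization host and one physical server.
findings = [
    Finding("esxi-host-01", "EXAMPLE-0001", "critical", date(2024, 2, 1)),
    Finding("db-physical-02", "EXAMPLE-0002", "high", date(2024, 2, 15)),
]
late = overdue(findings, today=date(2024, 3, 1))
for f in late:
    print(f"{f.host}: {f.finding_id} ({f.severity}) is past its patch deadline")
```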

Virtualization-Specific Security Measures

To mitigate threats unique to virtualization, dedicated tools and practices have emerged. Microsegmentation, the practice of dividing VMs into secure enclaves with tightly monitored interactions, limits lateral movement in case of a breach. Products such as VMware NSX and Cisco Tetration enable this fine-grained policy enforcement.
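At its core, microsegmentation is a default-deny policy: traffic between segments is blocked unless a rule explicitly allows it. The Python sketch below models that idea in a few lines. The segment names, ports, and rule set are hypothetical, and real products express policy in their own formats rather than like this.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src_segment: str
    dst_segment: str
    port: int


# Explicit allow-list; anything not listed here is denied.
ALLOWED_FLOWS = {
    Flow("web", "app", 8443),
    Flow("app", "db", 5432),
}


def is_allowed(flow: Flow) -> bool:
    """Default deny: permit a flow only if it matches an allow rule exactly."""
    return flow in ALLOWED_FLOWS


print(is_allowed(Flow("web", "app", 8443)))  # web tier talking to app tier
print(is_allowed(Flow("web", "db", 5432)))   # web tier trying to reach the database directly
```

A compromised web VM in this model can reach the app tier on one port and nothing else, which is what keeps an intruder from wandering laterally.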

Many hypervisors also offer secure boot and integrity monitoring features, ensuring that VMs start from trusted states and that unauthorized changes are swiftly detected. For cloud-based virtual workloads, leading platforms now integrate encryption at rest for virtual disks as a baseline security standard.

Additionally, role-based access control (RBAC) within management consoles restricts administrative capabilities to only what is necessary, taming the sprawl of privileged accounts.
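As a rough illustration of RBAC, the sketch below maps users to roles and roles to permissions, then answers a single question: may this user perform this action? The role names, permission strings, and users are invented for the example and do not correspond to any management console's built-in roles.

```python
# Hypothetical roles and the permissions each one carries.
ROLE_PERMISSIONS = {
    "vm-operator": {"vm.power", "vm.console"},
    "backup-admin": {"vm.snapshot", "vm.restore"},
    "platform-admin": {"vm.power", "vm.console", "vm.snapshot", "vm.restore", "host.configure"},
}

# Hypothetical user-to-role assignments.
USER_ROLES = {
    "alice": {"vm-operator"},
    "bob": {"backup-admin"},
}


def can(user: str, permission: str) -> bool:
    """Return True if any of the user's roles grants the permission."""
    return any(
        permission in ROLE_PERMISSIONS.get(role, set())
        for role in USER_ROLES.get(user, set())
    )


print(can("alice", "vm.power"))        # operators may power VMs on and off
print(can("alice", "host.configure"))  # but may not reconfigure the host
```

Unknown users and unknown roles fall through to a denial, so a typo in an assignment fails closed rather than open.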

Real-world example: A major APAC retailer had microsegmentation in place when a phishing-driven ransomware attack hit its private cloud. The infection reached only a single VM before microsegmentation halted further movement, containing losses to a single line-of-business application.

Physical Server Security: The Value and Limits of Hardware Separation

Physical isolation, by definition, closes off many avenues of attack that exist in shared environments. When a physical server is unplugged from the network or separated by hardware firewalls, its compromise doesn't put other workloads at immediate risk. For environments subject to the highest standards (defense, government, research labs handling classified material), no amount of virtual security can match physically air-gapped machines.

That said, physical servers are susceptible to attacks leveraging social engineering and physical access. A 2022 breach at a regional U.S. hospital began when an unauthorized individual accessed a data center, connecting a rogue device to a management port of an isolated server. These attacks bypass digital controls altogether.

And when hardware is outdated or falls outside replacement cycles, it can become a silent liability—containing unpatched firmware, weak out-of-band management, or deprecated hardware security modules (HSMs). Regular audits and strict chain-of-custody for replacement hardware are non-negotiable.

Pro tip: Pair hardware-based controls (locks, surveillance, tamper detectors) with policies dictating who can access, modify, decommission, or dispose of physical assets.

Virtualization in the Cloud Era: New Security Paradigms

Public and private clouds are powered by advanced virtualization—not merely using hypervisors but often layering containerization, serverless code, and orchestration (like Kubernetes) on top. Security here is shaped as much by service provider diligence as by in-house controls.

In multi-tenant environments, risks escalate: Misconfiguring network isolation can inadvertently expose your workload data to another customer's VM on the same host. Cloud providers, aware of these stakes, have responded with dedicated protections such as hardware roots of trust, tenant isolation mechanisms built into silicon (e.g., AWS Nitro, Azure Confidential Computing), and customer-managed encryption keys.

Yet even these measures require the customer to “harden the edges.” Cloud misconfigurations (think exposed S3 buckets or overly permissive firewall rules) are a leading cause of data breaches, often dwarfing any core hypervisor weaknesses. In its 2023 Cloud Security Report, McAfee reported that 83% of cloud data exposures stemmed from customer-side configuration errors—not hypervisor exploits.

Action item: Establish a continuous compliance monitoring solution for your cloud assets and regularly review provider security attestation (SOC 2, ISO 27001) for peace of mind.
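A continuous compliance check can start very small. The Python sketch below flags firewall rules that expose sensitive ports to the entire internet. The rule format, rule names, and the list of sensitive ports are assumptions made for illustration; a real check would pull rules from your cloud provider's API.

```python
import ipaddress

# Assumed set of ports that should never be open to the world (SSH, RDP, PostgreSQL).
SENSITIVE_PORTS = {22, 3389, 5432}


def risky_rules(rules: list) -> list:
    """Return names of rules that open a sensitive port to every address."""
    flagged = []
    for rule in rules:
        network = ipaddress.ip_network(rule["source"])
        open_to_world = network.prefixlen == 0  # matches 0.0.0.0/0 and ::/0
        if open_to_world and rule["port"] in SENSITIVE_PORTS:
            flagged.append(rule["name"])
    return flagged


# Hypothetical inbound rules; 203.0.113.0/24 is a documentation-only address range.
rules = [
    {"name": "allow-ssh-any", "source": "0.0.0.0/0", "port": 22},
    {"name": "allow-https-any", "source": "0.0.0.0/0", "port": 443},
    {"name": "allow-ssh-office", "source": "203.0.113.0/24", "port": 22},
]
flagged = risky_rules(rules)
print(flagged)
```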

Cost, Compliance, and Security: Weighing Strategic Priorities

Pragmatic decisions aren't made in isolation. Choosing physical or virtual infrastructure impacts not only security but also cost, regulatory compliance, and business agility.

Virtualization usually wins on cost effectiveness, thanks to consolidated hardware, automation, and energy savings. For organizations balancing confidentiality with rapid scaling, such as fintech startups or SaaS operators, virtualization provides superior flexibility, provided security controls are mature and meticulously implemented.

However, compliance regimes such as PCI DSS, HIPAA, and GDPR complicate the picture. None of them bans virtualization outright, but auditors and internal policies sometimes steer "high assurance" zones onto physical servers, or at least demand specific controls be layered atop virtual deployments. Some organizations adopt hybrids, using physical servers for regulated workloads while virtualizing less-sensitive operations for scale and resilience.

Risk tolerance, compliance needs, and available expertise should all be part of your calculus. A 2021 survey by Gartner revealed that 74% of large enterprises now use a "security tiering" approach—deploying physical, hybrid, and virtualized assets according to threat profiling and legal exposure.
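A tiering policy like this can be written as a small decision function. The Python sketch below is one possible reading of the idea, not a standard: the two input attributes, the tier names, and the rules that connect them are all assumptions you would replace with your own threat profiling.

```python
def placement(workload: dict) -> str:
    """Suggest an infrastructure tier from a workload's sensitivity and regulatory status."""
    regulated = workload["regulated"]
    high_sensitivity = workload["sensitivity"] == "high"
    if regulated and high_sensitivity:
        return "physical"      # strictest isolation for the crown jewels
    if regulated or high_sensitivity:
        return "hybrid"        # e.g. dedicated hosts with virtualized tooling around them
    return "virtualized"       # everything else gets flexibility and scale


# Hypothetical workloads.
workloads = [
    {"name": "card-processing", "regulated": True, "sensitivity": "high"},
    {"name": "hr-portal", "regulated": True, "sensitivity": "medium"},
    {"name": "marketing-site", "regulated": False, "sensitivity": "low"},
]
tiers = {w["name"]: placement(w) for w in workloads}
print(tiers)
```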

Hardening Both Worlds: Layered Security Strategies

No infrastructure is invulnerable—but robust, multi-layered defenses mitigate risks, whether your assets are virtual or physical.

Key strategies include:

- Network segmentation: Divide and isolate sensitive workloads—using VLANs for physical, microsegmentation for virtual.

- Patch automation and monitoring: Employ tools to auto-discover assets, monitor vulnerabilities, and ensure rapid patch cycles.

- Privileged access management: Limit who can administer systems through strict RBAC and just-in-time provisioning.

- Data encryption: Secure data at rest and in transit, with keys managed off-host when possible.

- Event monitoring and rapid incident response: SIEM platforms and MDR/XDR solutions enable swift detection and containment of intruders, regardless of underlying infrastructure.
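Of the strategies above, just-in-time provisioning is perhaps the least familiar, so here is a minimal Python sketch of the idea: administrative access is granted for a fixed window and simply stops working when the window closes. The class name, the 60-minute default, and the user and system names are hypothetical.

```python
from datetime import datetime, timedelta


class JitGrants:
    """Track time-limited administrative grants per (user, system) pair."""

    def __init__(self):
        self._expiry = {}

    def grant(self, user: str, system: str, now: datetime, minutes: int = 60) -> None:
        """Give the user access to the system until `minutes` from `now`."""
        self._expiry[(user, system)] = now + timedelta(minutes=minutes)

    def is_active(self, user: str, system: str, now: datetime) -> bool:
        """Return True only while an unexpired grant exists."""
        expiry = self._expiry.get((user, system))
        return expiry is not None and now < expiry


grants = JitGrants()
start = datetime(2024, 3, 1, 9, 0)
grants.grant("alice", "hypervisor-console", now=start)

print(grants.is_active("alice", "hypervisor-console", now=start + timedelta(minutes=30)))
print(grants.is_active("alice", "hypervisor-console", now=start + timedelta(minutes=90)))
```

Because nothing is standing, there is no forgotten privileged account left behind for an attacker to find.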

Regular penetration testing—simulating both virtual and physical attack vectors—provides practical assurance. Whether it’s a hypervisor escape scenario or a physical cold-boot data extraction, red teams can reveal weaknesses before adversaries do.

So Which Wins? An Actionable Perspective

The answer isn’t clear-cut because it depends on your risks, compliance demands, and threat model. Virtualization excels in flexibility, rapid disaster recovery, and scalability. Modern hardened hypervisors, when paired with best practices, have proven trustworthy for the majority of business workloads. Physical servers remain king for absolute isolation, critical regulatory workloads, or when the stakes of shared platforms are simply too high.

Organizations are increasingly adopting a layered approach. By blending both models, assigning each to workloads by sensitivity and compliance, businesses secure their crown jewels while retaining the agility modern markets demand.

Ultimately, the "winner" comes down to alignment with your business’s unique exposure and compliance landscape. Security isn’t a destination—it’s an ever-evolving process, and both models have essential roles to play. Stay vigilant, keep learning, and tailor your security posture to the threats of tomorrow.
