How Children’s Learning Unlocks Secrets for Machine Learning

Explore how insights from children's learning - curiosity, curriculum, and play - reveal strategies to build more data-efficient, robust machine learning systems.

Toddlers topple blocks, poke at puddles, and ask a thousand questions an hour. They learn before they can explain, generalize before they can justify, and adapt with almost no labeled data. Machine learning, by contrast, often gorges on terabytes, struggles off-distribution, and needs careful supervision to avoid brittle shortcuts. What if we treated children not as miniature adults, but as model teachers for our models? Child learning is a masterclass in efficient, robust, and flexible intelligence. Understanding children’s strategies can unlock practical secrets for building systems that learn more with less, remember without overfitting, and reason beyond correlation.

Below is an exploration of how children learn and what that implies for modern machine learning—from curiosity to causality, play to planning, and imitation to intent. Each section distills a concrete insight, anchored by examples and actionable guidance you can apply in your models today.

What Child Learning Reveals About Intelligence

Children do not optimize classification accuracy. They build a world model. Infants track objects even when out of sight, look longer when gravity seems to fail, and coordinate sight, sound, and touch to predict what comes next. These are not one-off tricks; they are the substrate of intelligence: perception, prediction, action, and abstraction working as a loop.

Psychologists have shown that babies are unsurprised by consistent physics (objects persist, don’t teleport) but are riveted by violations. This bias toward discrepancy—toward learning where the model fails—is strikingly similar to how data-efficient machine learners should behave.

Key properties of child learning that ML often lacks:

- Minimal supervision: Children learn faces, words, and rules from streams of raw experience without dense labels. Parallel in ML: self-supervised learning (predict masked words, reconstruct images) reduces the need for annotation.

- Online adaptation: Children update on the fly, not in epochs. Parallel: online and continual learning with replay buffers and dynamic regularization (e.g., elastic weight consolidation).

- Structured priors: Children assume objects, causes, and agents exist. Parallel: inductive biases such as object-centric representations, causal models, and hierarchical abstractions.

- Goal-directed exploration: Children ask questions, seek novelty, and exploit social cues. Parallel: intrinsic motivation, active learning, and imitation.

The practical goal is not to emulate every developmental nuance, but to import reliable principles that improve generalization and sample-efficiency in our systems.

Curiosity as a Learning Algorithm

Infants gaze longer at surprising scenes—say, a ball passing through a solid wall—then experiment to resolve the contradiction. This is curiosity as optimization: direct compute toward high information gain.

In machine learning, this translates to intrinsic motivation. When external rewards are sparse or deceptive, intrinsic rewards keep learning alive. Approaches include:

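- Prediction error bonuses: Reward the agent in proportion to how poorly a learned model predicts what comes next. Classic methods: Intrinsic Curiosity Module (ICM), which uses forward-dynamics error in a learned feature space, and Random Network Distillation (RND), which uses the error in predicting the output of a fixed random network. RND in particular drove exploration in hard games like Montezuma’s Revenge.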

- Disagreement and novelty: Maintain an ensemble of dynamics models and reward the agent when models disagree, a proxy for epistemic uncertainty.

- Compression progress: Reward the improvement in how well the model compresses observations. The bonus fades once a pattern is fully learned or turns out to be unlearnable noise. Children likewise lose interest as surprises become predictable.

Practical tip: Bound curiosity. Unchecked, agents may chase noise. Combine intrinsic rewards with task rewards and add regularizers (e.g., penalize revisiting stochastic distractors). In robotic exploration, define safe novelty via constraints on joint torques or workspace boundaries. For vision-language models, use masked prediction difficulty or uncertainty as a curriculum signal to select harder spans over time.
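One way to bound curiosity can be sketched in a few lines. The example below assumes a small ensemble of dynamics models whose predictions are already available as plain lists; the function names, the `beta` weight, and the `cap` value are illustrative choices, not settings from any particular paper.

```python
import statistics

def disagreement_bonus(ensemble_predictions, cap=1.0):
    """Intrinsic bonus from ensemble disagreement, clipped to `cap`.

    ensemble_predictions: one predicted next-state vector per model.
    """
    # Variance across models for each state dimension, then averaged.
    per_dim = [statistics.pvariance(dim) for dim in zip(*ensemble_predictions)]
    return min(cap, sum(per_dim) / len(per_dim))

def shaped_reward(task_reward, ensemble_predictions, beta=0.1, cap=1.0):
    """Task reward plus a bounded, down-weighted curiosity bonus."""
    return task_reward + beta * disagreement_bonus(ensemble_predictions, cap)

# Hypothetical predictions from three models on a familiar state: near agreement.
agree = [[0.50, 1.00], [0.51, 1.00], [0.49, 1.00]]
# The same models on an unfamiliar state: strong disagreement, so the cap applies.
disagree = [[0.0, 3.0], [2.0, -1.0], [-2.0, 1.0]]

print(shaped_reward(0.0, agree))
print(shaped_reward(0.0, disagree))
```

The cap keeps a single surprising state from dominating the task reward. Using disagreement instead of raw prediction error is one common way to discount pure noise, since well-trained models tend to agree on the mean of an unpredictable outcome.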

Self-Supervision: Predict-the-Next Peek-a-Boo

Peek-a-boo is not just a party trick; it’s a predictive modeling game. Babies learn to anticipate faces and gestures, gradually encoding temporal patterns. They don’t get labels; they get sequences.

Self-supervised learning (SSL) operationalizes the same trick:

- Language: Predict masked tokens (BERT), next tokens (GPT). The model shapes its own labels from context.

- Vision: Contrastive learning (SimCLR) learns representations by distinguishing augmented views of the same image from views of other images; masked autoencoders (MAE) learn them by reconstructing hidden patches.

- Multimodal: CLIP aligns images with text via contrastive objectives, learning robust, transferable embeddings without manual labeling of categories.

What children show us:

- Learning from continuity: Context is a teacher. In videos, temporally close frames should be similar; use temporal contrastive losses.

- Anticipating consequences: Predict actions or future sensory inputs (predictive coding). World models like Dreamer learn latent dynamics by predicting future embeddings.

- Weak signals accumulate: Minor predictive tasks stacked over time (e.g., masked patches, audio denoising) yield strong features that transfer to downstream tasks.

How-to guide: If you’re starved for labels, start with SSL pretraining. Choose a pretext task aligned with your downstream modality (e.g., masked language modeling for text, masked autoencoding or contrastive augmentations for images). Then fine-tune minimally. This mirrors how children first learn general patterns before specializing.
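To make "the model shapes its own labels" concrete, here is a minimal sketch of a masked-prediction pretext task over a token list. It is deliberately simplified: every selected token is replaced by a mask symbol, whereas BERT-style recipes also keep or randomly swap some selected tokens. The `MASK` string and the function name are placeholders.

```python
import random

MASK = "[MASK]"  # placeholder symbol; real tokenizers define their own

def make_masked_example(tokens, mask_prob=0.15, rng=None):
    """Turn a raw token sequence into (inputs, labels) with no human annotation.

    labels[i] holds the original token where inputs[i] was masked, else None.
    """
    rng = rng or random.Random()
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)   # the hidden token becomes the training target
        else:
            inputs.append(tok)
            labels.append(None)  # no loss is computed at unmasked positions
    return inputs, labels

sentence = "the ball rolled behind the box".split()
inputs, labels = make_masked_example(sentence, mask_prob=0.3, rng=random.Random(0))
print(inputs)
print(labels)
```

The supervision comes entirely from the sequence itself, which is the peek-a-boo idea: hide part of the input and ask the learner to anticipate it.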

Active Learning and Joint Attention

By 9–12 months, infants follow a caregiver’s gaze and respond to pointing; by 18 months, they point and vocalize to request information. Developmental studies (e.g., Tomasello’s work on joint attention) show that social cues drastically accelerate word learning and concept formation. The child does not passively absorb; they sample the world selectively.

Active learning implements the same idea:

- Query strategies: Uncertainty sampling, expected error reduction, expected model change—ask for labels where they matter most.

- Teaching with hints: Provide sparse, high-value supervision (e.g., bounding boxes instead of full masks, or labels for prototypical samples) to guide inductive biases efficiently.

- Interface design: Build annotation tools that surface high-impact, high-uncertainty items to human experts. Tight feedback loops can sharply reduce the number of labels needed.

Example: Training a medical image classifier with limited radiologist time. Use an active learner to select scans whose predicted posterior is most uncertain or where ensemble disagreement is high. Add a few expert labels each cycle, retrain, and repeat. This mimics a child asking focused questions.
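The selection step in that loop can be sketched as plain uncertainty sampling. The scan ids and probabilities below are made up for illustration, and predictive entropy is only one of several reasonable uncertainty scores.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_queries(predictions, k):
    """Return the ids of the k items whose predictions are most uncertain."""
    ranked = sorted(
        predictions,
        key=lambda item_id: entropy(predictions[item_id]),
        reverse=True,
    )
    return ranked[:k]

# Hypothetical model outputs for four unlabeled scans: [p(benign), p(malignant)].
predictions = {
    "scan_a": [0.98, 0.02],
    "scan_b": [0.55, 0.45],
    "scan_c": [0.80, 0.20],
    "scan_d": [0.50, 0.50],
}
to_label = select_queries(predictions, k=2)
print(to_label)
```

Each cycle, the selected scans go to the expert, the new labels join the training set, and the ranking is recomputed with the retrained model.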

Tip for practitioners: Combine joint attention with model introspection. Show saliency maps or exemplar neighbors to the expert when querying a label. Experts may revise a label, or flag a model error, once they see what the model is “looking at,” analogous to a child checking that adult and child share a common reference.

Fast Mapping and Few-Shot Learning

Children can learn a new word from a single exposure, a phenomenon called fast mapping. In one study, a child who knows words for familiar objects hears, “Can you hand me the dax?” With two objects present, the child infers that the unfamiliar object is “dax.” This inference exploits mutual exclusivity: assume each object has one label. Another powerful bias is the shape bias: children map nouns to object shapes more than to color or texture.

Machine learning parallels:

- Metric-learning and prototypes: Few-shot learners like Prototypical Networks represent each class by a prototype in embedding space. With one example (one-shot), the system can classify by nearest prototype.

- Meta-learning: Algorithms such as MAML, Reptile, and Proto-MAML learn to learn—initializing parameters so the model can adapt to new tasks with a handful of gradient steps.

- Bayesian priors: Encode mutual exclusivity by penalizing reused labels or encouraging diversity in label assignment for novel clusters.

Practical recipe for quick gains:

- Pretrain an embedding with SSL or supervised multi-class data.

- Fit a simple classifier (nearest neighbor or prototypes) over few-shot supports.

- Add structure: if the domain is objects, bias the embedding to be shape-aware (e.g., train with augmentation that preserves shape but changes color/texture).

- For language grounding, use cross-situational constraints—tie words to visual clusters via weak co-occurrence signals.

This mirrors children: rich prior, constrained inference, and sample-efficient generalization.
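The second step of the recipe, a prototype classifier over a few support examples, fits in a few lines. The two-dimensional embeddings below are invented stand-ins for the output of a pretrained encoder, and Euclidean distance is an assumption; cosine distance is a common alternative.

```python
import math

def mean_vector(vectors):
    """Element-wise mean of equally sized vectors."""
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]

def build_prototypes(support):
    """One prototype per class: the mean embedding of its support examples."""
    return {label: mean_vector(vecs) for label, vecs in support.items()}

def classify(query, prototypes):
    """Assign the label of the nearest prototype (Euclidean distance)."""
    return min(prototypes, key=lambda label: math.dist(query, prototypes[label]))

# Hypothetical embeddings: one example of the novel "dax", two of "ball".
support = {
    "dax": [[0.9, 0.1]],
    "ball": [[0.1, 0.8], [0.2, 0.9]],
}
prototypes = build_prototypes(support)
print(classify([0.8, 0.2], prototypes))
print(classify([0.2, 0.7], prototypes))
```

With a single support example the prototype is just that example, which is the one-shot case described above. All the heavy lifting sits in the embedding, which is why the pretraining step matters.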

Play, Exploration, and Safe Risk

Play looks purposeless but is an engine for learning. Children test affordances: What can be squeezed, rolled, stacked? They overgeneralize then refine.

In reinforcement learning, play corresponds to exploring state-action space beyond immediate rewards:

- Count-based or density bonuses encourage visiting novel states.

- Entropy regularization in policy gradients (e.g., PPO with entropy) keeps the agent from collapsing early into suboptimal habits.

- Parameter-space noise (e.g., NoisyNets) perturbs the network’s weights rather than its actions, so exploration comes from trying different policies instead of adding per-step action jitter.
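As an illustration of the first item, a count-based bonus for discrete states can be written as below. The `1 / sqrt(N)` form is a common choice rather than the only one, and the class assumes states are hashable; large or continuous state spaces need density models or hashing instead.

```python
from collections import Counter
import math

class CountBonus:
    """Exploration bonus that shrinks as a state is revisited."""

    def __init__(self, scale=1.0):
        self.counts = Counter()
        self.scale = scale

    def __call__(self, state):
        # Record the visit, then pay scale / sqrt(number of visits so far).
        self.counts[state] += 1
        return self.scale / math.sqrt(self.counts[state])

bonus = CountBonus()
for state in ["room_1", "room_1", "room_1", "room_1", "room_2"]:
    print(state, bonus(state))
```

The bonus is added to the environment reward during training, so a room the agent has seen four times pays half as much as one it has just discovered.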

But children are not reckless. They take safe risks under supervision. Similarly, we should bound exploration with safety constraints:

- Risk-sensitive RL: Use constraints (CVaR, Lagrangian methods) to cap downside risk while exploring.

- Shielding and fail-safes: In robotics, combine learned policies with classical safety monitors (e.g., barrier functions).

- Curriculum of hazards: Gradually introduce complexity and stochasticity, like a playground with increasing challenge.

A practical pattern: Sandbox pretraining. Let an agent learn dynamics and affordances in a simulated playground (procedurally generated tasks, random textures), then transfer to real tasks with limited fine-tuning. This reduces catastrophic failures and mimics the way kids master dexterity before handling delicate tools.

Causality: The Blicket Detector and Counterfactuals

In Alison Gopnik’s classic “blicket detector” experiments, children learn which blocks trigger a machine to light up and play music. By observing combinations and interventions—placing blocks on the machine—they infer causal structure: which blocks are causal, when effects combine, and when one blocks another.

This is more than association. Children reason with counterfactuals: If I had put only the red block, would it light? They learn stable causal relations that transfer across contexts.

Machine learning needs the same upgrade:

- Causal discovery: Algorithms like PC, GES, and NOTEARS infer causal graphs from data under assumptions. While imperfect, they lay foundations for intervention planning.

- Invariant learning: Methods such as IRM (Invariant Risk Minimization) aim to learn features predictive across environments, not just within one dataset. Children generalize because causes are stable; spurious correlations are not.

- Counterfactual reasoning: Structural causal models allow models to answer “what if?” questions, crucial for fairness and reliability.
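The blicket setup can be written as a toy structural causal model. This sketch assumes the simplest possible rule, that the machine activates whenever at least one blicket is on it, and treats each candidate set of blickets as a hypothesis to be tested against interventions. Real experiments also study noisy and conjunctive rules, which this ignores.

```python
from itertools import combinations

def lights_up(blocks_on_machine, blickets):
    """Structural equation (assumed): any blicket on the machine activates it."""
    return any(block in blickets for block in blocks_on_machine)

def consistent_hypotheses(blocks, trials):
    """Keep every candidate set of blickets that explains all observed trials."""
    hypotheses = [
        set(combo)
        for size in range(len(blocks) + 1)
        for combo in combinations(blocks, size)
    ]
    return [
        h for h in hypotheses
        if all(lights_up(placed, h) == outcome for placed, outcome in trials)
    ]

blocks = ["red", "blue"]
# Two interventions: both blocks activate the machine; blue alone does not.
trials = [({"red", "blue"}, True), ({"blue"}, False)]
survivors = consistent_hypotheses(blocks, trials)
print(survivors)

# "What if" query the child never observed: red alone on the machine.
print(lights_up({"red"}, survivors[0]))
```

Two interventions are enough to eliminate three of the four hypotheses, and the surviving model answers a question that was never directly observed.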

Applied example: A click-through prediction model may learn that a particular background color correlates with clicks (spurious). Vary background across environments during training and enforce invariance to background features; the model is then pushed toward content, the causal signal.

How-to: If you can intervene, do it. Randomize nuisance factors (lighting, fonts, backgrounds), collect data from diverse environments, and evaluate with domain shifts. When intervention isn’t possible, simulate shifts via augmentation and use techniques like group DRO to minimize worst-case risk.
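The quantity that worst-case methods care about is easy to compute and worth logging even if you never train with it. The sketch below only evaluates per-group and worst-group risk; group DRO itself additionally reweights groups during training, which is not shown. Group names and loss values are invented.

```python
from collections import defaultdict

def group_risks(losses, groups):
    """Average loss per group (e.g., per environment or nuisance setting)."""
    totals, counts = defaultdict(float), defaultdict(int)
    for loss, group in zip(losses, groups):
        totals[group] += loss
        counts[group] += 1
    return {group: totals[group] / counts[group] for group in totals}

def worst_group_risk(losses, groups):
    """Return (group, risk) for the group with the highest average loss."""
    risks = group_risks(losses, groups)
    worst = max(risks, key=risks.get)
    return worst, risks[worst]

# Hypothetical per-example losses, tagged with the background they were shown on.
losses = [0.2, 0.4, 1.0, 2.0]
groups = ["white_bg", "white_bg", "dark_bg", "dark_bg"]

print(sum(losses) / len(losses))
print(worst_group_risk(losses, groups))
```

Here the average loss looks moderate while one background is served far worse, which is exactly the gap that a pooled metric hides.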

Compositionality: Building with Blocks, Not Blobs

Children combine words and actions into larger structures: “Where did the red ball go after I moved the box?” They understand objects, attributes, relations, and operations—and they compose them. Benchmarks like SCAN and gSCAN show that many ML models struggle with compositional generalization: they memorize seen templates but fail on new combinations.

Paths forward:

- Modular architectures: Neural Module Networks wire modules for attributes (red), relations (left of), and operations (count). The system composes subskills, improving systematic generalization.

- Object-centric representations: Slot Attention and related methods parse scenes into object slots, enabling combinatorial recombination of entities.

- Program induction: Systems like DreamCoder learn libraries of reusable functions, mirroring how children chunk and reuse procedures.

- Concept bottlenecks: First predict interpretable concepts (color, shape), then predict targets. This enforces explicit compositional structure.

Practical tip: Bake in structure that mirrors the world’s compositionality. For vision-language tasks, train models to answer questions via explicit object features and relational reasoning (e.g., using scene graphs). For robotics, decompose tasks into reusable skills (grasp, rotate, place) composed via high-level policies.
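A purely symbolic sketch shows what composition buys. The three functions below stand in for learned modules (attribute filter, spatial relation, counting), and the scene is a hand-written list of object records rather than the output of a perception model; all names are illustrative.

```python
def filter_attr(objects, key, value):
    """Attribute module: keep objects whose attribute matches."""
    return [obj for obj in objects if obj[key] == value]

def left_of(objects, anchor):
    """Relation module: keep objects to the left of the anchor object."""
    return [obj for obj in objects if obj["x"] < anchor["x"]]

def count(objects):
    """Operation module: count what is left."""
    return len(objects)

scene = [
    {"color": "red", "shape": "ball", "x": 1},
    {"color": "blue", "shape": "box", "x": 3},
    {"color": "red", "shape": "box", "x": 5},
    {"color": "green", "shape": "ball", "x": 0},
]

# "How many balls are left of the blue box?"
blue_box = filter_attr(filter_attr(scene, "color", "blue"), "shape", "box")[0]
answer = count(filter_attr(left_of(scene, blue_box), "shape", "ball"))
print(answer)

# A combination never written down before reuses the same parts unchanged:
# "How many red boxes are left of the blue box?"
print(count(filter_attr(filter_attr(left_of(scene, blue_box), "color", "red"), "shape", "box")))
```

New questions are answered by rewiring existing modules, not by retraining, which is the property that template-memorizing models lack.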

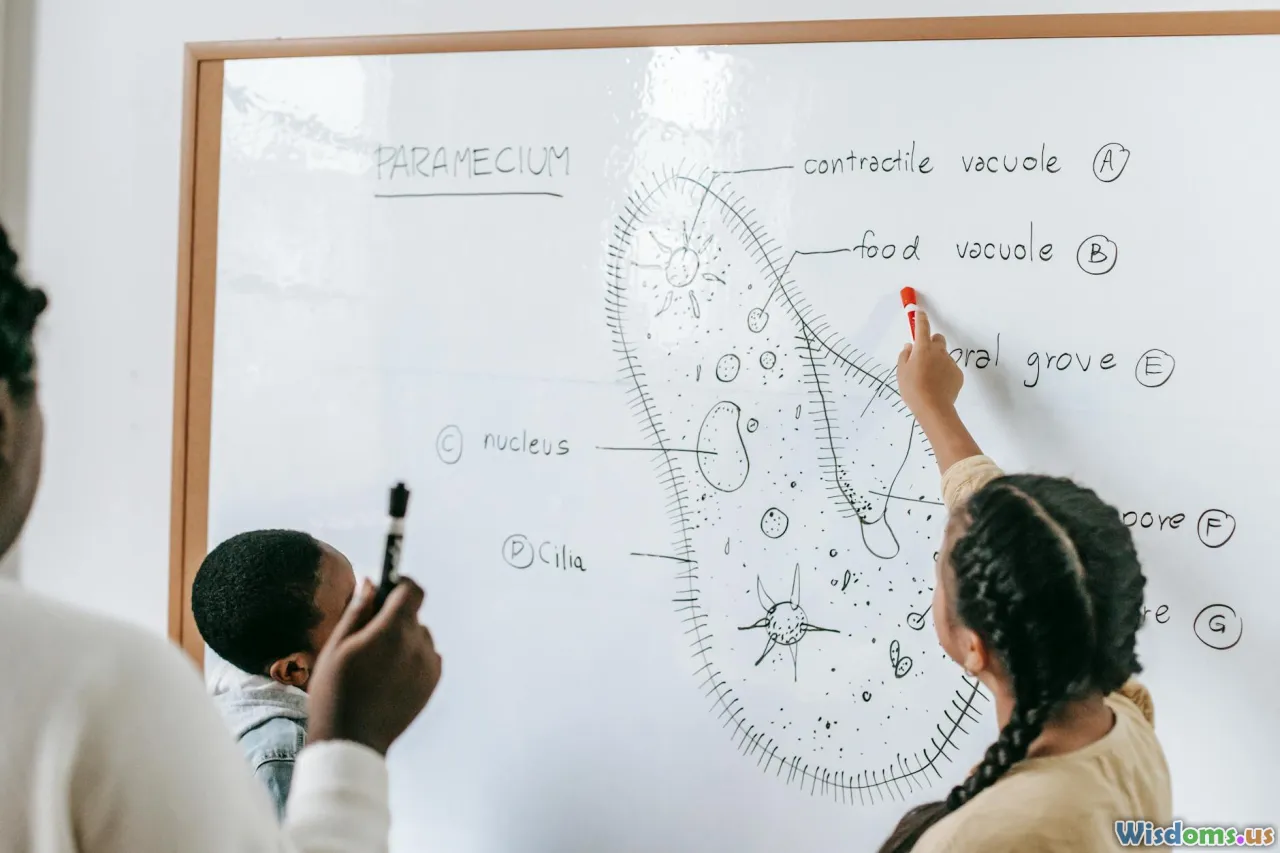

Curriculum and Scaffolding: Teaching Machines Like Tutors

Lev Vygotsky’s “zone of proximal development” (ZPD) suggests learners thrive with tasks just beyond their current mastery, aided by scaffolding. Good teachers sequence examples, feedback, and hints—raising difficulty as competence rises.

In ML, curriculum learning accelerates convergence and boosts stability:

- Example ordering: Start with easy examples, then gradually increase difficulty. For NLP, begin with short sentences and common vocabulary. For RL, start with shorter horizons or shaped rewards.

- Automated curriculum: Algorithms like ALP-GMM or teacher-student methods adapt difficulty based on learner progress. PAIRED adversarial curricula generate tasks that are challenging but solvable.

- Scaffolds: Hints like partial labels, demonstrations, subgoal markers, or constrained action spaces act as training wheels.

Implementation plan:

- Define a difficulty metric (e.g., entropy of model predictions, time-to-success in RL).

- Maintain a task pool with varied difficulty. Sample tasks near the ZPD frontier.

- Provide temporary scaffolds (demonstrations, subgoals). Gradually remove them (scheduled ablation).

- Monitor transfer by periodically testing on harder, unseen tasks to ensure genuine competence.
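The second step, sampling near the ZPD frontier, might look like the following. Success rate is used as the difficulty signal, and the 0.3 to 0.7 band is an arbitrary illustrative threshold; methods such as ALP-GMM use learning progress rather than a fixed band.

```python
import random

def zpd_tasks(success_rates, low=0.3, high=0.7):
    """Tasks that are neither mastered nor hopeless for the current learner."""
    return [task for task, rate in success_rates.items() if low <= rate <= high]

def sample_task(success_rates, rng, low=0.3, high=0.7):
    """Sample from the frontier; fall back to the task nearest the band's midpoint."""
    frontier = zpd_tasks(success_rates, low, high)
    if not frontier:
        midpoint = (low + high) / 2
        return min(success_rates, key=lambda t: abs(success_rates[t] - midpoint))
    return rng.choice(frontier)

# Hypothetical rolling success rates for a learner partway through training.
success_rates = {
    "trace_letter": 0.95,
    "copy_word": 0.60,
    "write_sentence": 0.40,
    "write_story": 0.05,
}
print(zpd_tasks(success_rates))
print(sample_task(success_rates, random.Random(0)))
```

As success rates are updated after each episode, mastered tasks drop out of the band and harder ones enter it, so the curriculum advances without a hand-written schedule.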

This strategy transforms brittle learners into resilient ones, just like moving a child from tracing letters to composing stories.

Multimodal Integration: Seeing, Hearing, Touching

Children align sounds with moving lips, recognize an object by feel, and connect words to visuals. They are natural multimodal learners.

Modern multimodal ML mirrors this through:

- Contrastive alignment: CLIP and similar models pair images and text, learning shared spaces where semantics align across modalities.

- Temporal grounding: Video-language models align narration with visual events, enabling instruction following.

- Sensor fusion: In robotics, combining vision, proprioception, and touch improves manipulation reliability under occlusions.

Concrete design tips:

- Keep each modality honest: During training, hide one modality sometimes and predict it from others. This builds redundancy and robustness.

- Synchronize carefully: Align streams temporally; small misalignments degrade performance. Use cross-attention to fuse features.

- Exploit weak labels: Captions, subtitles, and audio transcripts are abundant. Use them as implicit supervision.
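The first tip, occasionally hiding a modality and predicting it from the rest, can be sketched as a data-loading step. The sample layout, the modality names, and `drop_prob` are assumptions for illustration; the model that consumes these pairs is not shown.

```python
import random

def drop_one_modality(sample, drop_prob=0.3, rng=None):
    """With probability drop_prob, hide one modality and make it the target.

    Returns (inputs, target), where target is (name, value) or None.
    """
    rng = rng or random.Random()
    if rng.random() >= drop_prob:
        return dict(sample), None          # keep every modality this time
    hidden = rng.choice(sorted(sample))    # pick which stream to hide
    inputs = {name: value for name, value in sample.items() if name != hidden}
    return inputs, (hidden, sample[hidden])

# Hypothetical pre-extracted features for one time step.
sample = {"vision": [0.2, 0.7], "audio": [0.1], "touch": [0.9, 0.3]}
inputs, target = drop_one_modality(sample, drop_prob=1.0, rng=random.Random(0))
print(sorted(inputs), target)
```

When a target is returned, an auxiliary loss asks the model to reconstruct the hidden stream from the remaining ones, which discourages it from leaning on a single sense.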

An example pipeline: Pretrain an image-text encoder with web-scale data (contrastive loss). For a robot, add a small tactile encoder trained to predict slippage events. Fuse them via cross-attention in a policy network that follows language commands to manipulate objects. The result is a system that can “feel” when to adjust grip, “see” where to move, and “understand” what to do.

Memory, Sleep, and Replay

Children nap—and remember better afterward. Neuroscience shows that sleep supports consolidation: hippocampal replay of recent experiences strengthens cortical representations. Children’s memories stabilize and generalize across contexts after rest.

ML equivalents:

- Experience replay: DQN’s replay buffer and prioritized replay sample past transitions to stabilize learning.

- Offline RL: Learn from logged datasets without interacting, akin to “sleep-learning.”

- World models and imagination: Dyna-style methods and Dreamer roll out trajectories in latent space—mental simulation for practice without real-world risk.

- Continual learning: Prevent catastrophic forgetting with replay (real or generative), regularization (EWC, SI), or modularization.

Practical advice:

- Schedule pauses: Insert “sleep phases” where you train only the model’s predictive head on stored representations or perform self-distillation. This consolidates without overfitting to fresh noise.

- Keep diverse memory: Use reservoir sampling or cluster-aware buffers to retain rare but informative experiences.

- Balance plasticity and stability: Adjust learning rates by layer; keep low-level perceptual layers stable while allowing high-level decision layers to adapt quickly.
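For the "keep diverse memory" tip, reservoir sampling gives a fixed-size buffer in which every item seen so far has the same chance of being retained. The class below is a minimal sketch of the standard algorithm; cluster-aware variants that protect rare experiences are not shown.

```python
import random

class ReservoirBuffer:
    """Fixed-size memory holding a uniform random sample of a stream."""

    def __init__(self, capacity, rng=None):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = rng or random.Random()

    def add(self, item):
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)        # fill phase: keep everything
        else:
            # Keep the new item with probability capacity / seen,
            # overwriting a uniformly chosen slot.
            slot = self.rng.randrange(self.seen)
            if slot < self.capacity:
                self.items[slot] = item

buffer = ReservoirBuffer(capacity=5, rng=random.Random(0))
for step in range(1000):
    buffer.add(step)
print(buffer.items)
```

Unlike a sliding window, the buffer does not forget early experience wholesale, which makes it a reasonable default for replay during "sleep phases."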

Social Learning, Imitation, and Intent

Children copy, but not blindly. They imitate actions when those actions plausibly lead to goals, and sometimes they skip irrelevant steps—“rational imitation.” Yet, in certain contexts, they overimitate, copying even causally irrelevant gestures to match social norms.

In ML, imitation learning (IL) and inverse reinforcement learning (IRL) capture these dynamics:

- Behavior cloning (BC): Learn a policy by supervised learning on expert trajectories. Fast, but prone to covariate shift.

- DAgger and friends: Aggregate data by querying the expert for corrections when the learner visits novel states.

- IRL: Infer the reward function that explains expert behavior, then optimize it. Because a reward describes the goal, not the exact motions, this can generalize better under distribution shift than copying actions.

- Preference learning and RL from human feedback (RLHF): Learn rewards directly from human comparisons rather than demonstrations, capturing intent rather than rote behavior.

Application tip: Layer social signals. Combine a small number of demonstrations (for basic competence) with preference feedback (to align behavior with intent), plus explicit constraints (safety). In robotics, ask humans to label which of two grasps is more “confident” or “gentle” to shape nuanced objectives that are hard to encode numerically.
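Pairwise preference labels are usually turned into a training signal with a Bradley-Terry style model, where the probability that a human prefers A over B is a sigmoid of the reward difference. The sketch below shows only that loss for scalar rewards; the reward model producing those scalars, and the grasp example values, are assumed.

```python
import math

def preference_prob(reward_a, reward_b):
    """Bradley-Terry probability that A is preferred over B."""
    return 1.0 / (1.0 + math.exp(reward_b - reward_a))

def preference_loss(reward_a, reward_b, human_prefers_a):
    """Negative log-likelihood of the human's choice under the reward model."""
    p_a = preference_prob(reward_a, reward_b)
    return -math.log(p_a if human_prefers_a else 1.0 - p_a)

# Hypothetical reward-model scores for two grasp clips; the human chose clip A.
print(preference_loss(2.0, 0.0, human_prefers_a=True))   # model agrees: small loss
print(preference_loss(0.0, 2.0, human_prefers_a=True))   # model disagrees: large loss
```

Minimizing this loss over many comparisons pushes the reward model to rank clips the way people do, without anyone having to write down what "gentle" means numerically.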

Errors, Biases, and Robustness

Children say “goed” instead of “went.” They overgeneralize a rule, then correct it with more exposure. They also fall for optical and cognitive illusions. These missteps are not failures of intelligence—they are milestones of learning rules and testing boundaries.

For ML systems, the lesson is twofold:

- Error patterns reveal structure: Systematic mistakes indicate the model’s current hypothesis class. Use error analysis to adjust inductive biases.

- Robustness is learned: Models need to face perturbations, shifts, and adversarial settings to become reliable.

Actionable strategies:

- Calibrate uncertainty: Use temperature scaling, ensembles, or Bayesian approximations. Children “know when they don’t know”; models should, too.

- Domain randomization: Vary textures, lighting, and layouts in training to reduce overfitting to spurious cues.

- Adversarial training-lite: Include small, plausible perturbations (viewpoint changes, color shifts) to immunize against common failures without full adversarial budgets.

- Label noise handling: Children can ignore inconsistent testimony if it conflicts with most of their experiences. Adopt robust losses or label smoothing to tolerate noise.
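Before fixing calibration you need to measure it. A common summary is expected calibration error (ECE), which compares stated confidence with observed accuracy inside confidence bins. The sketch below uses equal-width bins and toy inputs; the bin count of 10 is a convention, not a requirement.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between confidence and accuracy across bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, hit in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, hit))

    total = len(confidences)
    ece = 0.0
    for bucket in bins:
        if bucket:
            avg_conf = sum(conf for conf, _ in bucket) / len(bucket)
            accuracy = sum(hit for _, hit in bucket) / len(bucket)
            ece += len(bucket) / total * abs(avg_conf - accuracy)
    return ece

# Toy case: the model claims 90% confidence but is right only half the time.
print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 0, 0]))
```

A model that “knows when it doesn’t know” drives this number toward zero; temperature scaling is typically tuned on a held-out set to reduce it.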

Evaluate with targeted tests: If you train a vision system on cats vs. dogs, also test on sketches, stuffed animals, and partial occlusions. For language models, test paraphrases and dialects, not just curated datasets.

Developmental Benchmarks for Machine Learning

To borrow from child learning seriously, evaluate like a developmental scientist. Instead of measuring only final accuracy on a fixed test set, probe for generalization, causal inference, and compositionality.

Useful benchmarks and ideas:

- CLEVR: Tests compositional visual reasoning with controlled scenes. Good for measuring attribute and relation understanding.

- ARC (Abstraction and Reasoning Corpus): Assesses few-shot program-like generalization over grid tasks.

- SCAN and gSCAN: Evaluate compositional generalization in command following. SCAN maps text commands to action sequences; gSCAN grounds the commands in a grid world.

- IntPhys: Probes intuitive physics understanding by presenting expected vs. impossible events in videos.

- BabyAI: Curriculum-based instruction-following in grid worlds, inspired by how children learn language and tasks.

- Procgen: Measures generalization across procedurally generated levels rather than memorized maps.

- BabyLM Challenge: Focuses on language learning from smaller human-scale corpora, emphasizing efficiency and cognitive plausibility.

Design your own developmental probes:

- Violation-of-expectation tests: Create scenarios where spurious patterns fail; check if the model registers surprise (higher loss, uncertainty) and adapts.

- Few-shot transfer: Withhold compositions during training, then test on new combinations with minimal labeled support.

- Intervention sensitivity: Evaluate how predictions change when you manipulate causal factors.
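A violation-of-expectation probe reduces to a paired comparison: for each matched pair of clips, does the model assign more surprise to the impossible one? The sketch assumes you already have a scalar surprise value per clip (for example, prediction loss); the numbers below are invented.

```python
def voe_score(pairs):
    """Fraction of matched pairs where the impossible event is more surprising.

    pairs: (surprise_on_possible, surprise_on_impossible) for each matched pair.
    """
    hits = sum(1 for possible, impossible in pairs if impossible > possible)
    return hits / len(pairs)

# Hypothetical surprise values (e.g., prediction loss) for four matched pairs.
pairs = [(0.8, 2.1), (1.1, 0.9), (0.5, 1.7), (0.7, 1.2)]
print(voe_score(pairs))
```

A score near 0.5 means the model cannot tell possible from impossible events; matching the clips in each pair on everything except the violation keeps low-level visual differences from inflating the result.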

Designing Child-Inspired ML: A Practical Checklist

Turn insights into a concrete plan. Use the following checklist to build child-inspired systems:

- Data and Objectives:

  - Start with self-supervision. Use masked prediction, contrastive learning, or autoencoding on raw streams.

  - Define intrinsic objectives (curiosity bonuses, compression progress) to bootstrap exploration.

- Architecture and Priors:

  - Adopt object-centric or modular components when tasks are compositional.

  - Include causal inductive biases where possible (e.g., structured graphs, invariant features).

- Learning Process:

  - Sequence a curriculum from easy to hard tasks. Automate difficulty using learner progress.

  - Interleave “sleep phases” (offline replay, self-distillation) to consolidate.

  - Use active learning to prioritize human labeling effort.

- Social and Safety Layers:

  - Pair demonstrations with preference feedback to capture intent.

  - Add constraints and monitors to bound exploration.

- Evaluation:

  - Measure calibration and OOD performance, not just in-domain accuracy.

  - Run developmental probes: compositional generalization, intervention sensitivity, and violation-of-expectation tests.

Each checklist item maps to a child-like capability: curiosity, play, scaffolding, social learning, and robustness under change.

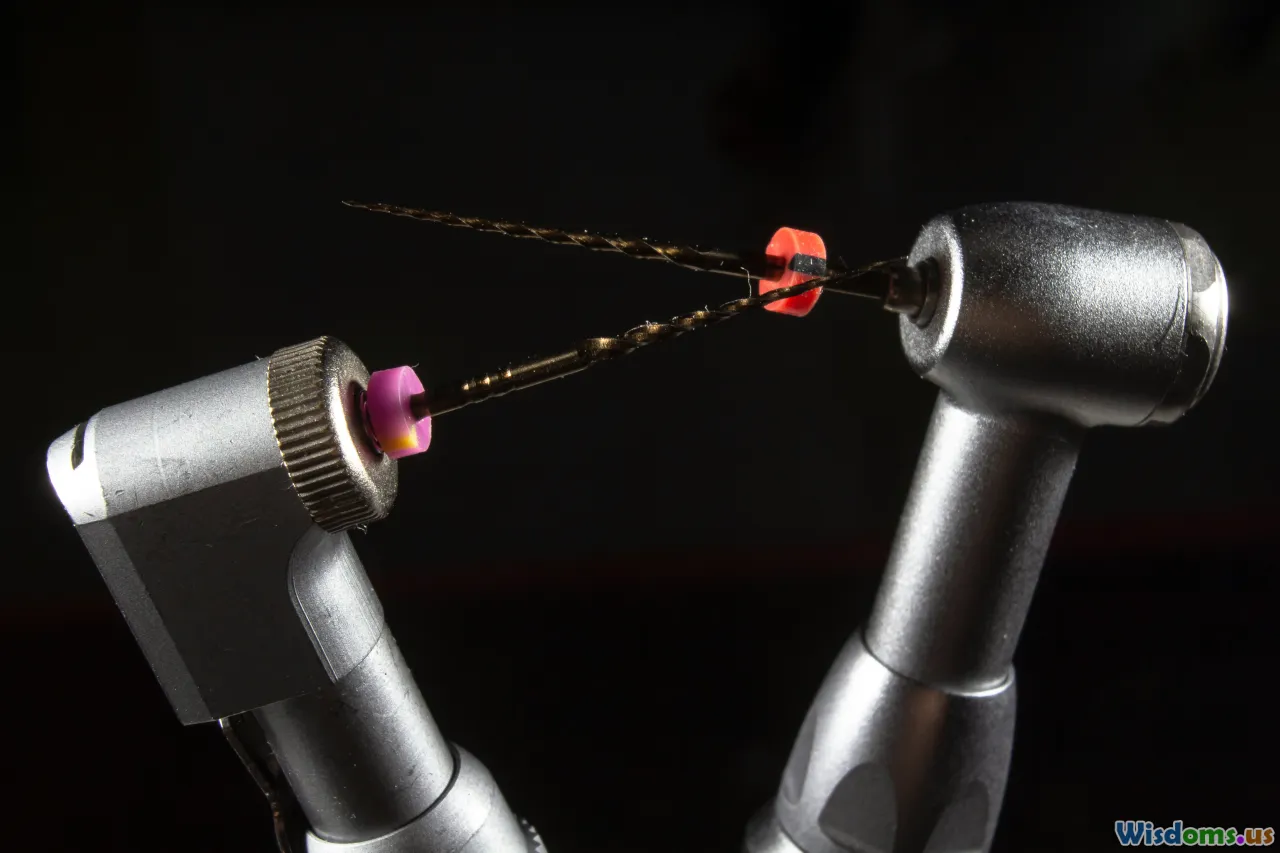

Case Study: From Toy Play to Tool Use in a Robot

Imagine a tabletop robot learning to use a spatula to slide a pancake-shaped object onto a plate. Instead of hard-coding the sequence, we can design a developmental path.

- Pretraining via self-supervision

  - Record hours of unscripted play: the robot randomly manipulates objects, pushes, pokes, and grasps within safety limits. Capture multimodal streams (RGB-D, proprioception, tactile).

  - Train a world model to predict next-frame embeddings and tactile events (slip, contact). Use masked visual modeling and temporal contrastive losses.

- Curriculum of affordances

  - Start with easy primitives: detect edges, slide flat objects, and stop when tactile slip rises.

  - Introduce tools gradually: a blunt stick first, then the spatula. The robot learns that the tool extends reach and can reduce friction when angled.

- Imitation plus preferences

  - Provide a few human demonstrations of the ideal trajectory: insert spatula, tilt, lift slightly, and slide.

  - Gather human preferences over short clips (A vs. B): which attempt was smoother, safer, or caused less deformation? Train a reward model.

- Active data collection

  - The robot proposes uncertain states (e.g., novel object shapes) and asks for minimal labels (was this a successful scoop?). It also requests targeted demonstrations when the policy entropy is high.

- Safe exploration

  - Constrain force and velocity; introduce virtual walls to prevent tipping objects off the table.

  - Use risk-aware policy optimization to trade off exploration and safety.

- Consolidation and transfer

  - Alternate online practice with offline “sleep”: replay difficult episodes to refine the world model and policy.

  - Transfer to new pancakes and plates with different textures by relying on tactile cues and invariant features.

Outcome: With a fraction of human labels and no brittle scripts, the robot acquires tool-use skills that generalize. The combination of curiosity, curriculum, multimodal integration, and preference learning mirrors the way a child learns to use a spatula while helping in the kitchen.

Open Questions and Research Frontiers

Child-learning analogies are powerful but incomplete. Several frontiers invite deeper work:

- Calibrated curiosity: How do we formalize curiosity that seeks information but avoids distraction? Balancing epistemic uncertainty against aleatoric noise remains challenging.

- Teaching signals: What is the best mix of demonstrations, preferences, and natural language instruction? How do we scaffold complex, long-horizon skills efficiently?

- Theory of mind: Children infer others’ beliefs and intentions by preschool. Building agents that reason about other agents’ mental states could improve collaboration and negotiation.

- Grounding language: Children ground words in perception and action. We need models that link abstract language to concrete affordances robustly across contexts.

- Energy and efficiency: The human brain is commonly estimated to run on roughly 20 watts, and children learn within that budget. Designing algorithms and hardware that approach human energy efficiency is an open engineering quest.

- Ethics and alignment: The social dimension of child learning—norms, fairness, and empathy—suggests a broader view of alignment than reward maximization. How do we encode and audit values in learning systems?

These questions are not merely philosophical; they shape the core of next-generation ML systems.

A Story to Remember: The Block That Didn’t Fit

A child tries to fit a square block into a round hole. Push, twist, push again. Then they pause, look around, and pick up a different block. Without lectures, they learned a constraint, inferred a causal relationship, and revised their plan. If you slowed the scene and annotated it with ML concepts, you would find everything we’ve discussed: hypothesis testing (will this shape fit?), curiosity (what happens if I twist?), active learning (try the most uncertain action), invariance (holes don’t change shape), and curriculum (start with larger holes, later smaller tolerances).

When your model hammers a square solution into a round dataset, it signals a design mismatch. Look for a better fit: more structure in representations, better alignment of objectives, or a curriculum that prepares it for the target distribution.

Bringing It All Together

Children learn by predicting, playing, asking, imitating, and reflecting. They bind modalities into a coherent world model, leverage social cues to accelerate learning, and develop causal and compositional understanding that transfers.

For practitioners, the blueprint is surprisingly actionable:

- Start with rich self-supervised pretraining to form durable representations.

- Drive exploration with bounded curiosity and play in safe sandboxes.

- Build modular, object-centric models that support composition and intervention.

- Adopt curricula and scaffolds, then phase them out as competence rises.

- Rely on active learning and social feedback to focus sparse human effort where it counts.

- Evaluate developmentally: test invariance, compositionality, and counterfactual reasoning—not just static accuracy.

The reward is not only better numbers; it’s robustness and interpretability. Child-inspired ML systems are more likely to say “I don’t know,” adjust on the fly, and generalize gracefully when the world looks different tomorrow.

Children are the proof that general intelligence can be sample-efficient, resilient, and grounded. If we learn from their learning—patiently, structurally, and playfully—our machines will not just get smarter; they will get wiser about how they learn.

