Top Challenges When Translating Synaptic Connections Into Code

Explore the top hurdles in encoding synaptic connections into code and why bridging biology and computation remains complex.

Introduction

The human brain is nature's masterpiece of computation — a dense network comprising roughly 86 billion neurons interconnected by trillions of synapses. Translating this intricate web of synaptic connections into code for simulation or artificial intelligence pursuits is a feat that both fascinates and frustrates neuroscientists, computer scientists, and AI researchers alike. But why is it so challenging to capture the essence of these connections in digital form? What limits do we face beyond sheer data scale? Understanding these hurdles is critical to advancing brain-inspired technologies and deepening our knowledge of cognition.

This article explores the top challenges inherent in converting synaptic connections into computational code, from granular complexity and biological variability to data acquisition, temporal dynamics, and the limits of current computational resources, covering each barrier with insights and examples.

Understanding Synaptic Complexity

The Extraordinary Granularity

Synapses aren’t just static connections; each synapse exhibits a rich landscape of structural and functional diversity. For example, synaptic strength—a key parameter that determines signal transmission efficacy—can vary dramatically even between neurons of the same type. Additionally, synapses involve myriad molecular and biochemical processes such as neurotransmitter release probability, receptor dynamics, and plasticity mechanisms like long-term potentiation (LTP) and depression (LTD).

Capturing this complexity requires multi-scale modeling that can integrate molecular details with network-level behavior. Representing all of these parameters precisely in code quickly becomes intractable at brain-wide scale, because every parameter added per synapse is multiplied by trillions of synapses in both storage and computation.
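To give a sense of scale, the back-of-envelope calculation below estimates raw storage for a handful of per-synapse parameters. It is a sketch under loudly stated assumptions: about 10^14 synapses for the human brain (a commonly cited order-of-magnitude figure), five hypothetical float32 parameters per synapse, and two 64-bit neuron IDs per synapse, since 86 billion neurons exceed the roughly 4.3 billion values a 32-bit integer can label.

```python
# Back-of-envelope storage estimate for per-synapse parameters.
# All figures are illustrative assumptions, not measurements.

BYTES_PER_FLOAT32 = 4
# 86 billion neurons exceed 2**32 (about 4.3 billion), so 64-bit neuron IDs are assumed.
BYTES_PER_INDEX = 8


def synapse_storage_bytes(n_synapses: int, n_params: int) -> int:
    """Bytes for n_params float32 values plus pre- and postsynaptic IDs per synapse."""
    per_synapse = n_params * BYTES_PER_FLOAT32 + 2 * BYTES_PER_INDEX
    return n_synapses * per_synapse


# Hypothetical parameter set: weight, release probability, delay,
# and two plasticity state variables.
N_PARAMS = 5
HUMAN_SYNAPSES = 10**14  # order-of-magnitude assumption

total = synapse_storage_bytes(HUMAN_SYNAPSES, N_PARAMS)
print(f"{total / 1e15:.1f} PB")  # → 3.6 PB (1 PB = 10**15 bytes)
```

Even this minimal parameter set lands in the petabyte range before any molecular detail, activity history, or simulation state is added.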

Variability and Plasticity

No two synapses are identical in structure or function. For instance, in the hippocampus, synaptic configurations differ across space and time, reflecting a continually changing plastic landscape that is critical for learning and memory. Implementing this variability demands adaptive models that account for stochastic changes, yet the same code must keep that randomness controlled and reproducible so results can be verified; achieving both remains an ongoing challenge.
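One common way to reconcile variability with reproducibility is to draw heterogeneous parameters from an explicitly seeded random number generator. The sketch below assumes, for illustration only, that weights follow a lognormal distribution (a shape often reported for cortical synaptic strengths); the distribution parameters and the helper name `sample_weights` are hypothetical. It relies on NumPy's `default_rng`.

```python
import numpy as np


def sample_weights(n_synapses: int, seed: int, mu: float = -0.5, sigma: float = 1.0) -> np.ndarray:
    """Draw heterogeneous synaptic weights from a lognormal distribution.

    A dedicated, explicitly seeded Generator makes the variability reproducible:
    the same seed always yields the same weights. mu and sigma (parameters of the
    underlying normal distribution) are hypothetical values.
    """
    rng = np.random.default_rng(seed)
    return rng.lognormal(mean=mu, sigma=sigma, size=n_synapses)


run_a = sample_weights(1000, seed=42)
run_b = sample_weights(1000, seed=42)
run_c = sample_weights(1000, seed=7)

print(np.array_equal(run_a, run_b))  # True: same seed, identical weights
print(np.array_equal(run_a, run_c))  # False: different seed, different draw
```

In a larger model, one might derive independent streams per brain region with `np.random.SeedSequence(seed).spawn(n)`, so that adding a region does not reshuffle the random draws of the others.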

Simulation efforts such as the Blue Brain Project underscore this struggle, as they balance biologically plausible variability with the computational tractability needed for large-scale simulations.

Data Acquisition and Fidelity Challenges

Incomplete and Noisy Data

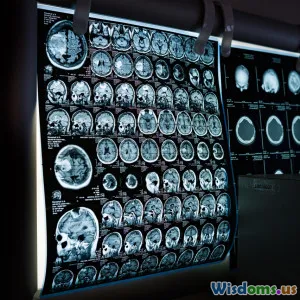

Mapping synaptic connections accurately requires extensive experimental data from techniques like electron microscopy (EM) reconstruction or electrophysiological recordings. However, these methods come with trade-offs: EM offers nanometer-scale resolution but is slow and labor-intensive, so reconstructions typically cover only small tissue volumes and yield partial connectomes, while electrophysiology captures function but samples only a small number of connections at a time.

Additionally, noise in recordings and errors in reconstruction add ambiguity. Encoding partial or noisy data into models risks omitting essential characteristics or introducing errors that propagate throughout simulations, undermining their validity.
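A toy experiment makes this propagation concrete. The sketch below uses a linear rate model, r = W r + h, whose steady state is r = (I - W)^-1 h, as a stand-in for a full simulation; the random "ground-truth" connectome, the 20% miss rate, and the 20% weight noise are all hypothetical choices. It compares steady-state rates computed from the true matrix with those from a copy missing synapses and from a copy with noisy weights.

```python
import numpy as np


def steady_state_rates(W: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Fixed point of the toy linear rate model r = W @ r + h, i.e. r = (I - W)^-1 h.

    Only meaningful when the spectral radius of W is below 1 (stable dynamics).
    """
    return np.linalg.solve(np.eye(W.shape[0]) - W, h)


rng = np.random.default_rng(0)
n = 200

# Hypothetical "ground-truth" connectome: 10% connection density, excitatory weights,
# rescaled so the spectral radius is 0.8 (stable).
W_true = (rng.random((n, n)) < 0.1) * rng.random((n, n))
W_true *= 0.8 / np.max(np.abs(np.linalg.eigvals(W_true)))
h = np.ones(n)  # uniform external drive

# Two kinds of mapping error.
dropped = W_true * (rng.random((n, n)) >= 0.2)            # about 20% of synapses missed
noisy = W_true * (1 + 0.2 * rng.standard_normal((n, n)))  # 20% multiplicative weight noise

r_true = steady_state_rates(W_true, h)
for label, W in [("missed synapses", dropped), ("noisy weights", noisy)]:
    r = steady_state_rates(W, h)
    err = np.linalg.norm(r - r_true) / np.linalg.norm(r_true)
    print(f"{label}: relative rate error {err:.2f}")
```

Because the fixed point depends on the inverse of I - W, the amplification of mapping errors grows as the network approaches instability (spectral radius near 1), which is one reason small reconstruction errors can matter more than their size suggests.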

Scaling Up: From Microcircuits to Whole Brain

While datasets of small circuits can be reliably captured and coded, scaling to entire brain regions—or entire brains—is orders of magnitude harder. The mouse brain, for example, contains around 70 million neurons and more than a trillion synapses—a data magnitude that current data acquisition methods and storage capabilities strain to encompass.

Consequently, scientists often rely on statistical or probabilistic models to approximate connectivity patterns, introducing abstraction layers in code that may suppress fine-grained biological details.
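A typical abstraction of this kind replaces a measured wiring diagram with a connection-probability rule. The sketch below assumes an exponential fall-off with distance, P(i to j) = p0 exp(-d_ij / λ); the values of p0 and λ, the 2-D soma positions, and the function name `sample_connectivity` are hypothetical. Storing only the rule parameters and a seed regenerates the same network on demand.

```python
import numpy as np


def sample_connectivity(positions: np.ndarray, p0: float, length_scale: float, seed: int) -> np.ndarray:
    """Sample a directed boolean adjacency matrix from a distance-dependent rule.

    P(i -> j) = p0 * exp(-d_ij / length_scale), with no self-connections.
    Only (p0, length_scale, seed) need to be stored, not the edges themselves.
    """
    rng = np.random.default_rng(seed)
    diff = positions[:, None, :] - positions[None, :, :]  # pairwise displacement vectors
    dist = np.linalg.norm(diff, axis=-1)
    prob = p0 * np.exp(-dist / length_scale)
    np.fill_diagonal(prob, 0.0)  # forbid autapses in this sketch
    return rng.random(prob.shape) < prob


layout_rng = np.random.default_rng(1)
positions = layout_rng.uniform(0, 500, size=(300, 2))  # hypothetical soma positions in micrometres
A = sample_connectivity(positions, p0=0.3, length_scale=100.0, seed=123)
print(A.sum(), "connections among", len(positions), "neurons")
```

The sampled network reproduces the assumed statistics rather than any particular animal's wiring, which is exactly the loss of fine-grained detail described above.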

Modeling Dynamics and Temporal Complexity

Dynamic Synaptic Behavior

Synapses are not mere wired connections but dynamic entities constantly adapting based on previous activity and environmental stimuli. Capturing temporal dynamics involves modeling synaptic transmission delays, changing neurotransmitter release, receptor kinetics, and activity-dependent plasticity.

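Integrating such dynamics demands systems of differential equations or spiking neural network models with explicit temporal parameters. For example, the Hodgkin-Huxley model of action potential generation and the Tsodyks-Markram model of short-term synaptic plasticity (depression and facilitation) are biologically grounded, but their cost grows quickly at scale: Hodgkin-Huxley requires integrating four coupled nonlinear equations per neuron at fine time steps, and even a cheap per-synapse update must be repeated for billions of synapses.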
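As an illustration of what such a synapse model looks like in code, here is an event-driven sketch of Tsodyks-Markram-style short-term plasticity. It follows one common formulation (utilization u, available resources R, efficacy A * u * R per spike); published variants differ in update order and in how u relaxes between spikes, so treat this as a sketch rather than a reference implementation. The function name `tm_efficacies` and the default parameter values are hypothetical.

```python
import math


def tm_efficacies(spike_times_ms, U=0.5, tau_rec=800.0, tau_facil=0.0, A=1.0):
    """Event-driven Tsodyks-Markram-style short-term plasticity (one common variant).

    Returns the efficacy A * u * R for each presynaptic spike (times in ms).
    R: fraction of available resources; u: fraction of R released by a spike.
    Between spikes, R recovers toward 1 with tau_rec and the facilitated part
    of u decays with tau_facil; tau_facil = 0 disables facilitation.
    """
    u, R = U, 1.0  # state at the first spike: baseline utilization, full resources
    efficacies = []
    prev_t = None
    for t in spike_times_ms:
        if prev_t is not None:
            dt = t - prev_t
            rec = math.exp(-dt / tau_rec)
            # Resources left after the previous release, then recovery toward 1.
            R = R * (1.0 - u) * rec + (1.0 - rec)
            # Facilitation decays between spikes; baseline U is added at each spike.
            fac = math.exp(-dt / tau_facil) if tau_facil > 0 else 0.0
            u = U + u * (1.0 - U) * fac
        efficacies.append(A * u * R)
        prev_t = t
    return efficacies


# A regular 50 Hz train with the depression-dominated defaults.
print([round(e, 3) for e in tm_efficacies([0.0, 20.0, 40.0, 60.0])])  # → [0.5, 0.256, 0.137, 0.079]
```

Each update costs only a few floating-point operations; the expense comes from repeating it for every spike at every one of billions of synapses.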

Balancing Realism and Computability

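Due to computational constraints, many models simplify dynamics, sacrificing biological fidelity for efficiency. The classic leaky integrate-and-fire (LIF) neuron model is computationally light, but it reduces each neuron to a single voltage variable and typically collapses synaptic input into a simple current or conductance term, abstracting away detailed synapse behavior.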
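The integrate-and-fire simplification is easy to see in code. In this sketch all synaptic detail is collapsed into a single input current I(t); the parameter values are illustrative textbook-style choices, the name `simulate_lif` is hypothetical, and forward Euler is used for simplicity.

```python
import numpy as np


def simulate_lif(I_ext, dt=0.1, tau_m=20.0, v_rest=-65.0, v_reset=-65.0,
                 v_thresh=-50.0, R_m=10.0):
    """Leaky integrate-and-fire neuron: tau_m * dV/dt = -(V - v_rest) + R_m * I(t).

    Forward-Euler integration. Units: ms, mV, megohm, nA (so R_m * I is in mV).
    Returns spike times in ms; V is reset to v_reset after each threshold crossing.
    """
    v = v_rest
    spike_times = []
    for step, current in enumerate(I_ext):
        v += dt / tau_m * (-(v - v_rest) + R_m * current)
        if v >= v_thresh:
            spike_times.append((step + 1) * dt)
            v = v_reset
    return spike_times


# 1 s of constant input sampled at dt = 0.1 ms (hypothetical stimulus).
strong = simulate_lif(np.full(10_000, 2.0))  # drives V toward -45 mV, above threshold
weak = simulate_lif(np.full(10_000, 1.0))    # drives V toward -55 mV, below threshold
print(len(strong), "spikes vs", len(weak), "spikes")
```

Whatever the receptor kinetics or release statistics of the real synapses, this model sees only their summed current, which is what makes it cheap.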

Striking a balance between computational tractability and biological realism remains a cornerstone challenge. Choosing the right granularity to represent synaptic function influences the validity and applicability of simulations—critical for areas like neuroprosthetics or AI architectures.

Computational and Resource Limitations

Processing Power and Storage

The computational cost to simulate brain-scale synaptic connectivity is staggering. Large-scale efforts such as the Human Brain Project and the EPFL-led Blue Brain Project, which originally ran on IBM Blue Gene supercomputers, depend on supercomputers with dedicated hardware. Even then, running real-time simulations of whole brains with detailed synaptic modeling is often infeasible.

Storage is another bottleneck; archiving detailed synaptic data at molecular resolution demands petabyte-scale resources, necessitating efficient data compression and management strategies.
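Because most possible neuron pairs are not connected, one standard way to cut storage is a sparse format that records only existing synapses. The sketch below builds compressed sparse row (CSR) arrays with plain NumPy (SciPy's `scipy.sparse.csr_matrix` provides the same layout ready-made); the network size, the 1% connection density, and the helper name `dense_to_csr` are hypothetical.

```python
import numpy as np


def dense_to_csr(W: np.ndarray):
    """Convert a dense weight matrix to CSR arrays (data, indices, indptr).

    Row i's nonzero weights are data[indptr[i]:indptr[i + 1]], with their
    column (postsynaptic) indices in indices[indptr[i]:indptr[i + 1]].
    """
    rows, cols = np.nonzero(W)  # returned in row-major order, so rows are sorted
    data = W[rows, cols].astype(np.float32)
    indices = cols.astype(np.int32)  # would need int64 beyond about 2.1 billion neurons
    counts = np.bincount(rows, minlength=W.shape[0])
    indptr = np.concatenate(([0], np.cumsum(counts))).astype(np.int64)
    return data, indices, indptr


rng = np.random.default_rng(0)
n = 2000
W = np.where(rng.random((n, n)) < 0.01, rng.random((n, n)), 0.0).astype(np.float32)
data, indices, indptr = dense_to_csr(W)

dense_bytes = W.nbytes
csr_bytes = data.nbytes + indices.nbytes + indptr.nbytes
print(f"dense: {dense_bytes / 1e6:.1f} MB, CSR: {csr_bytes / 1e6:.2f} MB")
```

Sparse layouts remove the cost of absent synapses but not of the parameters attached to each existing one, so they complement rather than replace compression of the per-synapse data itself.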

Software and Algorithmic Challenges

Writing scalable, efficient code to handle synapse modeling goes beyond raw power. It requires sophisticated algorithms that can optimize memory usage, parallelize workloads, and manage numerical stability.
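Numerical stability is easy to underestimate. For a synaptic conductance that simply decays, dg/dt = -g/τ, the explicit (forward) Euler update multiplies g by (1 - Δt/τ) each step, which flips sign and grows whenever Δt > 2τ. Multiplying by exp(-Δt/τ) instead is exact for this linear equation at any step size. The sketch below compares the two; the time constant, step size, and function names are hypothetical choices made to expose the problem.

```python
import math


def decay_forward_euler(g0, tau, dt, steps):
    """Explicit Euler for dg/dt = -g / tau: g <- g * (1 - dt / tau) each step."""
    g = g0
    for _ in range(steps):
        g += dt * (-g / tau)
    return g


def decay_exponential(g0, tau, dt, steps):
    """Exact propagator for the same linear ODE: g <- g * exp(-dt / tau) each step."""
    factor = math.exp(-dt / tau)
    g = g0
    for _ in range(steps):
        g *= factor
    return g


tau_fast = 0.5  # ms, hypothetical fast synaptic time constant
dt = 1.5        # ms, a step larger than 2 * tau_fast
print(decay_forward_euler(1.0, tau_fast, dt, 20))  # → 1048576.0, i.e. (-2)**20: blown up
print(decay_exponential(1.0, tau_fast, dt, 20))     # about exp(-60): decays as it should
```

In a network, the fastest time constant anywhere limits the explicit step size for everything, so the choice of integrator directly affects how much computation a stable simulation needs.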

Neural simulation frameworks such as NEURON or NEST aim to provide such capabilities but still face hurdles when integrating full synaptic complexity. Many researchers resort to surrogate models or machine learning to circumvent direct simulation costs.

Insights from Interdisciplinary Approaches

Advances from Machine Learning and AI

Interestingly, artificial neural networks (ANNs) only loosely mimic synaptic connectivity: each connection is reduced to a single scalar weight that abstracts away synaptic biology, yet these models retain the brain's broad principles of massively parallel computation and learning by adjusting connection strengths.

Recent research into spiking neural networks (SNNs) attempts to bridge the gap, incorporating temporal and synaptic dynamics closer to biological neurons. However, successfully coding intricate synaptic mechanics in SNNs that scale remains an open research frontier.
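To make synaptic mechanics in SNNs concrete, here is a sketch of pair-based spike-timing-dependent plasticity (STDP), a widely used learning rule in SNN research that produces LTP-like or LTD-like changes depending on spike order. It is an illustrative choice rather than a method named in this article; the amplitudes, time constants, all-to-all pairing scheme, weight bounds, and function names are hypothetical.

```python
import math


def stdp_dw(delta_t_ms, A_plus=0.01, A_minus=0.012, tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one spike pair, delta_t_ms = t_post - t_pre.

    Pre-before-post (delta_t > 0) potentiates (LTP-like);
    post-before-pre (delta_t < 0) depresses (LTD-like).
    """
    if delta_t_ms > 0:
        return A_plus * math.exp(-delta_t_ms / tau_plus)
    if delta_t_ms < 0:
        return -A_minus * math.exp(delta_t_ms / tau_minus)
    return 0.0


def apply_all_pairs(w, pre_spikes, post_spikes, w_min=0.0, w_max=1.0):
    """Sum contributions from every pre/post pair (all-to-all), then clip the weight."""
    dw = sum(stdp_dw(t_post - t_pre) for t_pre in pre_spikes for t_post in post_spikes)
    return min(w_max, max(w_min, w + dw))


# Presynaptic spikes 5 ms before postsynaptic spikes, then the reverse order.
w_causal = apply_all_pairs(0.5, pre_spikes=[10.0, 50.0], post_spikes=[15.0, 55.0])
w_acausal = apply_all_pairs(0.5, pre_spikes=[15.0, 55.0], post_spikes=[10.0, 50.0])
print(w_causal > 0.5, w_acausal < 0.5)  # True True
```

The all-to-all loop costs O(pre spikes × post spikes) per synapse; large simulations usually replace it with decaying per-synapse trace variables, which is one of the design trade-offs that makes scaling these rules hard.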

Lessons from Connectomics

Connectomics—the comprehensive mapping of neural connections—has revolutionized our understanding of brain wiring. Projects like the MICrONS initiative illuminate detailed microcircuitry that informs more accurate coding strategies.

Yet, as datasets grow, integrating them into code while preserving biological context challenges software design and validation methodologies alike.

Conclusion

Translating synaptic connections into computational code is a fascinating but formidable challenge due to biological complexity, variability, data limitations, modeling dynamics, and resource constraints. Successfully bridging this gap promises tremendous advances—from brain-mimicking AI technologies to understanding diseases affecting neural connectivity.

Continuous progress hinges on interdisciplinary collaboration, combining neuroscience insight, computational power, and algorithmic innovation. As we decode nature’s most sophisticated network, future breakthroughs will likely emerge from hybrid models balancing biological fidelity with computational efficiency.

Embracing these challenges invites the scientific community to reimagine coding paradigms for representing life’s most intricate connection system—one synapse at a time.

References:

- Markram, H., et al. (2015). Reconstruction and Simulation of Neocortical Microcircuitry. Cell, 163(2), 456–492.

- Blue Brain Project. (n.d.). Neuroscience Simulation Platform. https://www.epfl.ch/research/domains/bluebrain/

- Kasthuri, N., et al. (2015). Saturated Reconstruction of a Volume of Neocortex. Cell, 162(3), 648–661.

- Gerstner, W., & Kistler, W. M. (2002). Spiking Neuron Models: Single Neurons, Populations, Plasticity. Cambridge University Press.

- MICrONS Project. (n.d.). Machine Intelligence from Cortical Networks. https://microns-explorer.org/
