Building a Virtual Classroom From Scratch Step By Step

A step‑by‑step blueprint for building a virtual classroom, from platform selection and content design to live sessions, assessment, accessibility, and analytics, geared for schools, trainers, and enterprises.

You don’t need a massive engineering team or a seven‑figure budget to build a high‑quality virtual classroom. What you do need is a clear plan, a deliberate scope, and smart technology choices that prioritize the learning experience over novelty. In this step‑by‑step guide, we’ll walk through how to design, architect, and launch a virtual classroom from scratch—covering everything from pedagogy and user experience to WebRTC video, interactive tools, accessibility, analytics, security, and scale. Each section includes practical examples, trade‑offs, and actionable tips you can apply immediately.

Step 1: Clarify the Learning Goals and Constraints

Before a line of code is written, define what “learning success” means for your audience and your institution.

- Identify personas and use cases:

  - K‑12 synchronous classes with strict attendance rules and parental permissions.

  - University seminars needing breakout rooms and recorded lectures.

  - Corporate training requiring compliance tracking and SCORM/xAPI reporting.

  - Bootcamps emphasizing pair programming and live code review.

- Clarify constraints:

  - Class size: 1:1 tutoring, small groups (≤25), large lectures (≥100).

  - Bandwidth realities: rural learners, mobile‑only students, corporate VPNs.

  - Compliance: FERPA (US education records), COPPA (children’s data), GDPR (EU personal data), and regional data residency requirements.

  - Devices: Chromebooks in K‑12, iPads for primary, desktop‑focused for enterprise.

- Define outcomes:

  - Synchronous engagement: talk time balance, chat participation, polls completed.

  - Asynchronous progress: modules completed, quiz mastery, time‑on‑task.

  - Teacher success: time saved on grading, ease of materials reuse, attendance automation.

Deliverables for Step 1:

- A 1‑page Product Requirements Doc (PRD) with your MVP features, non‑goals, and success metrics.

- A learner journey map from signup to certificate.

- A risk register (e.g., “mobile Safari screen‑share limitations,” “low bandwidth cohorts”).

Example: A 20‑student language class where the MVP must include low‑latency video with breakout rooms, real‑time text chat, a collaborative whiteboard, recordings, and weekly quizzes. Success metrics: average attendance of at least 80% and at least 90% quiz completion within 48 hours of class.

Step 2: Map Core Features Into a Minimal Viable Classroom

Resist the urge to build everything. A focused MVP lets you deliver value quickly and learn from real classrooms.

Must‑have features for most classrooms:

- Real‑time video/audio (group calls, mute controls, screen share)

- Chat (text, emojis, moderation, file attachments)

- Whiteboard or collaborative canvas (draw, type, shapes)

- Content library (upload PDFs/slides, embedded links)

- Assignments and quizzes (with basic grading)

- Scheduling and attendance tracking

- Recording (cloud storage, consent prompts)

- Authentication (SSO optional), roles (Admin/Teacher/Student)

Nice‑to‑have later:

- Breakout rooms, polls, hand‑raise, reactions

- LTI 1.3 integration for LMS systems

- SCORM/xAPI import/export

- Advanced analytics and automated summaries

- Proctoring capabilities

Prioritization example using a lightweight RICE approach:

- Video/Audio (Reach: High, Impact: High, Confidence: High, Effort: High) → MVP

- Chat (High, Medium, High, Low) → MVP

- Whiteboard (Medium, Medium, Medium, Medium) → MVP

- Scheduling (High, Medium, High, Low) → MVP

- Breakouts (Medium, High, Medium, Medium) → MVP+1

- Polls (Medium, Medium, High, Low) → MVP+1
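
The ratings above can be turned into a rough ranking. The sketch below assumes a numeric mapping (High = 3, Medium = 2, Low = 1) that is not part of the list itself, and applies the usual RICE formula of reach × impact × confidence ÷ effort. The names `RiceFeature` and `riceScore` are illustrative.

```typescript
// Assumed mapping from the qualitative ratings to numbers.
type Level = "High" | "Medium" | "Low";
const LEVEL_SCORE: Record<Level, number> = { High: 3, Medium: 2, Low: 1 };

interface RiceFeature {
  label: string;
  reach: Level;
  impact: Level;
  confidence: Level;
  effort: Level;
}

// RICE score: (reach * impact * confidence) / effort. Higher is better.
function riceScore(f: RiceFeature): number {
  const benefit =
    LEVEL_SCORE[f.reach] * LEVEL_SCORE[f.impact] * LEVEL_SCORE[f.confidence];
  return benefit / LEVEL_SCORE[f.effort];
}

const features: RiceFeature[] = [
  { label: "Video/Audio", reach: "High", impact: "High", confidence: "High", effort: "High" },
  { label: "Chat", reach: "High", impact: "Medium", confidence: "High", effort: "Low" },
  { label: "Whiteboard", reach: "Medium", impact: "Medium", confidence: "Medium", effort: "Medium" },
  { label: "Scheduling", reach: "High", impact: "Medium", confidence: "High", effort: "Low" },
  { label: "Breakouts", reach: "Medium", impact: "High", confidence: "Medium", effort: "Medium" },
  { label: "Polls", reach: "Medium", impact: "Medium", confidence: "High", effort: "Low" },
];

// Sort a copy so the original order is preserved.
const ranked = features.slice().sort((a, b) => riceScore(b) - riceScore(a));
```

Under this particular mapping, Polls outranks Whiteboard even though the whiteboard sits in the MVP, so treat the score as one input alongside judgment about what a class cannot run without.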

Create a sprint plan (2‑week sprints):

- Sprint 1: Auth, basic classroom lobby, text chat.

- Sprint 2: A/V join flow with error handling, screen share.

- Sprint 3: Whiteboard, content upload, basic assignments.

- Sprint 4: Recording pipeline, attendance export, polish.

Step 3: Choose the Architecture (Buy, Assemble, or Build)

You have three broad choices:

- Build everything in‑house (WebRTC + SFU + signaling + TURN)

  - Pros: Maximum control, potentially lower variable costs at scale.

  - Cons: Highest complexity and time‑to‑market; requires specialized media expertise.

  - Example stack: mediasoup or Janus SFU, custom Node signaling, coturn for STUN/TURN.

- Assemble using CPaaS/VCaaS components (e.g., Daily, Agora, Twilio, LiveKit Cloud, Jitsi‑as‑a‑Service)

  - Pros: Faster to ship, proven global media infrastructure, SDKs across platforms.

  - Cons: Per‑minute or per‑participant costs; vendor lock‑in considerations.

  - Good for: MVP and early growth; hybrid approach (own everything else, rent media).

- Embed existing conferencing via SDK (e.g., Zoom SDK)

  - Pros: Reliability, security, familiar UX; low engineering effort.

  - Cons: Less customization; feature roadmap tied to vendor; branding constraints.

Quick cost thought experiment for a class of 25 students:

- CPaaS video cost: Suppose $0.004–$0.007 per participant‑minute. A 60‑minute class with 25 participants → 1,500 participant‑minutes → roughly $6–$10.50 per class. At 1,000 classes/month, that is roughly $6k–$10.5k.

- Self‑hosted SFU: You pay for compute, egress bandwidth, TURN traffic, ops. Costs vary widely, but beyond a few thousand concurrent participants, self‑hosting may be cheaper if you have media expertise.
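
The CPaaS arithmetic above is easy to parameterize so you can rerun it with your own class sizes and vendor quotes. This is a minimal sketch; the function names are illustrative and the rates are the hypothetical ones from the thought experiment, not any vendor's actual pricing.

```typescript
interface CostRange {
  low: number;
  high: number;
}

// Media cost for one class: participants x minutes x price per participant-minute.
function classMediaCost(
  participants: number,
  minutes: number,
  ratePerParticipantMinute: number
): number {
  return participants * minutes * ratePerParticipantMinute;
}

// Monthly range for a number of classes and a low/high price band.
function monthlyMediaCost(
  participants: number,
  minutes: number,
  classesPerMonth: number,
  lowRate: number,
  highRate: number
): CostRange {
  return {
    low: classMediaCost(participants, minutes, lowRate) * classesPerMonth,
    high: classMediaCost(participants, minutes, highRate) * classesPerMonth,
  };
}

// 25 participants, 60 minutes, 1,000 classes per month.
const monthly = monthlyMediaCost(25, 60, 1000, 0.004, 0.007);
// monthly.low is about 6,000 and monthly.high is about 10,500 (US dollars).
```

Self‑hosting has no equally simple formula because compute, egress, TURN relay traffic, and staff time all vary by deployment, which is why the comparison is best made with measured numbers.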

Recommendation for most teams: Start with CPaaS to de‑risk and accelerate. Plan a migration path to your own SFU if/when economics or control demands it.

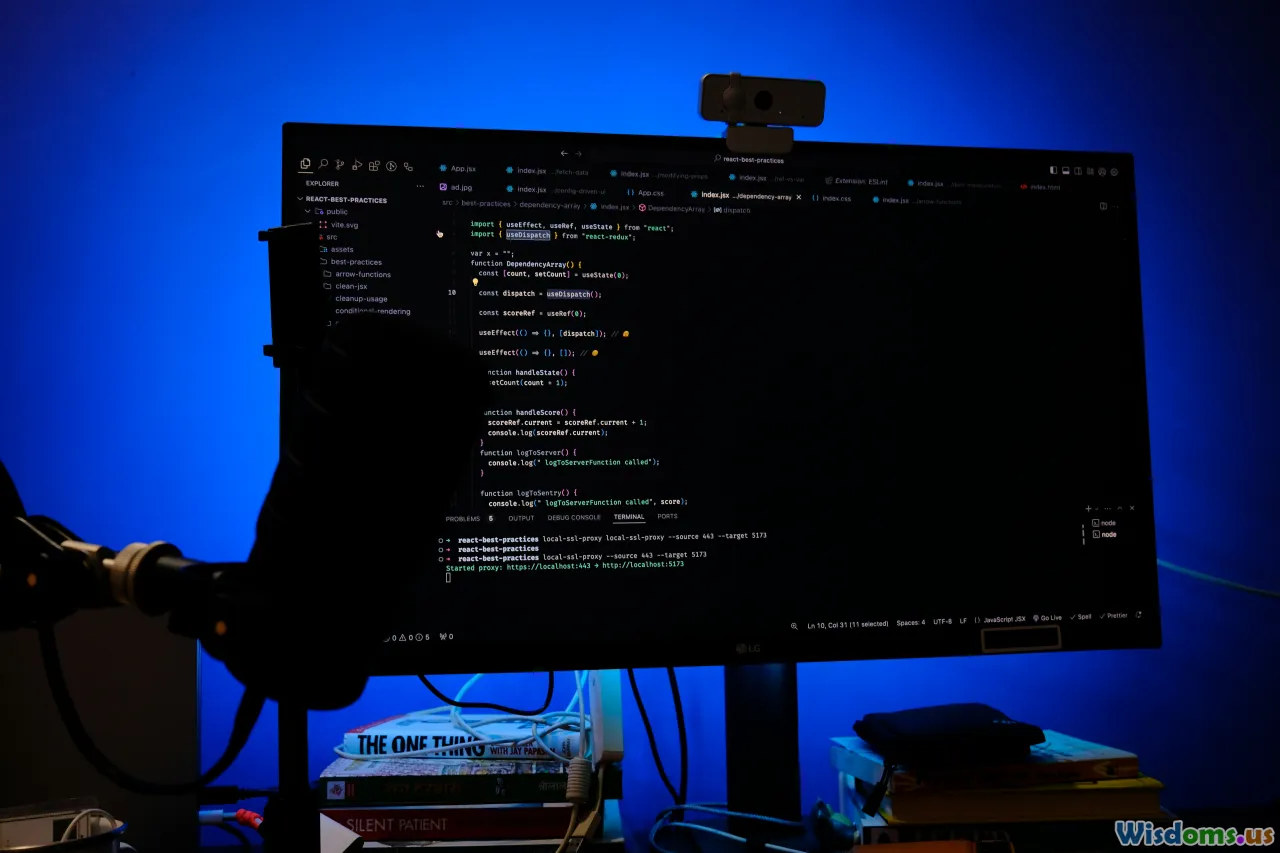

Step 4: Select the Tech Stack

Frontend:

- Framework: React + TypeScript (or Vue/Svelte if your team prefers). Next.js for SSR/SSG where helpful.

- State management: Redux Toolkit or Zustand. For media state, consider a dedicated “call store.”

- Styling: Tailwind CSS or CSS Modules for speed and consistency.

- WebRTC helpers: Use framework‑friendly SDKs if on CPaaS. For self‑build, simple‑peer or native APIs.

- PWA and offline support for assignments, content viewing, and note‑taking.

Backend:

- Language/Framework: Node.js (NestJS/Express) or Python (Django/FastAPI). Both have great ecosystems.

- Database: PostgreSQL for core data; Redis for pub/sub and ephemeral room state.

- Real‑time: WebSocket (e.g., Socket.IO, ws, or raw WebSocket) for signaling and chat.

- File storage: S3‑compatible object store with lifecycle policies.

- Search: Elasticsearch/OpenSearch or Postgres full‑text for content library.

- API design: REST for most endpoints; GraphQL for flexible dashboards.

DevOps/Infra:

- Cloud: AWS/GCP/Azure. Use managed Postgres (RDS/Cloud SQL), managed Redis (ElastiCache/Memorystore).

- CDN: CloudFront/Cloudflare for static assets and recordings.

- Media: CPaaS or self‑hosted SFU + coturn (ports 3478/5349).

- CI/CD: GitHub Actions, GitLab CI, or CircleCI. Blue‑green or canary deploys.

- Observability: Prometheus + Grafana, OpenTelemetry traces, centralized logs (ELK stack).

Data model sketch:

- Users(id, role, name, email, auth_provider, locale)

- Courses(id, title, description, visibility)

- Enrollments(user_id, course_id, role)

- Classes(id, course_id, start_at, end_at, join_code, recording_url)

- Assignments(id, course_id, title, due_at, rubric)

- Submissions(id, assignment_id, user_id, status, grade, feedback)

- Messages(id, class_id, user_id, content, sent_at)

- WhiteboardDocs(id, class_id, snapshot_url, version)

- Attendance(id, class_id, user_id, join_at, leave_at)

Step 5: Design the Classroom UX

Effective virtual classrooms feel calm, predictable, and empowering.

Layout principles:

- Teacher‑first controls: Mute all, spotlight video, lock room, quick poll.

- Attention to cognitive load: Keep critical tools visible; group secondary tools behind a drawer.

- Responsive design: Side panel collapses on mobile; big buttons for touch targets.

- Visibility: Show clear status (recording on, network quality indicators, captioning).

Accessibility baked in:

- Keyboard navigation: Tab order across mic/camera, chat, whiteboard tools.

- Color contrast: Aim for WCAG 2.2 AA; test light/dark modes.

- Caption controls: Toggle captions, adjust font size and background.

Real‑world tweaks that matter:

- Show speaking indicators and “hand raise” at the top.

- Offer “low bandwidth mode” (audio only, thumbnail videos, text‑based whiteboard updates).

- Teacher’s view includes a quick engagement panel: talk time percentages, unread chat, poll responses.

Example flow:

- Student hits “Join,” sees device check modal (mic test with visible audio meter).

- On join, student lands in the classroom with video grid and a dock: Chat, Whiteboard, Files, Participants.

- A persistent “Next steps” strip nudges: “Today’s quiz unlocks at 12:50.”

Step 6: Implement Real‑Time Audio/Video

If you’re assembling or building media, this is the heart of your classroom.

Core WebRTC concepts:

- Peers exchange session descriptions (SDP) via a signaling channel (often WebSocket).

- NAT traversal uses ICE with STUN/TURN servers. STUN discovers public addresses; TURN relays media if direct paths fail.

- Media encryption is mandatory: WebRTC always protects media with DTLS‑SRTP.

SFU vs MCU vs mesh:

- Mesh: Each client sends media to every other client. Simple but scales poorly past ~4–6 participants.

- MCU: Server decodes and mixes streams into a single composite stream. High server CPU cost; clients stay light because each receives one stream.

- SFU: Server forwards selected streams without mixing. Best balance for classrooms; supports simulcast and SVC.

Practical choices:

- Self‑hosted SFU: mediasoup, Janus, Jitsi Videobridge, LiveKit (open source).

- Managed: Daily, Agora, Twilio, LiveKit Cloud.

- TURN/STUN: coturn; open UDP/TCP 3478 and TLS 5349, plus the UDP relay port range; set a proper realm and long‑term credentials.

Codec considerations:

- Audio: Opus (wideband, variable bitrate, robust to loss). Start at ~24–32 kbps; enable echo cancellation and AGC.

- Video: VP8 for compatibility; VP9/AV1 where supported for quality at lower bitrate; H.264 for hardware acceleration.

Signaling flow example (simplified):

Client A:

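- stream = await navigator.mediaDevices.getUserMedia({ audio: true, video: true })

- pc = new RTCPeerConnection({ iceServers })

- stream.getTracks().forEach(track => pc.addTrack(track, stream))

- offer = await pc.createOffer() (the legacy offerToReceiveAudio/offerToReceiveVideo options are deprecated; add receive‑only transceivers with pc.addTransceiver() if you need them)

- await pc.setLocalDescription(offer)

- send via WebSocket: { type: "offer", sdp: offer.sdp }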

Server (SFU or signaling):

- forward offer to target or negotiate on behalf of SFU

Client B / SFU:

- create answer

- send { type: "answer", sdp }

Client A:

- setRemoteDescription(answer)

- handle onicecandidate events and send candidates via signaling

Simulcast and bandwidth adaptation:

- Publish multiple video layers (e.g., 180p/360p/720p); SFU forwards the right layer based on subscriber’s network.

- Adapt to packet loss and RTT; switch to audio‑only under poor conditions.
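
The layer choice described above can be sketched as a pure function. In practice an SFU makes this decision per subscriber from its own bandwidth estimates; the bitrates, thresholds, and names here (`LAYERS`, `chooseLayer`) are assumptions for illustration, not values from any particular SFU.

```typescript
interface SimulcastLayer {
  rid: string; // layer identifier
  height: number; // vertical resolution in pixels
  bitrateKbps: number; // assumed target bitrate
}

// Hypothetical ladder matching the 180p/360p/720p example.
const LAYERS: SimulcastLayer[] = [
  { rid: "q", height: 180, bitrateKbps: 150 },
  { rid: "h", height: 360, bitrateKbps: 500 },
  { rid: "f", height: 720, bitrateKbps: 1500 },
];

interface NetworkStats {
  availableKbps: number;
  packetLossPct: number;
  rttMs: number;
}

type LayerChoice =
  | { mode: "video"; layer: SimulcastLayer }
  | { mode: "audio-only" };

function chooseLayer(stats: NetworkStats, layers: SimulcastLayer[] = LAYERS): LayerChoice {
  // Assumed cut-offs: very lossy or very slow links fall back to audio only.
  if (stats.packetLossPct > 10 || stats.rttMs > 800) {
    return { mode: "audio-only" };
  }
  // Keep roughly 20% headroom for audio and retransmissions.
  const budgetKbps = stats.availableKbps * 0.8;
  const affordable = layers
    .filter((l) => l.bitrateKbps <= budgetKbps)
    .sort((a, b) => b.bitrateKbps - a.bitrateKbps);
  const best = affordable[0];
  return best ? { mode: "video", layer: best } : { mode: "audio-only" };
}
```

Returning an explicit audio‑only mode, rather than the lowest video layer, keeps the "protect audio first" rule visible in the type.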

Screen sharing:

- Use getDisplayMedia; offer separate audio capture. For browsers that restrict system audio, provide app‑audio guidance.

Recording approaches:

- Server‑side: SFU forks streams to a recorder/mixer; best for reliability.

- Client‑side: MediaRecorder API; less reliable, and the server cannot vouch for a recording it did not produce.

- Always show a “Recording” badge and obtain consent.

Testing tips:

- Simulate bad networks (Chrome DevTools, or Linux tc with the netem queueing discipline). Test 1%, 3%, 5% packet loss and 200–400 ms RTT.

- Validate devices across Chrome, Firefox, Safari, and Edge; mobile Safari has stricter autoplay policies.

Step 7: Build Interactive Tools (Chat, Whiteboard, Screen Share, Reactions)

Chat:

- Room‑scoped channels with basic formatting, emoji, and attachments.

- Moderation: delete messages, mute users, block links. Keep audit logs.

- Persistence: store messages with minimal PII; apply retention policies.

- Rate‑limit reactions to avoid animation overload.
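
One common way to rate‑limit chat messages or reactions is a token bucket per user. The sketch below is a minimal in‑memory version under assumed limits; the class name and the idea of passing the clock in explicitly (to keep it testable) are choices of this example, and a multi‑server deployment would need shared state such as Redis.

```typescript
class TokenBucket {
  private tokens: number;
  private lastRefillMs: number;
  private readonly capacity: number;
  private readonly refillPerSecond: number;

  constructor(capacity: number, refillPerSecond: number, nowMs: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full so short bursts are allowed
    this.lastRefillMs = nowMs;
  }

  // Returns true if the action is allowed at time nowMs, false if throttled.
  tryConsume(nowMs: number): boolean {
    const elapsedSeconds = Math.max(0, nowMs - this.lastRefillMs) / 1000;
    this.tokens = Math.min(
      this.capacity,
      this.tokens + elapsedSeconds * this.refillPerSecond
    );
    this.lastRefillMs = Math.max(this.lastRefillMs, nowMs);
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// Example: a burst of 3 reactions, then 1 more per second.
const reactions = new TokenBucket(3, 1, 0);
const burst = [reactions.tryConsume(0), reactions.tryConsume(0), reactions.tryConsume(0)];
const overLimit = reactions.tryConsume(0); // throttled
const afterOneSecond = reactions.tryConsume(1000); // allowed again
```

The same bucket can guard join attempts per class; only the capacity and refill rate change.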

Whiteboard:

- Start simple: shapes, pen, text, eraser, laser pointer.

- Data model: Represent shapes as JSON; store versions for undo/redo.

- Collaboration engine:

  - OT (Operational Transform) or CRDT (e.g., Yjs, Automerge) to handle concurrent edits without conflicts.

  - Persist snapshots to S3; keep deltas in Redis for quick room recovery.

- Permissions: Teacher can lock/unlock editing; student annotation mode with layers.
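
To make the CRDT idea concrete, here is a deliberately tiny last‑writer‑wins map of shapes. It is not how Yjs or Automerge work internally (they implement much richer CRDTs); it only shows why merges converge regardless of order. The types and the tie‑break on `clientId` are assumptions of this sketch, and it assumes no client reuses a timestamp for two different writes to the same shape.

```typescript
interface ShapeEntry {
  payload: string | null; // serialized shape; null is a tombstone for a deleted shape
  timestamp: number; // logical or wall-clock time of the write
  clientId: string; // tie-breaker when timestamps are equal
}

type BoardState = Record<string, ShapeEntry>;

// True if candidate should replace current.
function wins(candidate: ShapeEntry, current: ShapeEntry): boolean {
  if (candidate.timestamp !== current.timestamp) {
    return candidate.timestamp > current.timestamp;
  }
  return candidate.clientId > current.clientId;
}

// Merge two replicas; the result is the same whichever side is "a" or "b".
function mergeBoards(a: BoardState, b: BoardState): BoardState {
  const merged: BoardState = {};
  Object.keys(a).forEach((id) => {
    merged[id] = a[id] as ShapeEntry;
  });
  Object.keys(b).forEach((id) => {
    const incoming = b[id] as ShapeEntry;
    const current = merged[id];
    if (current === undefined || wins(incoming, current)) {
      merged[id] = incoming;
    }
  });
  return merged;
}

// Shapes that should be drawn (tombstones are kept in state but hidden).
function visibleShapeIds(state: BoardState): string[] {
  return Object.keys(state)
    .filter((id) => (state[id] as ShapeEntry).payload !== null)
    .sort();
}
```

Deletes are stored as tombstones so that a late‑arriving older write cannot resurrect a shape.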

Screen share:

- Provide a picture‑in‑picture or “focus mode.”

- Let teachers pin shared content for all.

- Offer annotation over shared screens where possible.

Reactions and engagement:

- Non‑verbal cues: hand raise, thumbs up/down, slow down/speed up.

- Polls: single/multiple choice, timed; display aggregated results.

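Example: Implement a collaborative whiteboard with Yjs and a WebSocket provider:

import * as Y from 'yjs';

import { WebsocketProvider } from 'y-websocket';

// classId, shapeId, and shapePayload come from your app (room ID, shape ID, JSON‑serializable shape).

const ydoc = new Y.Doc();

// One shared room per class; the provider syncs updates between connected clients.

const provider = new WebsocketProvider('wss://ws.example.com', `whiteboard-${classId}`, ydoc);

const yMap = ydoc.getMap('shapes');

// On draw

yMap.set(shapeId, shapePayload);

// On delete

yMap.delete(shapeId);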

Step 8: Content Management, Assignments, and Assessments

Content library:

- Upload PDFs, slides, images, and short videos; generate thumbnails and text extraction for search.

- Storage: S3 buckets with lifecycle rules; encode video to HLS for adaptive streaming.

- Access control: pre‑signed URLs with short TTLs; per‑course ACLs.

Assignments:

- Define rubric and due dates; support file uploads, links, or text responses.

- Versioning: allow resubmission until due date; keep history.

- Grading: rubric‑based scoring and quick comments; bulk export to CSV.

Quizzes:

- Question types: multiple choice, short answer, numeric, file upload.

- Randomization: shuffle questions and options; draw from a question bank.

- Time limits and accessibility: extended time accommodations by role.

- Cheating mitigation: question pools, different question orders, server‑side timers.
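
Randomized question order is easier to support if it is reproducible, so that a student who reloads the page sees the same order. The sketch below uses a Fisher–Yates shuffle driven by a small seeded generator; deriving the seed from quiz and student IDs is an assumption of this example, and the generator is not cryptographically secure.

```typescript
// Park-Miller style generator: deterministic for a given seed.
function makeRng(seed: number): () => number {
  let state = (Math.abs(Math.floor(seed)) % 2147483646) + 1; // 1..2147483646
  return function next(): number {
    state = (state * 48271) % 2147483647;
    return (state - 1) / 2147483646; // in [0, 1)
  };
}

// Fisher-Yates shuffle on a copy; the input array is left untouched.
function seededShuffle<T>(items: T[], seed: number): T[] {
  const out = items.slice();
  const rng = makeRng(seed);
  for (let i = out.length - 1; i > 0; i--) {
    const j = Math.floor(rng() * (i + 1));
    const tmp = out[i] as T;
    out[i] = out[j] as T;
    out[j] = tmp;
  }
  return out;
}

// Hypothetical seed derivation: a simple string hash of quiz and student IDs.
function seedFor(quizId: string, studentId: string): number {
  const key = quizId + ":" + studentId;
  let hash = 0;
  for (let i = 0; i < key.length; i++) {
    hash = (hash * 31 + key.charCodeAt(i)) % 2147483647;
  }
  return hash;
}

const questions = ["q1", "q2", "q3", "q4", "q5"];
const orderForStudent = seededShuffle(questions, seedFor("quiz-123", "student-42"));
```

Run the shuffle on the server and send only the resulting order, so the seed and the full question bank never reach the client.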

Interoperability:

- LTI 1.3: act as an LTI tool so an existing LMS (the LTI platform) can launch your classroom.

- SCORM/xAPI: import legacy content; emit xAPI statements for attempts and scores.

Example xAPI statement:

{

"actor": { "mbox": "mailto:student@example.com" },

"verb": { "id": "http://adlnet.gov/expapi/verbs/answered", "display": { "en-US": "answered" } },

"object": { "id": "https://example.com/quiz/123/q/5" },

"result": { "success": true, "score": { "scaled": 0.8 } },

"context": { "contextActivities": { "parent": [{ "id": "https://example.com/course/intro" }] } },

"timestamp": "2025-01-01T10:00:00Z"

}
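
A small builder keeps statements like the one above consistent across the codebase. This sketch mirrors the example's fields; the input type and function name are illustrative, and it checks the xAPI rule that `score.scaled` must lie between -1 and 1.

```typescript
interface AnsweredInput {
  studentEmail: string;
  questionUrl: string;
  courseUrl: string;
  correct: boolean;
  rawScore: number;
  maxScore: number;
  answeredAt: Date;
}

function buildAnsweredStatement(input: AnsweredInput) {
  if (input.maxScore <= 0) {
    throw new Error("maxScore must be positive");
  }
  const scaled = input.rawScore / input.maxScore;
  if (scaled < -1 || scaled > 1) {
    throw new Error("scaled score must be between -1 and 1");
  }
  return {
    actor: { mbox: "mailto:" + input.studentEmail },
    verb: {
      id: "http://adlnet.gov/expapi/verbs/answered",
      display: { "en-US": "answered" },
    },
    object: { id: input.questionUrl },
    result: { success: input.correct, score: { scaled: scaled } },
    context: { contextActivities: { parent: [{ id: input.courseUrl }] } },
    timestamp: input.answeredAt.toISOString(),
  };
}

const statement = buildAnsweredStatement({
  studentEmail: "student@example.com",
  questionUrl: "https://example.com/quiz/123/q/5",
  courseUrl: "https://example.com/course/intro",
  correct: true,
  rawScore: 4,
  maxScore: 5,
  answeredAt: new Date(Date.UTC(2025, 0, 1, 10, 0, 0)),
});
```

Sending the statement to a Learning Record Store is left out here because the endpoint and authentication depend on the LRS you choose.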

Step 9: Scheduling, Notifications, and Calendars

Scheduling:

- Class creation with time zones; display start time in user’s locale.

- ICS invites: attach to emails so users can add to personal calendars.

- Recurring events: weekly/biweekly patterns with exceptions.
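
A single‑event ICS attachment is plain text and can be generated without a library. The sketch below writes times in UTC so each calendar client converts them to the user's zone; the `PRODID` value and field names are placeholders, and it omits line folding, time‑zone components, and recurrence rules that a production generator would need.

```typescript
interface ClassInvite {
  uid: string; // stable unique ID for the event (hypothetical format)
  title: string;
  startsAt: Date;
  endsAt: Date;
  joinUrl: string;
  createdAt: Date;
}

function pad2(n: number): string {
  return n < 10 ? "0" + n : String(n);
}

// Format a Date as an iCalendar UTC timestamp such as 20250101T100000Z.
function icsUtc(d: Date): string {
  return (
    String(d.getUTCFullYear()) + pad2(d.getUTCMonth() + 1) + pad2(d.getUTCDate()) +
    "T" + pad2(d.getUTCHours()) + pad2(d.getUTCMinutes()) + pad2(d.getUTCSeconds()) + "Z"
  );
}

// Escape backslashes, semicolons, commas, and newlines in text values.
function icsEscape(text: string): string {
  return text
    .replace(/\\/g, "\\\\")
    .replace(/;/g, "\\;")
    .replace(/,/g, "\\,")
    .replace(/\r?\n/g, "\\n");
}

function buildIcs(invite: ClassInvite): string {
  const lines = [
    "BEGIN:VCALENDAR",
    "VERSION:2.0",
    "PRODID:-//Example Classroom//EN", // placeholder product identifier
    "BEGIN:VEVENT",
    "UID:" + invite.uid,
    "DTSTAMP:" + icsUtc(invite.createdAt),
    "DTSTART:" + icsUtc(invite.startsAt),
    "DTEND:" + icsUtc(invite.endsAt),
    "SUMMARY:" + icsEscape(invite.title),
    "URL:" + invite.joinUrl,
    "END:VEVENT",
    "END:VCALENDAR",
  ];
  return lines.join("\r\n") + "\r\n"; // iCalendar lines end with CRLF
}
```

Keeping the `UID` stable across updates lets calendar clients replace the existing event instead of adding a duplicate when a class is rescheduled.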

Notifications:

- Email: Use a reliable provider; set SPF/DKIM/DMARC; handle bounces.

- Push: Web push for browsers; APNs/FCM for mobile apps.

- In‑app: Bell icon with unread counts; digest summaries.

Calendar integrations:

- Google/Microsoft calendar via OAuth; ask only for minimal scopes; respect user revocation.

- Two‑way sync: update the calendar event when the class time changes and notify participants.

Reliability:

- Job runners (Sidekiq/Bull/Celery) for reminders 24 hours and 10 minutes before class.

- Idempotent jobs to avoid duplicate emails.
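
Idempotency for reminders usually comes down to a deterministic key per reminder plus a "have we sent this key?" check. The sketch below keeps that check in memory for illustration; in a real job runner it would be a unique database constraint or an atomic set‑if‑absent in Redis. All names here are hypothetical.

```typescript
type ReminderKind = "24h" | "10m";

interface ReminderJob {
  key: string; // idempotency key
  classId: string;
  userId: string;
  kind: ReminderKind;
  sendAtMs: number;
}

const REMINDER_OFFSETS: { kind: ReminderKind; beforeMs: number }[] = [
  { kind: "24h", beforeMs: 24 * 60 * 60 * 1000 },
  { kind: "10m", beforeMs: 10 * 60 * 1000 },
];

// Plan both reminders for one participant of one class.
function planReminders(classId: string, userId: string, startAtMs: number): ReminderJob[] {
  return REMINDER_OFFSETS.map((o): ReminderJob => ({
    // Including the start time means a rescheduled class gets fresh reminders.
    key: ["reminder", classId, userId, o.kind, String(startAtMs)].join(":"),
    classId: classId,
    userId: userId,
    kind: o.kind,
    sendAtMs: startAtMs - o.beforeMs,
  }));
}

// In-memory stand-in for a durable "already sent" store.
class SentLog {
  private seen: Record<string, boolean> = {};

  // Returns true only the first time a key is seen.
  markIfNew(key: string): boolean {
    if (this.seen[key] === true) return false;
    this.seen[key] = true;
    return true;
  }
}

// Sends at most once per key, even if the job is retried or enqueued twice.
function deliverOnce(
  job: ReminderJob,
  log: SentLog,
  send: (job: ReminderJob) => void
): boolean {
  if (!log.markIfNew(job.key)) return false;
  send(job);
  return true;
}
```

Marking before sending gives at‑most‑once delivery; if a missed reminder is worse than a duplicate, mark after a successful send instead and accept occasional repeats.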

Step 10: Analytics and Learning Insights

Start with questions, not charts. What will help teachers teach better and students learn faster?

Key metrics:

- Attendance: join time, leave time, total minutes.

- Engagement: chat messages sent, hand raises, poll participation, whiteboard edits.

- Media quality: average bitrate, packet loss, reconnects; provide MOS‑like indicators.

- Assessments: quiz scores, item difficulty, time per question.

Data pipeline:

- Event collector at the edge; send to Kafka/PubSub or directly to a warehouse for small scale.

- ETL/ELT with dbt; warehouse in BigQuery/Snowflake/Redshift.

- Dashboards: Metabase, Superset, or Looker for aggregated insights.

Privacy and ethics:

- Use differential privacy or aggregation thresholds before exposing class‑level analytics.

- Avoid “individual surveillance dashboards.” Focus on support signals (e.g., who might need outreach).
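
An aggregation threshold is the simpler of the two options above: groups smaller than a minimum size are suppressed before a report is shown. The sketch below assumes a threshold chosen by the caller and illustrative field names; it does not implement differential privacy.

```typescript
interface GroupStat {
  group: string;
  learners: number;
  avgQuizScore: number;
}

interface ReportedStat {
  group: string;
  learners: number | null;
  avgQuizScore: number | null;
  suppressed: boolean;
}

// Hide the numbers for any group with fewer learners than minGroupSize.
function applyMinGroupSize(stats: GroupStat[], minGroupSize: number): ReportedStat[] {
  return stats.map((s): ReportedStat => {
    if (s.learners < minGroupSize) {
      return { group: s.group, learners: null, avgQuizScore: null, suppressed: true };
    }
    return {
      group: s.group,
      learners: s.learners,
      avgQuizScore: s.avgQuizScore,
      suppressed: false,
    };
  });
}

const report = applyMinGroupSize(
  [
    { group: "Section A", learners: 24, avgQuizScore: 0.81 },
    { group: "Section B", learners: 3, avgQuizScore: 0.42 },
  ],
  5
);
// Section B is reported as suppressed because it has fewer than 5 learners.
```

Suppressed rows stay in the output so dashboards can show "not enough data" rather than silently dropping a group.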

Step 11: Accessibility and Inclusive Design

Treat accessibility as a first‑class feature, not a backlog chore.

Core practices:

- WCAG 2.2 AA compliance: color contrast, keyboard operability, focus visible, reflow on mobile.

- Captions: Offer live captions via ASR (e.g., cloud speech APIs). Allow transcript download with consent.

- Screen reader support: ARIA labels for controls; announce state changes (recording on/off, hand raised).

- Keyboard shortcuts: Mute/unmute, raise hand, focus chat.

- Bandwidth accommodations: audio‑only mode, text transcripts, low‑res video layers.

Localization:

- i18n for UI strings; date/time/number formats per locale.

- Allow multi‑language captions; let students set their preferred language.

Testing:

- Run automated axe tests; conduct manual keyboard‑only passes; test with NVDA/VoiceOver.

Step 12: Security, Privacy, and Compliance

Authentication and authorization:

- Support email/password with strong password policies; rate limit login attempts.

- SSO: SAML/OIDC for schools and enterprises; SCIM for user provisioning.

- Role‑based access control: Admin/Teacher/Student; fine‑grained permissions on resources.

- Token strategy: Short‑lived access tokens (JWT) with refresh tokens; rotate keys.
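
Role‑based access control with per‑course permissions can be sketched as a role‑to‑actions table plus an enrollment lookup. The action names and the rule that admins bypass enrollment are assumptions of this example; the enrollment shape follows the Enrollments table in the data model.

```typescript
type ClassroomRole = "admin" | "teacher" | "student";
type ClassroomAction =
  | "room:lock"
  | "chat:post"
  | "chat:delete_any"
  | "assignment:submit"
  | "assignment:grade";

const ROLE_ACTIONS: Record<ClassroomRole, ClassroomAction[]> = {
  admin: ["room:lock", "chat:post", "chat:delete_any", "assignment:submit", "assignment:grade"],
  teacher: ["room:lock", "chat:post", "chat:delete_any", "assignment:grade"],
  student: ["chat:post", "assignment:submit"],
};

interface CourseEnrollment {
  userId: string;
  courseId: string;
  role: "teacher" | "student";
}

function roleAllows(role: ClassroomRole, action: ClassroomAction): boolean {
  return ROLE_ACTIONS[role].indexOf(action) !== -1;
}

// Deny by default: non-admins need an enrollment in the course,
// and the enrollment role (not the global role) decides what is allowed.
function canPerform(
  user: { id: string; role: ClassroomRole },
  courseId: string,
  action: ClassroomAction,
  enrollments: CourseEnrollment[]
): boolean {
  if (user.role === "admin") return roleAllows("admin", action);
  const enrollment = enrollments.filter(
    (e) => e.userId === user.id && e.courseId === courseId
  )[0];
  if (!enrollment) return false;
  return roleAllows(enrollment.role, action);
}
```

Run this check on the server for every state‑changing request and for signaling messages such as mute; hiding a button in the UI is not access control.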

Network and app security:

- TLS everywhere; HSTS and secure cookies; CSP to reduce XSS risk; CSRF protection for state‑changing requests.

- Validate uploads (MIME type checks, antivirus scanning); store with private ACLs.

- Rate limit join attempts per class; lock rooms after start if needed.

Media security:

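- WebRTC encrypts media with DTLS‑SRTP, but only hop by hop: a standard SFU decrypts incoming packets and re‑encrypts them for each subscriber, so the server can access media. For end‑to‑end encryption, use insertable streams (encoded transforms) where browsers support them, and accept trade‑offs such as losing server‑side recording and transcription.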

Compliance:

- FERPA: limit disclosure of education records; role‑based visibility.

- COPPA: parental consent for under‑13 users; minimal data collection.

- GDPR: lawful basis, DPA with customers, data retention and deletion, data subject rights workflow.

- Recording consent banners and regional data residency options.

Auditing:

- Immutable audit logs for admin actions and room moderation.

- Alerts on suspicious activity (e.g., bulk download, many failed logins).

Step 13: Performance, Scale, and Reliability

Plan for growth without over‑engineering day one.

Scaling media:

- Horizontal SFU clusters; route participants to nearest region for latency.

- Use a global TURN footprint; cache credentials; monitor relay usage.

- Implement graceful degradation: downgrade video layers before dropping audio.

App scalability:

- Stateless API pods behind a load balancer; sticky sessions only for WebSockets if needed.

- Redis for session/pub‑sub; ensure persistence of critical events to Postgres.

- CDN for static assets; pre‑warm edge caches before major events.

Reliability:

- Health checks and circuit breakers for CPaaS dependencies.

- Error budgets and SLOs (e.g., 99.9% monthly availability for join flow, 99.5% for recording processing).

- Backpressure strategies: limit concurrent publishes, cap max videos on screen.
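
An SLO only becomes actionable once it is translated into an error budget. The sketch below uses a time‑based reading of availability (minutes of allowed unavailability per window); a request‑based budget, counting failed joins, works the same way with counts instead of minutes. Function names are illustrative.

```typescript
// Minutes of allowed unavailability in a window for a given SLO.
function errorBudgetMinutes(sloPercent: number, windowDays: number): number {
  if (sloPercent <= 0 || sloPercent >= 100) {
    throw new Error("sloPercent must be between 0 and 100 (exclusive)");
  }
  const windowMinutes = windowDays * 24 * 60;
  return (windowMinutes * (100 - sloPercent)) / 100;
}

// Fraction of the budget still unspent (0 means the budget is exhausted).
function budgetRemainingFraction(
  sloPercent: number,
  windowDays: number,
  badMinutesSoFar: number
): number {
  const budget = errorBudgetMinutes(sloPercent, windowDays);
  return Math.max(0, 1 - badMinutesSoFar / budget);
}

// Over a 30-day window:
const joinFlowBudget = errorBudgetMinutes(99.9, 30); // roughly 43 minutes
const recordingBudget = errorBudgetMinutes(99.5, 30); // roughly 216 minutes
```

A common practice is to slow feature rollouts once the remaining fraction gets low and spend the time on reliability work instead.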

Monitoring:

- Metrics: join success rate, median/95th‑percentile join time, ICE failure rate, TURN ratio, packet loss, reconnects.

- Logs: structured JSON; correlation IDs from client to SFU to recorder.

- Traces: instrument critical flows (join, publish, subscribe, record start/stop).
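
Several of the metrics above can be computed from a flat list of join attempts. This sketch uses the nearest‑rank method for percentiles, which always returns an observed value; the `JoinAttempt` shape is an assumption about what your client reports.

```typescript
// Nearest-rank percentile: the smallest sample with at least p% of samples at or below it.
function percentile(samples: number[], p: number): number {
  if (samples.length === 0) throw new Error("no samples");
  if (p <= 0 || p > 100) throw new Error("p must be in (0, 100]");
  const sorted = samples.slice().sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100); // 1-based
  return sorted[rank - 1] as number;
}

interface JoinAttempt {
  ok: boolean;
  joinTimeMs: number; // time from click to first media, for successful joins
  usedTurnRelay: boolean;
}

function summarizeJoins(attempts: JoinAttempt[]) {
  const successes = attempts.filter((a) => a.ok);
  const times = successes.map((a) => a.joinTimeMs);
  const relayed = successes.filter((a) => a.usedTurnRelay).length;
  return {
    joinSuccessRate: attempts.length === 0 ? 0 : successes.length / attempts.length,
    medianJoinMs: times.length === 0 ? null : percentile(times, 50),
    p95JoinMs: times.length === 0 ? null : percentile(times, 95),
    turnRatio: successes.length === 0 ? 0 : relayed / successes.length,
  };
}
```

At larger scale you would compute these in Prometheus or the warehouse rather than in application code, but the definitions stay the same.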

Load testing:

- Use headless browser farms to simulate 50–200 participants; capture client CPU and bandwidth usage.

- Throttle networks to mimic real classrooms; validate SFU CPU headroom.

Step 14: Testing and QA Strategy

Test pyramid:

- Unit tests: utilities, reducers, permission checks.

- Integration tests: WebSocket signaling, DB transactions.

- E2E tests: join flow, chat, whiteboard edits, assignment submission; use Playwright or Cypress.

Media QA:

- Device lab: popular webcams, headsets, Chromebooks, iPads, Android phones.

- Browser matrix: latest two versions of Chrome/Edge/Firefox/Safari.

- Network chaos: emulate jitter, dropouts; ensure reconnection logic is resilient.

Automation examples:

- Synthetic canaries: small bots join a canary room hourly and report MOS‑like metrics.

- Golden recordings: compare waveform/frames to ensure encode settings haven’t regressed.

Step 15: Launch Plan, Pricing, and Operations

Soft launch:

- Recruit a pilot cohort (3–5 classes) with diverse devices and networks.

- Run real sessions with a fallback plan (e.g., backup Zoom link) for the first week.

- Collect structured feedback via a rubric: join reliability, A/V quality, feature usability.

Documentation and training:

- Short video walkthroughs for teachers and students.

- Quick start guides and troubleshooting tips (mic permissions, firewalls).

Support readiness:

- Tiered support: L1 for common issues, L2 for media debugging, L3 engineering.

- Status page; incident response runbooks; on‑call rotation.

Pricing models:

- Per active user/month (simple for schools).

- Per participant‑minute (maps to CPaaS costs if you assemble).

- Course‑based bundles for bootcamps.

Sample CPaaS math:

- If your average class is 15 participants for 60 minutes at $0.006/participant‑minute → $5.40 per class in media cost. Add TURN egress and recordings for a full picture.

Operations:

- Data retention policies (e.g., auto‑delete recordings after 180 days unless pinned).

- Backup/restore drills; test disaster recovery quarterly.

- Post‑incident reviews with clear action items.

Step 16: Roadmap Beyond MVP

Enhancements:

- Breakout rooms with teacher broadcast and timed returns.

- Advanced polling (free‑text word clouds, quizzes with leaderboards).

- Classroom templates (seminar, lab, language drills, pair programming).

- Gamification: badges for attendance streaks and participation.

AI‑assisted features:

- Live summaries and action items after each class (with opt‑in and privacy safeguards).

- Smart search across transcripts, whiteboard snapshots, and files.

- Real‑time translation for captions and chat.

Integrations:

- GitHub Classroom for coding courses; Google Drive/OneDrive for file management.

- SIS integration for roster sync; grade export to LMS.

Appendix: Example Data Models and APIs

Relational tables (PostgreSQL):

CREATE TABLE users (

id UUID PRIMARY KEY,

email TEXT UNIQUE NOT NULL,

password_hash TEXT,

auth_provider TEXT DEFAULT 'local',

role TEXT CHECK (role IN ('admin','teacher','student')) NOT NULL,

name TEXT NOT NULL,

locale TEXT DEFAULT 'en-US',

created_at TIMESTAMPTZ DEFAULT now()

);

CREATE TABLE courses (

id UUID PRIMARY KEY,

title TEXT NOT NULL,

description TEXT,

visibility TEXT CHECK (visibility IN ('private','org','public')) DEFAULT 'private'

);

CREATE TABLE enrollments (

user_id UUID REFERENCES users(id),

course_id UUID REFERENCES courses(id),

role TEXT CHECK (role IN ('teacher','student')) NOT NULL,

PRIMARY KEY (user_id, course_id)

);

CREATE TABLE classes (

id UUID PRIMARY KEY,

course_id UUID REFERENCES courses(id),

start_at TIMESTAMPTZ NOT NULL,

end_at TIMESTAMPTZ,

join_code TEXT UNIQUE,

recording_url TEXT

);

CREATE TABLE attendance (

id UUID PRIMARY KEY,

class_id UUID REFERENCES classes(id),

user_id UUID REFERENCES users(id),

join_at TIMESTAMPTZ,

leave_at TIMESTAMPTZ

);
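
Because a student can reconnect or join from two devices, the attendance table may hold several overlapping rows per class. A minimal sketch of turning those rows into attended minutes follows; it assumes timestamps in milliseconds, clamps sessions to the scheduled class window, and treats a missing `leave_at` as "stayed until the end".

```typescript
interface AttendanceRow {
  joinAtMs: number;
  leaveAtMs: number | null; // null if no leave event was recorded
}

function attendedMinutes(
  rows: AttendanceRow[],
  classStartMs: number,
  classEndMs: number
): number {
  // Clamp each session to the class window and drop empty ones.
  const spans = rows
    .map((r) => ({
      start: Math.max(r.joinAtMs, classStartMs),
      end: Math.min(r.leaveAtMs === null ? classEndMs : r.leaveAtMs, classEndMs),
    }))
    .filter((s) => s.end > s.start)
    .sort((a, b) => a.start - b.start);

  // Sweep left to right, counting only time not already covered.
  let totalMs = 0;
  let coveredUntilMs = classStartMs;
  spans.forEach((s) => {
    const start = Math.max(s.start, coveredUntilMs);
    if (s.end > start) {
      totalMs += s.end - start;
      coveredUntilMs = s.end;
    }
  });
  return totalMs / 60000;
}
```

Dividing the result by the scheduled class length gives the attendance percentage used in success metrics.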

REST endpoints (sample):

POST /api/v1/auth/login

POST /api/v1/courses { title, description }

POST /api/v1/courses/:id/enroll { userId, role }

POST /api/v1/classes { courseId, startAt }

GET /api/v1/classes/:id/join-token

POST /api/v1/classes/:id/messages { content }

POST /api/v1/assignments { courseId, title, dueAt }

POST /api/v1/assignments/:id/submissions { files[] }

Signaling messages (WebSocket):

// Client → Server

{ "type": "join", "classId": "...", "token": "..." }

{ "type": "offer", "sdp": "..." }

{ "type": "candidate", "candidate": { ... } }

// Server → Client

{ "type": "answer", "sdp": "..." }

{ "type": "participant-joined", "user": { "id": "...", "name": "..." } }

{ "type": "mute", "targetId": "..." }
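
Messages arriving over a WebSocket are untrusted input, so it helps to validate them into a typed union before acting on them. The sketch below covers the client‑to‑server messages listed above; the candidate payload is kept as an opaque object, and the function and type names are illustrative.

```typescript
type ClientMessage =
  | { type: "join"; classId: string; token: string }
  | { type: "offer"; sdp: string }
  | { type: "candidate"; candidate: Record<string, unknown> };

function isRecord(value: unknown): value is Record<string, unknown> {
  return typeof value === "object" && value !== null && !Array.isArray(value);
}

// Returns a validated message, or null if the input is malformed or unknown.
function parseClientMessage(raw: string): ClientMessage | null {
  let data: unknown;
  try {
    data = JSON.parse(raw);
  } catch (err) {
    return null; // not valid JSON
  }
  if (!isRecord(data)) return null;

  const kind = data["type"];
  if (kind === "join") {
    const classId = data["classId"];
    const token = data["token"];
    if (typeof classId === "string" && typeof token === "string") {
      return { type: "join", classId: classId, token: token };
    }
    return null;
  }
  if (kind === "offer") {
    const sdp = data["sdp"];
    return typeof sdp === "string" ? { type: "offer", sdp: sdp } : null;
  }
  if (kind === "candidate") {
    const candidate = data["candidate"];
    return isRecord(candidate) ? { type: "candidate", candidate: candidate } : null;
  }
  return null; // unknown message type
}
```

Server‑only messages such as `mute` are rejected here on purpose: a client should not be able to send them, and whether a user may trigger a mute is decided by a permission check on the server.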

Troubleshooting: Common Pitfalls and How to Fix Them

- “I can’t turn on my camera” on Safari:

  - Likely a permissions problem or an insecure context. Ensure HTTPS; request access in response to a user gesture. Verify the camera isn’t in use by another tab or app.

- One‑way audio:

  - Inspect ICE candidates; TURN may be needed behind symmetric NAT or corporate firewalls. Verify TURN credentials and TCP/TLS fallback on 443/5349.

- Echo and feedback:

  - Enforce echo cancellation; auto‑mute when the same user joins from two devices in proximity. Show a feedback warning when the microphone input closely matches the speaker output.

- High CPU on teacher’s machine:

  - Limit the number of videos decoded at once; use active speaker + 6 thumbnails. Prefer hardware‑accelerated codecs when available.

- Whiteboard desync:

  - Use CRDTs; if a client’s state still differs from the server’s after a sync, reconcile by requesting a fresh snapshot.

- Time drift affecting quiz timers:

  - Use server‑synchronized time (keep servers on NTP and send server time on join); run timers client‑side for display but enforce deadlines server‑side.

- Mobile Safari screen share limitations:

  - Offer document camera mode or upload‑then‑present as fallback.

- Recordings missing audio:

  - Ensure the mixed audio track includes both remote and local audio; verify sample rates; handle mono/stereo consistently.

Checklist: A Step‑by‑Step Build Summary

- Define learner outcomes, constraints, and compliance needs.

- Prioritize MVP features: A/V, chat, whiteboard, content, assignments, scheduling.

- Decide architecture: CPaaS vs self‑hosted SFU vs embedded SDK.

- Choose stack: React + TypeScript, Node/Django, Postgres, Redis, S3, WebSocket.

- Design UX for clarity, accessibility, and low cognitive load.

- Implement WebRTC with robust signaling, STUN/TURN, simulcast.

- Build collaborative tools with OT/CRDT, moderation, and permissions.

- Add content library, assignments, quizzes, and interoperable standards (LTI, xAPI).

- Set up scheduling, ICS invites, and multi‑channel notifications.

- Instrument analytics for attendance, engagement, media quality, and assessments.

- Bake in accessibility (WCAG 2.2 AA), captions, keyboard navigation, low‑bandwidth mode.

- Lock down security: SSO, RBAC, encryption, CSP/CSRF, upload scanning, audit logs.

- Plan for scale: SFU clustering, autoscaling, CDN, observability, error budgets.

- Test thoroughly: unit/integration/E2E, media QA, network chaos, synthetic monitors.

- Launch in phases: pilot cohort, support readiness, documentation, incident runbooks.

- Iterate on feedback; plan roadmap for breakouts, advanced polls, AI assistance.

A great virtual classroom is not just a video grid with chat bolted on—it’s a thoughtfully orchestrated learning space where technology serves pedagogy. If you take the time to define your educational goals, choose the right architecture for your stage, and design for inclusivity and resilience, your first release can delight teachers and students alike. Ship the essentials, instrument everything, and iterate quickly. In education, trust is earned through reliability and empathy; your product should embody both.
